I got a DM from a journalist called Jordan Pearson this evening and what started out as a quick comment for an article turned into an investigation of an ongoing issue.

etherscan.io

Etherscan is ranked as the 1,379th site in the world according to Alexa, so they're pretty big! According to their About Us page this is what they do:

WHAT WE DO

EtherScan is a Block Explorer, Search, API and Analytics Platform for Ethereum, a decentralized smart contracts platform.

It was only a few hours ago when I got a DM from Jordan Pearson to ask me to take a look at something.

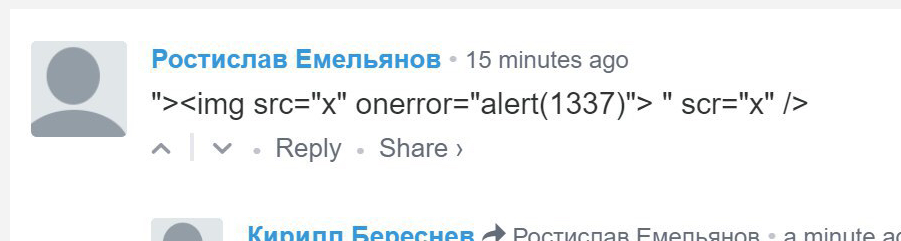

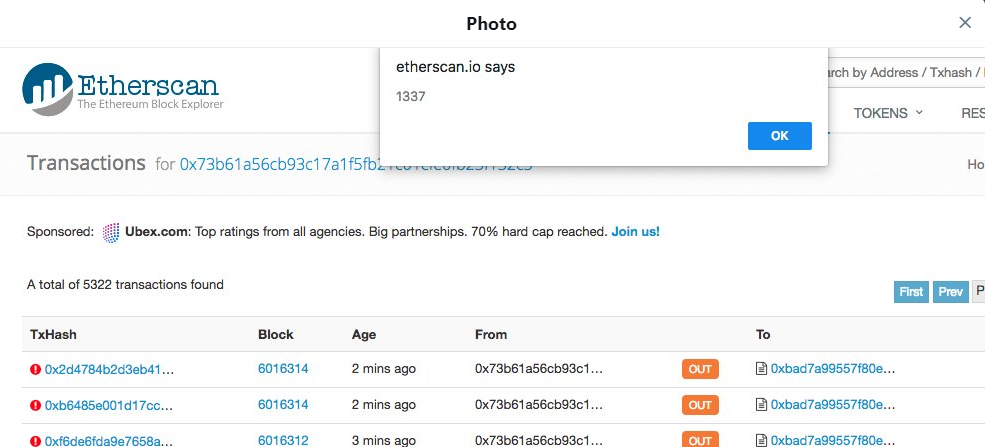

Now, as soon as I see something like this, it's pretty worrying. At first glance this quite clearly looks like there's some kind of XSS vuln on the page. It could be persistent or reflected at this point, but it's definitely executing on the etherscan.io origin as you can see from the alert box. Jordan was doing his responsible journalist thing and wanted to know what the impact of an attack like this could be: what it meant, what the attackers could do, and whether the information he had was accurate. Good on him for that!

Investigating the XSS vulnerability

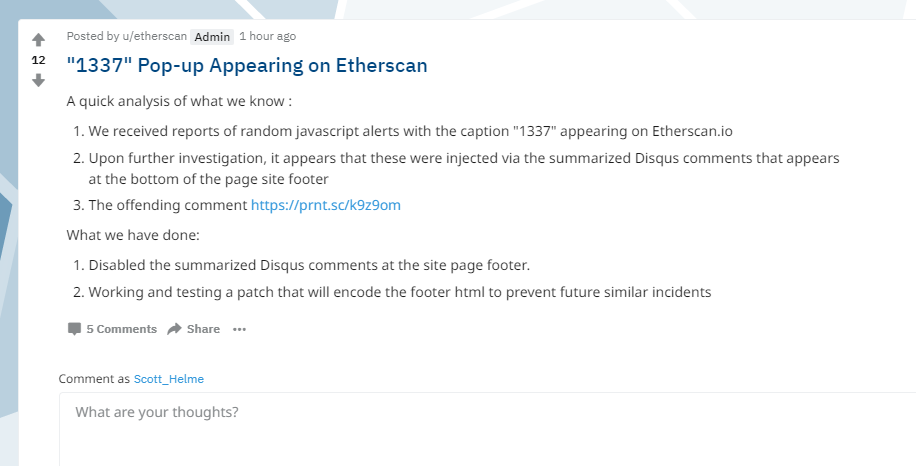

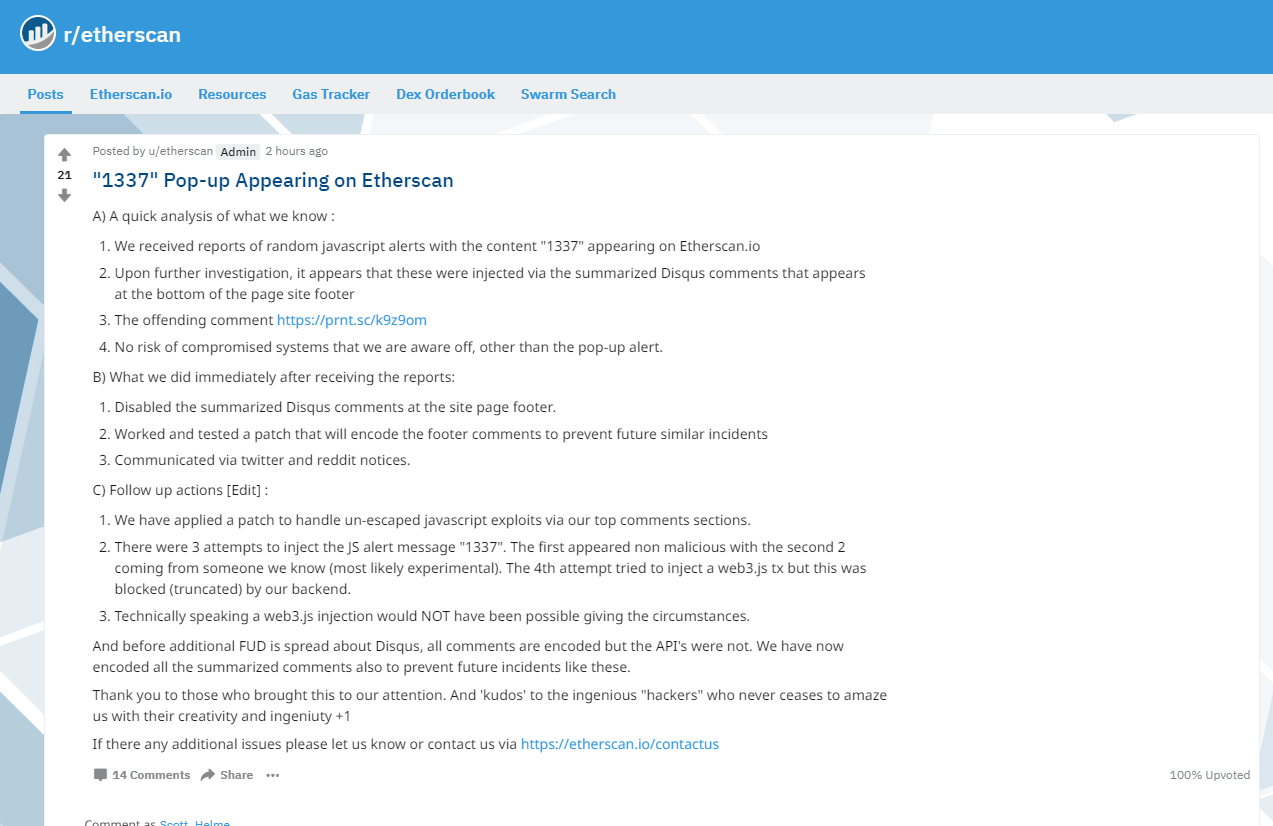

To get started, Jordan sent me a link to this Reddit post in which Etherscan had detailed the problem and notified users.

The linked post was also the pinned tweet on their Twitter timeline when I checked.

An quick update on the random "1337" script pop up on https://t.co/VAEURQyNAG https://t.co/3N222GMucu

— Etherscan.io (Not giving away Ether) (@etherscan) July 23, 2018

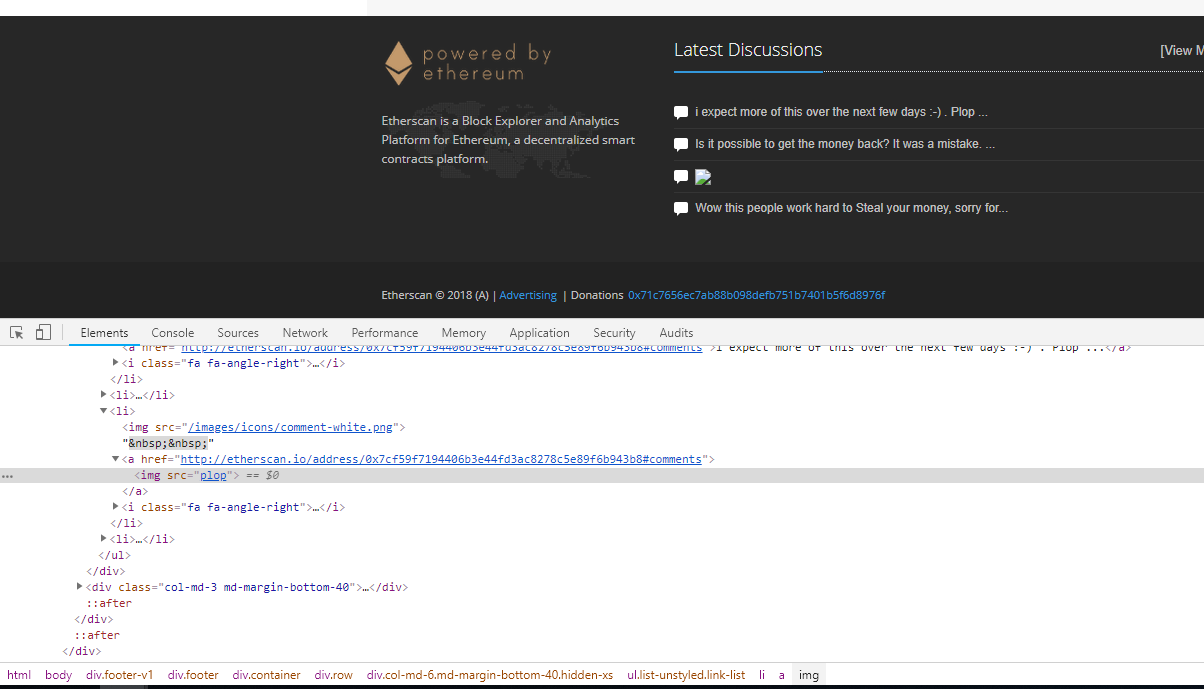

Now, this was no 'random popup' on their site. This was a demonstration that someone could inject script into their site, what we know as Cross-Site Scripting, or XSS. Their initial reaction was a good one: they'd identified that a Disqus comment was responsible for the injection of script, so they disabled the comment section in the footer of the site. This is the comment that caused the problem.

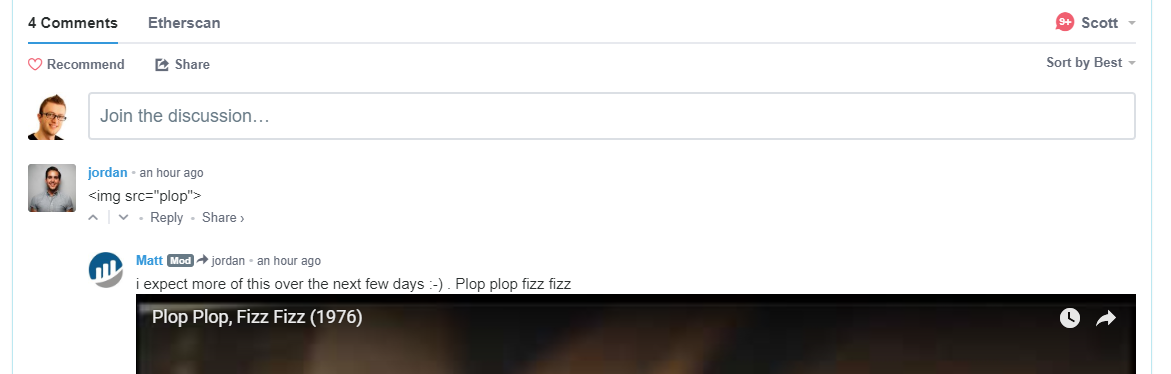

Now, this is interesting because I use Disqus comments right here on my blog and I've spent a lot of time testing and poking around to see what they can do. I got the feeling that Disqus was pretty robust and something like this wasn't very likely. Also, the comment shown in the image is being rendered as text. If it was an actual image tag being treated as HTML, you wouldn't be able to see it as text like we do. Something fishy was going on, but I couldn't see what because the comment section had been removed.

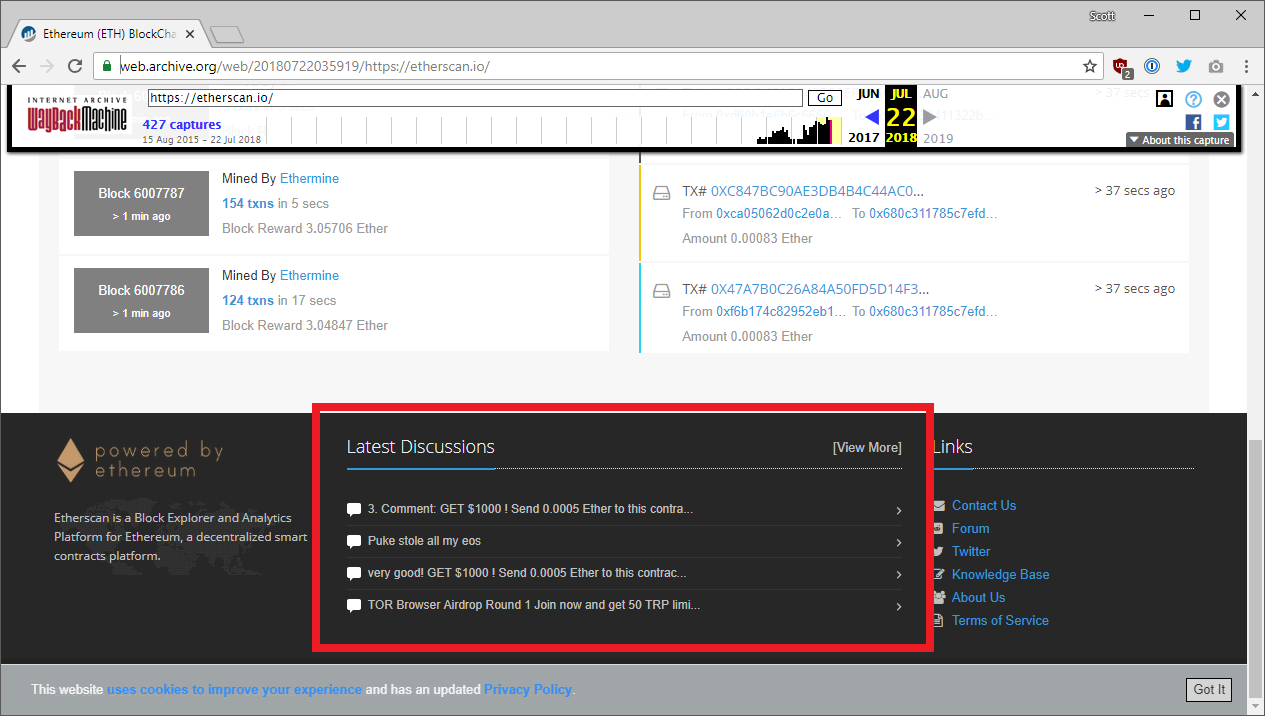

In a lot of scenarios like this, where I want to see what a site did before the present moment in time, I head over to the Wayback Machine and take a look at what the site looked like previously.

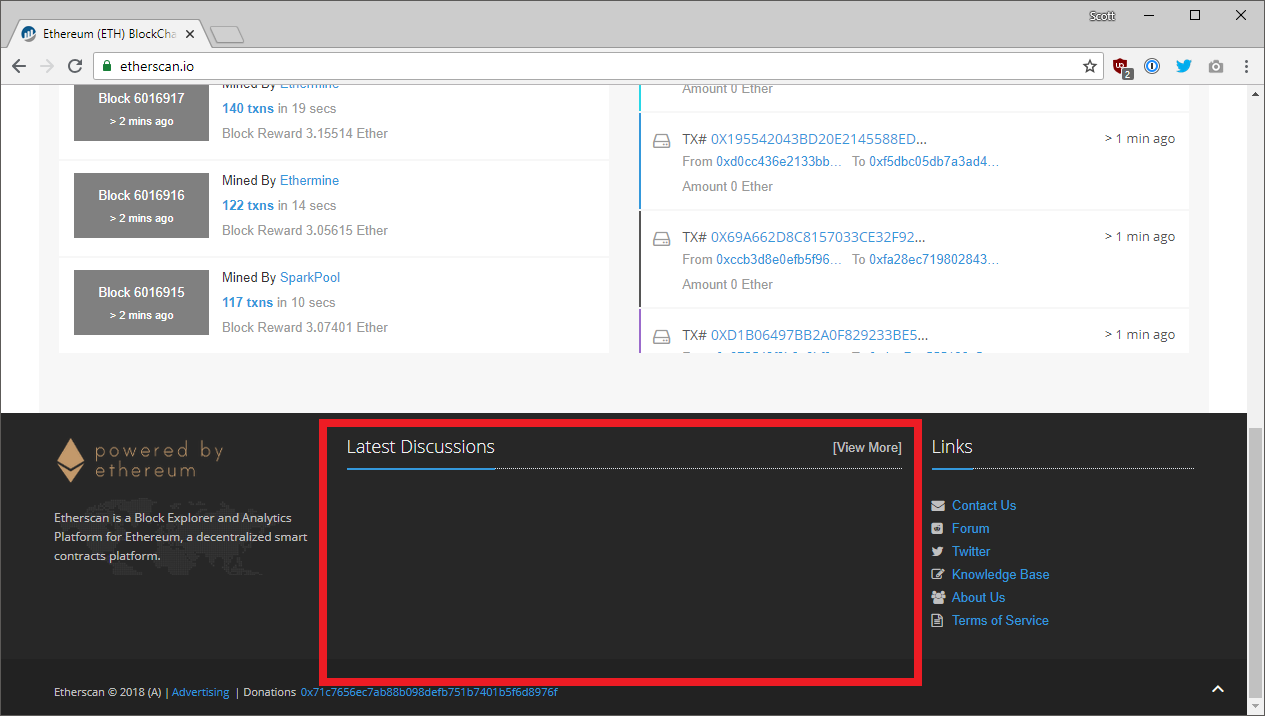

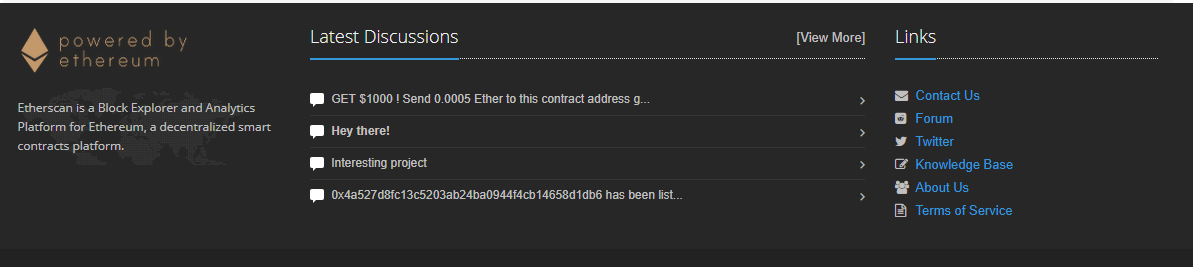

I only had to go back to yesterday to see what the site looked like and I was already getting a feel for what the issue was. It didn't look like a standard Disqus integration but instead it looked like a custom one. Perhaps they were fetching comments with the API and rendering them into the page footer instead. It did seem odd that they mentioned in the Reddit post that they needed to encode the footer HTML to prevent this so my guess was definitely that they were trying to do a custom integration. The thing is, whilst this was happening, the comments suddenly appeared on the site again. Oh wow, a fix deployed already? That was fast! So I tried to post a test comment...

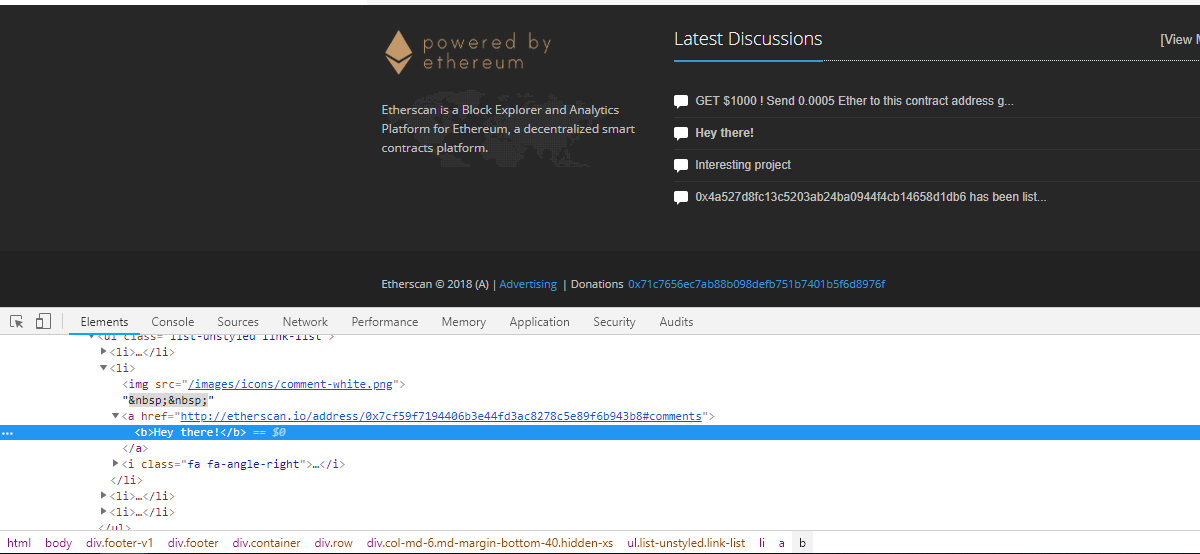

Of course I didn't post a script or anything hostile, just a benign bit of HTML to make a bold comment and see if they'd render that directly into their page without escaping. That would give me proof of HTML injection.

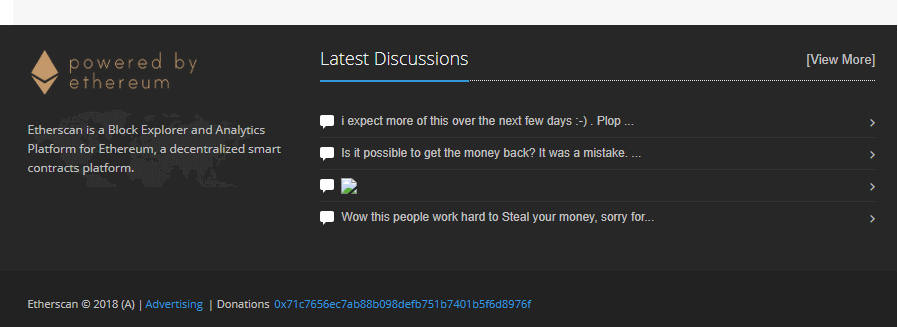

Yep, what the heck? Why is the comment section back if the site isn't fixed yet?! Just to make sure I wasn't going mad, and to verify my findings before I started making noise about something that wasn't an issue, I asked Jordan to verify it by doing a similar thing himself. I asked him to post a comment with an image tag that had a broken src attribute so it wouldn't load anything, but the browser would show a broken image to prove the HTML was injected.

Yep, the HTML comment was being injected into the footer and the footer is present on every page of the site too!

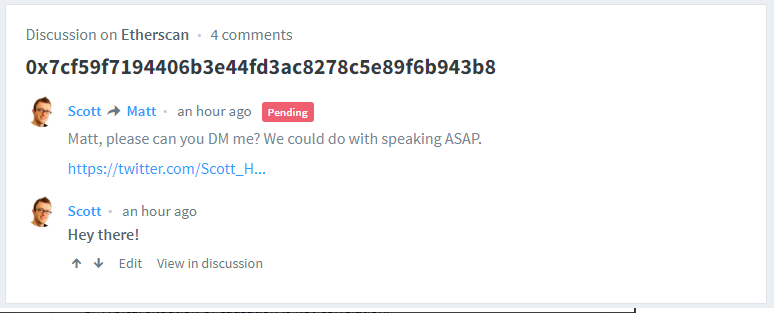

It was time to contact the site and rather handily, a mod had commented on Jordan's image post.

I decided to reply and see if I could get them to contact me. It didn't seem they were taking the risk very seriously, but it was worth a shot. The comment is still pending and no one has contacted me.

The fix

It was around this time that the Reddit post was updated with further details.

It seems that the fix was specifically to 'handle un-escaped javascript exploits' via their comment system. Now, this doesn't fill me with huge amounts of confidence, but I'm not exactly sure how they're doing it either. If they were escaping all HTML in the comments then the bold text and image comments wouldn't have worked. That makes me lean more towards them just trying to catch script tags, which is fraught with difficulty. Want to know how easy it is to bypass XSS filters like these? Just check out the OWASP XSS Filter Evasion Cheat Sheet! Looking at the rest of the update it seems that someone had tried 3 times to inject the '1337' alert and web3.js too. They should probably count themselves lucky that whoever found this didn't do something more sinister...
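To show why catching script tags alone is so fragile, here's a minimal sketch. I don't know what Etherscan's filter actually looks like, so `naive_strip_scripts` is a hypothetical stand-in for "remove anything that looks like a script tag", and the payloads are the kind of thing you'll find in the cheat sheet.

```python
import re

# Hypothetical blocklist filter: removes <script>...</script> blocks and nothing else.
# This is an assumption for illustration, not Etherscan's actual code.
SCRIPT_TAG = re.compile(r"<script\b[^>]*>.*?</script\s*>", re.IGNORECASE | re.DOTALL)


def naive_strip_scripts(comment: str) -> str:
    """Strip script elements from a comment in a single pass."""
    return SCRIPT_TAG.sub("", comment)


payloads = [
    "<script>alert(1337)</script>",                    # the obvious one, which is caught
    "<img src=x onerror=alert(1337)>",                 # event handler, no script tag needed
    "<svg onload=alert(1337)>",                        # same idea on a different element
    "<scr<script></script>ipt>alert(1337)</script>",   # the filter reassembles the tag for us
]

for payload in payloads:
    print(repr(payload), "->", repr(naive_strip_scripts(payload)))
```

Only the first payload is neutralised. The event handler payloads pass straight through, and the last one comes out the other side as a working script tag because the filter removes the inner pair and joins the pieces around it.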

The problem

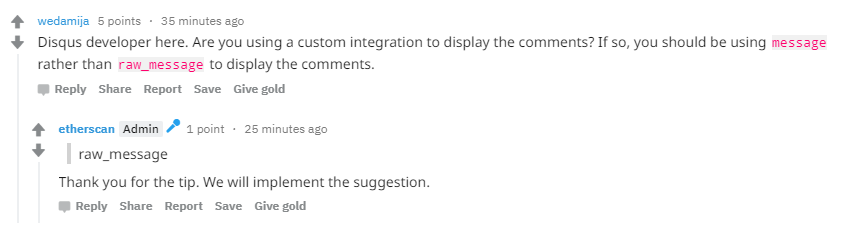

Looking at the comments in the Reddit thread it does seem that the custom Disqus integration was to blame.

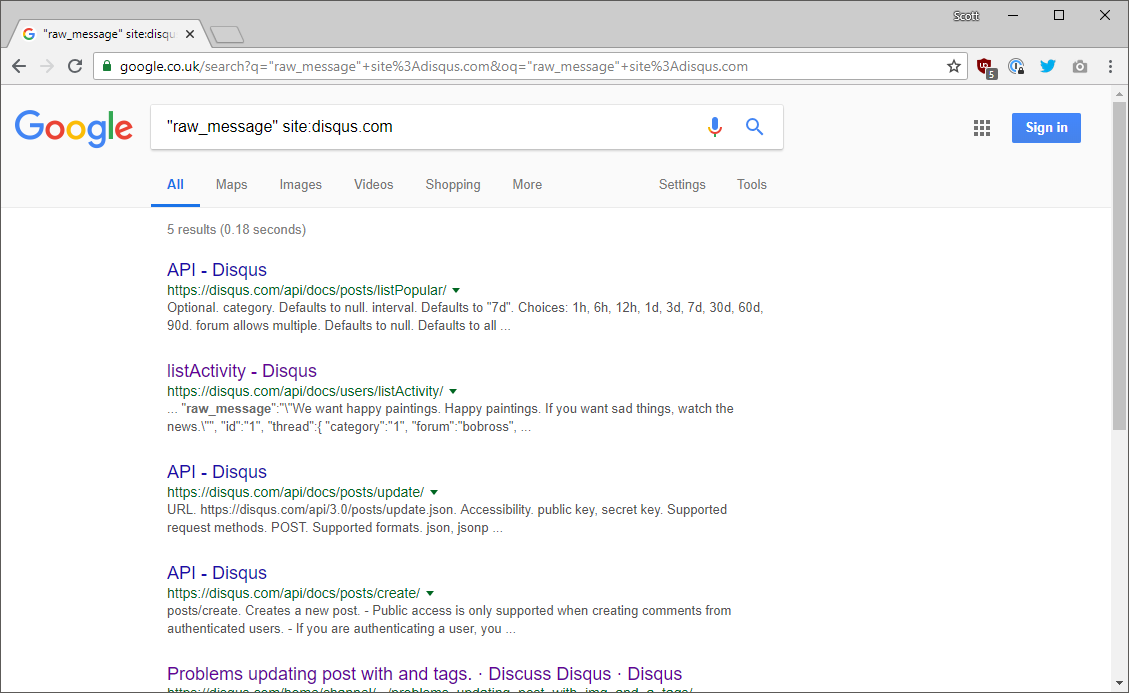

My guess is that they were using something like the listPosts API endpoint to fetch comments from Disqus and then grab the top few to insert into the footer section on their site. It seems that they were taking the raw_message instead of message which I guess is probably escaped for safe output into a HTML context. I say "I guess is probably escaped" because I'm struggling to actually find the difference between raw_message and message in the Disqus documentation. Even Google is struggling to find something about it.

You can see the results here, but having skimmed through Google and looked over the API docs, I cannot find a documented difference between these two values in the returned JSON. That's not to say it doesn't exist, but if it's this hard to find, I can see why someone might not find it. If this is the cause of the issue then fixing it should be fairly simple: switch from using raw_message to message instead. Still though, I'd always escape the output no matter who/where I got it from because it's user provided and thus evil. No matter which way you cut it though, this is a traditional XSS risk that could have been avoided.
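As a rough sketch of what "always escape the output" means for a footer like this one, here's how the comments could be rendered with everything HTML-escaped first. The dictionary shape is my assumption: I'm keeping the `raw_message` field name from above, but `author_name` and the surrounding markup are hypothetical and not taken from the Disqus API or from Etherscan's code.

```python
import html


def render_footer_comments(posts: list[dict]) -> str:
    """Build a list of comments, escaping every user-controlled value."""
    items = []
    for post in posts:
        # html.escape converts &, <, > and both quote characters to entities,
        # so the browser shows the comment as text rather than parsing it.
        author = html.escape(str(post.get("author_name", "")))
        text = html.escape(str(post.get("raw_message", "")))
        items.append(f"<li><strong>{author}</strong>: {text}</li>")
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


# Hypothetical comment data, shaped like the image tag test described earlier.
sample = [{"author_name": "mallory", "raw_message": "<img src=x onerror=alert(1337)>"}]
print(render_footer_comments(sample))
```

With this in place it doesn't matter which field the comment came from or whether the upstream service escaped it already. The worst case is a comment that shows a few extra entities, which is a much better failure mode than script running on every page.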

CSP to the rescue

Yep, it's CSP again! This is exactly the kind of thing that CSP was built to stop and it would have made a great defense here even though traditional mechanisms like output encoding were missed/forgotten. A properly defined CSP would have neutralised the inline script here because inline script can be controlled on a site that defines a proper CSP. If the injected script tag was loaded from a 3rd party origin then the script would have been blocked because the origin wouldn't have been found in the CSP whitelist. Either way, the attack would have been neutralised and again, this is exactly what CSP set out to do.
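For illustration, here's a small sketch that assembles a policy along those lines. The directive names and keywords are standard CSP, but the origins are placeholders I've made up, and a real site would need to list whatever third parties it genuinely depends on.

```python
def build_csp(directives: dict[str, list[str]]) -> str:
    """Join directives into a Content-Security-Policy header value."""
    return "; ".join(f"{name} {' '.join(sources)}" for name, sources in directives.items())


policy = build_csp({
    "default-src": ["'self'"],
    # No 'unsafe-inline' here, so inline <script> blocks and inline event
    # handlers such as onerror= are not allowed to run.
    "script-src": ["'self'", "https://cdn.example.com"],  # placeholder origin
    "object-src": ["'none'"],
    "base-uri": ["'self'"],
})

print("Content-Security-Policy:", policy)
```

The important part is what's missing: because `script-src` doesn't include `'unsafe-inline'`, an injected inline script or event handler won't execute, and a script tag pointing at an origin that isn't in the list won't load.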

What's even better is that they could have been alerted to this attack a lot sooner if they'd been using CSP reporting. When the browser blocked the hostile script it could send a report out to a service like Report URI and provide immediate information that there is script on the page that shouldn't be there. Not only is the user protected against the attack but the site owner would find out right away that there was a problem. The script payload here was not stealthy in the least bit, popping a JS alert on the page is a dead giveaway that there is a script there doing bad things. Just think if it hadn't popped that alert though. What if it had injected malware, a malicious redirect, modified or tampered with the page or installed a keylogger? There are countless ways this could have gone very, very wrong but yet again, this was a lucky escape.
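To give a feel for what arrives at the reporting endpoint, here's a minimal sketch that summarises a violation report. Browsers that support the `report-uri` directive POST a JSON body with a `csp-report` object; the sample values below are invented for the example, and the function name is my own.

```python
import json


def summarise_csp_report(body: str) -> str:
    """Turn a CSP violation report body into a one-line summary."""
    report = json.loads(body).get("csp-report", {})
    directive = report.get("violated-directive", "?")
    page = report.get("document-uri", "?")
    blocked = report.get("blocked-uri", "?")
    return f"{directive} violated on {page} by {blocked}"


# Made-up report, shaped like what a browser sends when it blocks an inline script.
sample_body = json.dumps({
    "csp-report": {
        "document-uri": "https://example.com/",
        "violated-directive": "script-src 'self'",
        "blocked-uri": "inline",
    }
})

print(summarise_csp_report(sample_body))
```

A sudden run of reports like this, all for inline script on pages that shouldn't have any, is exactly the signal that something has been injected.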

We keep getting lucky

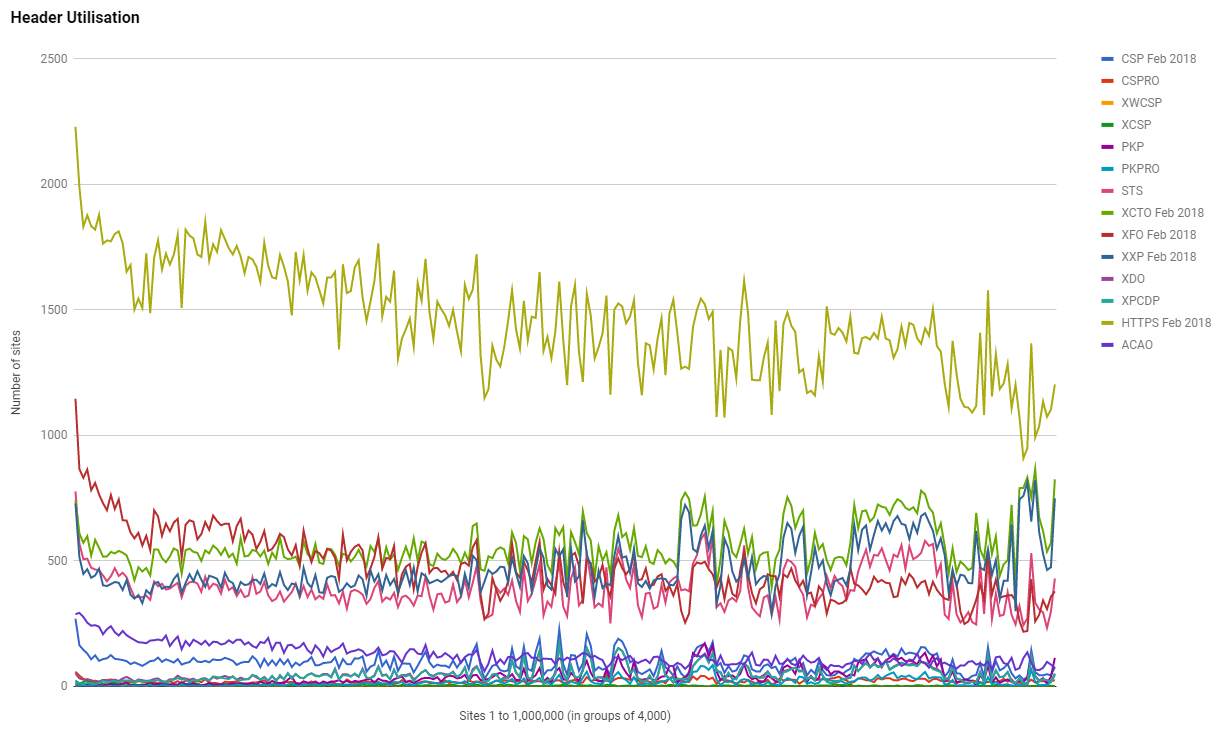

It was only a few months ago when I was talking about how 4,000+ government sites got hit with Cryptojacking after a piece of rogue JS installed a crypto miner on their site. Back then I detailed how CSP and SRI could have protected all of those government sites and to this day only a small handful of them have gone and deployed either of those protections. This is malicious script on government websites we're talking about and the response hasn't been great. Looking back at the data from my regular scans of the top 1 million sites on the web shows that we're not deploying these defensive mechanisms at scale either.
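For anyone who hasn't used SRI, the integrity value is just a base64-encoded hash of the exact file you expect to load. Here's a minimal sketch of computing one; the script contents and the CDN URL are placeholders.

```python
import base64
import hashlib


def sri_hash(content: bytes) -> str:
    """Return an SRI integrity value (sha384) for the given file contents."""
    digest = hashlib.sha384(content).digest()
    return "sha384-" + base64.b64encode(digest).decode("ascii")


# Placeholder file contents; in practice you'd read the real script from disk.
script = b"console.log('hello');\n"
integrity = sri_hash(script)

print(
    f'<script src="https://cdn.example.com/lib.js" '
    f'integrity="{integrity}" crossorigin="anonymous"></script>'
)
```

If the file on the third party's server changes by even a single byte, the hash no longer matches and the browser refuses to run it.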

The February 2018 scan shows that only 2.4% of sites are deploying CSP and as the rank of the site gets lower, there is less chance they deploy it. My guess is that across all sites worldwide we have less than 1% of them deploying CSP.

It's time to start deploying things like CSP a lot more than we are. This is another big example of an occasion where something very serious could have happened, but it didn't because we had luck on our side. One day, our luck is going to run out.