I recently wrote about Bloom Filters, the hugely space efficient, probabilistic data structures, and how great they can be. I wanted to create a demonstration of just how useful they could be and thought of a demo using the Pwned Passwords data from Have I Been Pwned.

Bloom Filters

I already have a lengthy blog post on Bloom Filters so if you want the really technical details, you should head over there first. In short through, a Bloom Filter is a very small data structure that can usually be kept in memory and allows you to query against it. The benefit is that Bloom Filters can store an enormous amount of data and let you query against it very quickly. The one 'catch' of a Bloom Filter though is that it can only tell if you an item is 'probably in the data set' or, 'definitely not in the data set', but that's still very useful.

Pwned Passwords

The Pwned Passwords collection is curated by Troy Hunt and you can query against a list of 613,584,246 real world passwords leaked in data breaches with the goal of preventing users of your service from choosing poor passwords. The API is simple and provides strong privacy guarantees whilst being pretty fast to query against. The Pwned Passwords data set is also available to download, coming down in its smallest version as an 11GB compressed file that expands out to 25.1 GB. That's a pretty big file to keep locally and query against so I figured if you were doing that, a Bloom Filter might be able to help.

Setting up the Bloom Filter

In order to create a Bloom Filter, it's good to know at least something about the intended data set you want to insert and query against. As mentioned in the detailed post on Bloom Filters, we have the following:

m = the size of the array

n = how many items will be placed in the array

k = how many hash functions you use

p = probability of a false positive

We can quite confidently say they we know n, as there are exactly 613,584,246 passwords in the data set, and we can also control p, our desired probability for a false positive. That means that k and m are then easier to calculate and will be decided for us based on what results in the most optimal configuration depending on whether you're concerned more about space complexity or time complexity. Let's take a look at some examples, first considering the impact of varying k.

m = 23627429658 (2.75GiB)

n = 613584246

k = 30

p = 0.00000001 (1 in 100,000,000)

m = 24172283057 (2.81GiB)

n = 613584246

k = 20

p = 0.00000001 (1 in 100,000,000)

m = 23524963270 (2.74GiB)

n = 613584246

k = 27

p = 0.00000001 (1 in 99,857,412)

As you can see here, decreasing the number of hashes k we use to insert items into the filter has the effect of increasing the memory requirements m in order to maintain our desired false positive probability p. The 'correct' choice here depends on whether you are more concerned about the space complexity (RAM needed to store the filter) or time complexity of a query (having to calculate 20 hashes vs. 30 hashes). You can tweak these numbers as much as you like based on your own constraints but I'm happy with the above example using k = 27 and p = 0.00000001 as we can say:

- The filter will require ~2.74GB of RAM.

- The filter has a false positive rate of 1 in ~100,000,000!

- The time complexity of a query operation is low.

Building the filter

To build the filter we simply need to iterate over each line in the Pwned Passwords data and insert the hash into the filter. Creating a filter for use like this is a one-time operation and until the Pwned Passwords data set changes, the filter will not need to be regenerated.

<?php

use Palicao\PhpRebloom\BloomFilter;

use Palicao\PhpRebloom\RedisClient;

use Palicao\PhpRebloom\RedisConnectionParams;

require __DIR__ . '/vendor/autoload.php';

$n = 613584246;

$p = 0.00000001;

$bloomFilter = new BloomFilter(

new RedisClient(

new Redis(),

new RedisConnectionParams('127.0.0.1', 6379)

)

);

$start = microtime(true);

$before = memory_get_usage();

$bloomFilter->reserve('pwned-bloom', $p, $n);

$after = memory_get_usage();

echo ($after - $before) . "\n";

$count = 0;

foreach (importData() as $hash) {

$bloomFilter->insert('pwned-bloom', $hash);

$count++;

echo $hash . ' ' . $count . "\n";

}

$end = microtime(true);

echo 'Execution time: ' . ($end - $start) / 60 . 'm';

function importData() {

$fh = fopen('input.txt', 'r');

while(!feof($fh)) {

yield strtok(trim(fgets($fh)), ':');

}

fclose($fh);

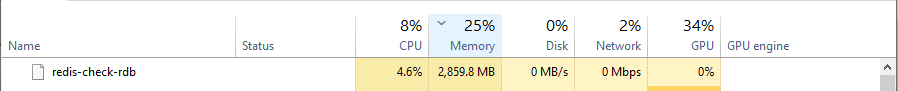

}The script reported that it was consuming ~2.8 GB of RAM, which is what we calculated, and that was also confirmed by Task Manager.

Creating the filter took almost 10 hours on my desktop running in WSL, which could easily be improved on, but as generating the filter is a one-time event and I let it run overnight, I'm not overly concerned about trying to improve this part.

Saving the filter

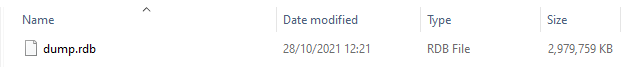

Using the SAVE command in Redis I created a snapshot containing the bloom filter for easy transport, sharing and backup.

redis-cli

127.0.0.1:6379> SAVE

OK

(119.55s)

I've also created a zip file version, even though there isn't any space saving to be had, to make sharing easier and the files will be linked at the bottom of the post along with the GitHub repo.

Querying the filter

The final step now, and the thing we've all been waiting for, is to start asking the Bloom Filter about some passwords!

<?php

use Palicao\PhpRebloom\BloomFilter;

use Palicao\PhpRebloom\RedisClient;

use Palicao\PhpRebloom\RedisConnectionParams;

require __DIR__ . '/vendor/autoload.php';

$bloomFilter = new BloomFilter(

new RedisClient(

new Redis(),

new RedisConnectionParams('127.0.0.1', 6379)

)

);

$candidates = [

'password1234', // pwned

'troyhuntsucks', // not pwned

'hunter1', // pwned

'scotthelmerules', // not pwned

'chucknorris', // pwned

];

foreach ($candidates as $candidate) {

$start = microtime(true);

$exists = $bloomFilter->exists('pwned-bloom', strtoupper(sha1($candidate)));

$end = microtime(true);

echo $candidate . ' ' . ($exists ? 'pwned' : 'not pwned') . ' in ' . ($end - $start) . "ms.\n";

}I've created an array of $candidates that I'm going to query against the filter and I've manually checked whether those candidate passwords are in the Pwned Passwords data set by both verifying it against the API and confirming whether it is present in the downloaded hash list. The only thing to do then is ask the question!

password1234 pwned in 0.00073099136352539ms.

troyhuntsucks not pwned in 0.00015020370483398ms.

hunter1 pwned in 0.00010013580322266ms.

scotthelmerules not pwned in 6.5088272094727E-5ms.

chucknorris pwned in 9.5129013061523E-5ms.That means my average query time against the filer was 0.000148677825927734ms! That's quite the impressive query time and taking that average, it means that I could, on my fairly basic setup here at home, query about 6,725,952 passwords against the filter each second!

Querying the API

Obviously querying the filter is going be faster as it doesn't involve the network (duh!), but I'm curious just how fast querying the filter is. If you're not familiar with this table, you should become familiar with it. I've seen various incarnations of it over the years but the underlying point always remains the same. It compares the speed of accessing data in various locations with a comparison to a more understandable unit for humans.

| Computer Time | Human Comparison | |

|---|---|---|

| 1 CPU cycle | 0.3 ns | 1 s |

| Level 1 cache access | 0.9 ns | 3 s |

| Level 2 cache access | 2.8 ns | 9 s |

| Level 3 cache access | 12.9 ns | 43 s |

| Main memory access | 120 ns | 6 min |

| Solid-state disk I/O | 50-150 μs | 2-6 days |

| Rotational disk I/O | 1-10 ms | 1-12 months |

| Internal Network | 2 ms | 70 days |

| Internet: SF to NYC | 40 ms | 4 years |

| Internet: SF to UK | 81 ms | 8 years |

| Internet: SF to Australia | 183 ms | 19 years |

| OS virtualization reboot | 4 s | 423 years |

| SCSI command time-out | 30 s | 3000 years |

| Hardware virtualization reboot | 40 s | 4000 years |

| Physical system reboot | 5 m | 32 millenia |

I've gone for a fair comparison of a main memory access vs. the internal network vs. the shortest Internet path of SFO to NYC. I added the Internal Network line myself to represent what I think is a reasonable response time if your Redis server was hosted locally somewhere and as the HIBP Pwned Passwords API runs on Cloudflare, so it would always be a short network path, I went for the shortest Internet path. Looking at those time comparisons now, you can see why we're seeing such a huge difference.

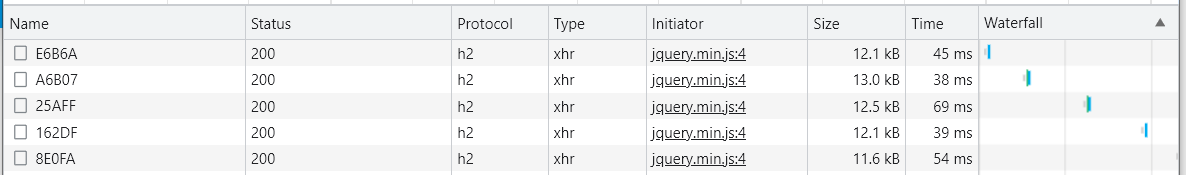

Taking those same 5 candidate passwords and running them against the Pwned Passwords API, considering our expectation from the above table, I got the following times in Chrome.

That's an average of 49ms to query against the Pwned Passwords API, or somewhere in the region of 329,571 times longer to query the API than to query the Bloom Filter! Even if we assumed an average of 2ms for a locally hosted Redis server, it's still 20x faster than querying the API directly, and we have to remember that this is an exceptionally fast API that you're unlikely to see matched in terms of performance elsewhere.

That's not all though, you then have to do some additional work with the response from the API. The Pwned Passwords API has some awesome privacy protections in the form of k-Anonymity so the response for that first request looks like this.

00AF95075E990658DE18AC06B46E9E581C3:5

0132EBFF6E9FA7EF05773C06D42F532DE33:1

014BCEDC1B602F662C6F1B96AED26F3FD13:7

01B8AD9DCECC9EBC3EAFFC2DC028212D81B:1

021537F2F889FC07EC4735F779F4BA0CBC9:1

022FD34C85A8C887848E1FBE1AF5F86B853:5

0283CDDAB5CD347E21F9E7EEE8F5F296A77:2

03090905DB882F74CA4A5754BEF7A9206D4:1

031A0020BD07CFDA9855EE3FE44D429DE57:2

034A02FF9C6A708125E38A101847411C2B5:2

0411ED08C1BB17FE4B3AC088EF1D759741C:2

0455D5E316BF8F56D7DA18FC42F089C9DE5:1

04582500B4107DE31567375207A557CE761:1

0471D10C93E5804A2DBEDCB1451CD8A5B4E:4

... 560 lines later ...

FBD6D76BB5D2041542D7D2E3FAC5BB05593:24695

FC3D5E5EE6E427971A2A17728BE3CF7BE90:1

FC446EB88938834178CB9322C1EE273C2A7:2

FD9BE57E9AA7DF04366694A3CA480256EC2:9

FDF342FCD8C3611DAE4D76E8A992A3E4169:13

FE074D46ADA9E63C912AE3AE2BE6AA81D71:2

FE36C085E615BB5E9F30E5EA9322C498AD4:3

FE391596FAB81655455E437319127E7EFB9:2

FE81480327C992FE62065A827429DD1318B:1

FEB3321CE615A2B986544888D42C17C417F:1

FF0C145449A6D428BDFC46961A5ED09ADB0:3

FF7B6CD1334AF86C15087D4C7D1EE0858C3:2Yep, that's 12.1kB of SHA1 hash suffixes! You would then have to search through these to see if the suffix you were interested in was present in the response and thus whether your candidate password was present in the response or not. This would undoubtedly add an insignificant amount of time on top of the HTTP request latencies above and the bandwidth requirements per query seem to be holding at around 12.26kB per query, which isn't huge, but a factor to consider.

False Positives

The question of false positives always has to be answered with Bloom Filters and this particular scenario is no different. When querying a candidate password against the filter, we can only get one of two answer back.

Is "password1234" in the data set?

Yes, with a 1/99,857,412 chance of false positive, or,

No.

If you query against the filter and the answer is "No", then the answer really is "No", the candidate password is not in the Pwned Passwords data set. This means you can safely allow the user to use that password and not think any more of it. If you query against the filter and the answer is "Yes", then you have a decision to make. First, you can accept the 1/99,857,412 chance of a false positive and block the user from using the password. This means that for every ~100,000,000 users that sign up to your service, you might annoy one of them. Second, you can query against the Pwned Passwords API to see if the password really was in the data set.

For me, I think the probability of a false positive is so low that this would be acceptable and I wouldn't then hit the API. If you were planning to hit the API any time you got a "Yes" answer from the query then perhaps significantly increasing the probability p when generating the filter would be a good idea in order to significantly reduce the space complexity of the filter and the time complexity of a query operation.

Benefits of a Bloom Filter

I want to clarify (before everyone points it out to me!) that yes it is simpler to just hit the Pwned Passwords API and that is indeed the way we're planning to go at Report URI. The API is super-low overhead and a Bloom Filter is probably best used somewhere where there is a much more frequent and costly overhead than a single use at account registration or password change. The API will also give you access to live data without having to regenerate the Bloom Filter when the data changes so I'd only recommend this as an alternative to hosting the entire Pwned Passwords data locally if that's what you're doing now ([but-why.gif]). This is intended to be a good demonstration of the kind of thing that's possible with Bloom Filters and I hope it's achieved that. Now that we've seen what a Bloom Filter can do, I'm interested in what uses might pop up for one in the future and if you have any ideas of where a Bloom Filter might be useful, drop by in the comments below!

Links

Here are the links for the code and files used above: