I recently made some changes to my home network to increase the speed and reliability of my connection, but I did hit a little bump in the road along the way.

My Ubiquiti Home Network

If you're not familiar with my Ubiquiti Home Network, you should check that out first, along with my other posts on using the awesome Pi-Hole with DNS over HTTPS (DoH), forcing devices to use your own DNS server and, more directly to the point of this blog post, how I seriously boosted the speed and reliability of my home Internet connection.

In that post I detail how my UniFi Security Gateway (USG) is using both my usual home internet connection, delivered through the phone line, and my 5G hotspot that has an unlimited data plan. This means I have more bandwidth available and one can cover for the other in the event of a failure. More speed and more reliability, until something went wrong.

eth2 is down!

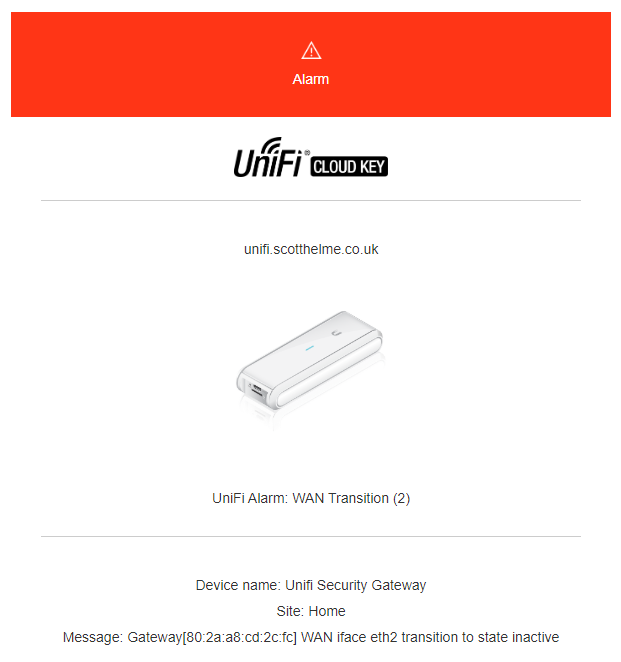

I got the following notification via email, because the Ubiquiti kit lets you know when there's a problem, and it was telling me that the 5G backup connection which runs on eth2 was down.

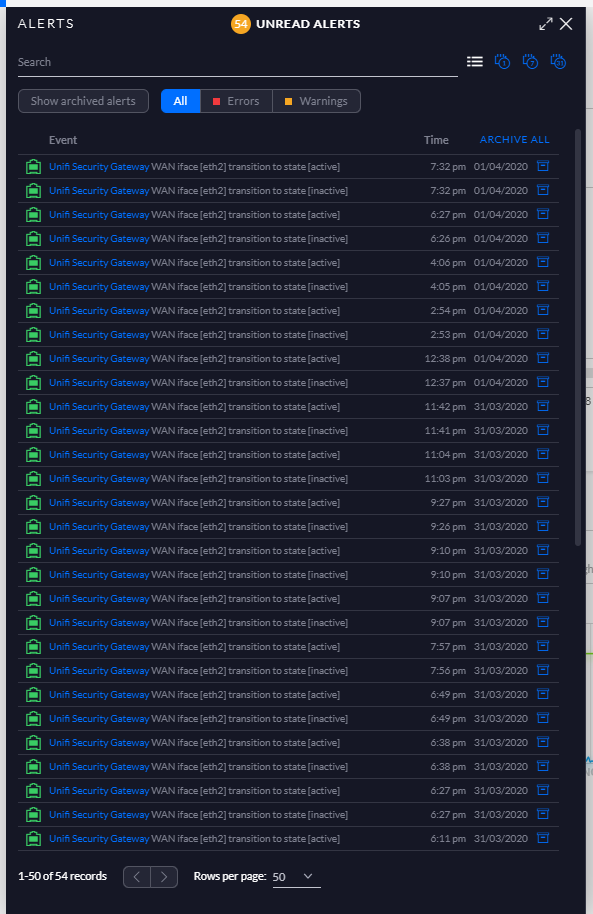

This seemed odd, but I thought hey, it's odd times right now, maybe our local mast or provider had an issue. Sure enough, 2 minutes later I got a notification to say it was back up. But then it happened again. And again. And again! The eth2 interface was constantly going up and down, and I started to think it wasn't the provider after all, because my phone, which uses the same mast, wasn't having any issues. I had a heap of these alerts showing in the controller!

Unifi Security Gateway WAN iface [eth2] transition to state [active]

Unifi Security Gateway WAN iface [eth2] transition to state [inactive]

Failover monitoring

Of course, the USG has to monitor each connection to see if it's alive so it can make decisions about which connection to use and how much data to push through each one. It seemed more likely to me that there was an issue with the monitoring that was making it look like the connection was dropping and coming back.

If you SSH into the USG you can use 2 commands to see what's happening:

Scott@UnifiSecurityGateway:~$ show load-balance watchdog

Group wan_failover

eth2

status: Running

failover-only mode

pings: 19986

fails: 1491

run fails: 0/3

route drops: 1

ping gateway: ping.ubnt.com - REACHABLE

last route drop : Mon Apr 6 08:41:51 2020

last route recover: Mon Apr 6 08:42:36 2020

pppoe0

status: Running

pings: 20243

fails: 4

run fails: 0/3

route drops: 1

ping gateway: ping.ubnt.com - REACHABLE

last route drop : Mon Apr 6 08:41:55 2020

last route recover: Mon Apr 6 08:42:38 2020

Scott@UnifiSecurityGateway:~$ show load-balance status

Group wan_failover

interface : eth2

carrier : up

status : failover

gateway : 192.168.8.1

route table : 201

weight : 0%

flows

WAN Out : 4

WAN In : 0

Local Out : 0

interface : pppoe0

carrier : up

status : active

gateway : pppoe0

route table : 202

weight : 100%

flows

WAN Out : 315000

WAN In : 857

Local Out : 2105

These commands will tell you the current status of the connections and how the USG is monitoring their health. You can see that it tests each connection by sending a ping to ping.ubnt.com and that 3 consecutive failures are required before it will consider the connection down (that's the "run fails: 0/3" line). The counters also show that eth2 had failed 1491 of its 19986 pings, while pppoe0 had failed only 4 of 20243. At the time of the incident it seems like I was having some ping issues on the eth2 interface, and it only took 3 bad pings to mark the connection as down. I wanted to tweak this a little to reduce the sensitivity and stop the connection being set to inactive so easily.
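To put those counters in perspective, here's a minimal Python sketch that turns the pings and fails figures from my watchdog output into a failure rate. The numbers are copied from the output above; the fail_rate helper is just a name I made up for illustration.

```python
def fail_rate(pings: int, fails: int) -> float:
    """Return the share of failed pings as a percentage."""
    if pings == 0:
        return 0.0
    return 100.0 * fails / pings


# Counters copied from the "show load-balance watchdog" output above.
counters = {
    "eth2": {"pings": 19986, "fails": 1491},
    "pppoe0": {"pings": 20243, "fails": 4},
}

for name, c in counters.items():
    rate = fail_rate(c["pings"], c["fails"])
    print(f"{name}: {rate:.2f}% of pings failed")
```

That works out to roughly 7.5% of pings failing on eth2 against about 0.02% on pppoe0, which fits the idea that the 5G link was dropping enough pings to trip a 3-failure threshold every so often.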

Changing the failover criteria

It's easy to change these settings directly on the USG via SSH using the configure command.

Scott@UnifiSecurityGateway:~$ configure

[edit]

Once you've run that command you can now edit the configuration and set/change options as needed.

Scott@UnifiSecurityGateway# set load-balance group wan_failover interface pppoe0 route-test type ping target 8.8.8.8

[edit]

Scott@UnifiSecurityGateway# set load-balance group wan_failover interface eth2 route-test type ping target 8.8.8.8

[edit]

Those 2 commands will change the target for the ping from the current ping.ubnt.com to 8.8.8.8 instead. You can change the target to any (reliable) ping target that you like and also don't forget to update the interface names to match your own. If you want you can also change the interval between the ping tests.

Scott@UnifiSecurityGateway# set load-balance group wan_failover interface pppoe0 route-test interval 10

[edit]

Scott@UnifiSecurityGateway# set load-balance group wan_failover interface eth2 route-test interval 10

[edit]

You can also control how many failed requests are required to consider the link down and how many successes are required to consider the link back up.

Scott@UnifiSecurityGateway# set load-balance group wan_failover interface pppoe0 route-test count failure 6

[edit]

Scott@UnifiSecurityGateway# set load-balance group wan_failover interface pppoe0 route-test count success 3

[edit]

Scott@UnifiSecurityGateway# set load-balance group wan_failover interface eth2 route-test count failure 6

[edit]

Scott@UnifiSecurityGateway# set load-balance group wan_failover interface eth2 route-test count success 3

[edit]

To finish up making your changes you need to commit them, save them and then exit the configure tool.

Scott@UnifiSecurityGateway# commit

[edit]

Scott@UnifiSecurityGateway# save

Saving configuration to '/config/config.boot'...

Done

[edit]

Scott@UnifiSecurityGateway# exit

exit

With that saved you can now check the watchdog and see that it's using the newly configured parameters.

Scott@UnifiSecurityGateway:~$ show load-balance watchdog

Group wan_failover

eth2

status: Running

failover-only mode

pings: 8

fails: 0

run fails: 0/6

route drops: 1

ping gateway: 8.8.8.8 - REACHABLE

last route drop : Mon Apr 6 08:41:51 2020

last route recover: Mon Apr 6 08:42:36 2020

pppoe0

status: Running

pings: 0

fails: 0

run fails: 0/6

route drops: 1

ping gateway: 8.8.8.8 - REACHABLE

last route drop : Mon Apr 6 08:41:55 2020

last route recover: Mon Apr 6 08:42:38 2020

We can see that it now requires 6 failures to consider the route down and that it is pinging 8.8.8.8 instead. The changes we made have now been applied, but they will not survive a reboot of the USG or a re-provision from the controller.
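To show why raising the failure count helps, here's a rough Python model of a watchdog that counts consecutive failures and successes. I don't know exactly how the USG implements this internally, so treat it as a sketch of the idea and not the real thing. The simulate function and the ping sequence are made up for illustration, and I'm assuming the route-test interval is in seconds.

```python
def simulate(pings, fail_threshold, success_threshold):
    """Replay ping results (True = reply, False = lost) through a simple
    consecutive-count watchdog and return the list of state changes."""
    state = "up"
    run_fails = 0
    run_successes = 0
    transitions = []
    for i, ok in enumerate(pings):
        if ok:
            run_successes += 1
            run_fails = 0
        else:
            run_fails += 1
            run_successes = 0
        if state == "up" and run_fails >= fail_threshold:
            state = "down"
            transitions.append((i, "down"))
        elif state == "down" and run_successes >= success_threshold:
            state = "up"
            transitions.append((i, "up"))
    return transitions


# A made-up sequence: a burst of 4 lost pings in otherwise healthy traffic.
pings = [True] * 5 + [False] * 4 + [True] * 5

print(simulate(pings, fail_threshold=3, success_threshold=3))
print(simulate(pings, fail_threshold=6, success_threshold=3))

# Rough time to mark a link down: failures needed times the test interval.
interval = 10  # assumed seconds, matching "route-test interval 10" above
for threshold in (3, 6):
    print(f"{threshold} failures: about {threshold * interval}s to go inactive")
```

With a threshold of 3, the burst of lost pings flips the link down and back up again. With a threshold of 6, the same burst is ignored. The trade-off is that a genuinely dead link takes longer to be noticed.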

Making the changes persistent

To make the changes stick even after the device is rebooted, we need to SSH to the CloudKey (or your controller) and make changes to the config.gateway.json file. The JSON we need has to be exported from the running USG config, so just before we hop over to the CloudKey, let's grab the config from the USG.

Scott@UnifiSecurityGateway:~$ mca-ctrl -t dump-cfg

{

"firewall": {

"all-ping": "enable",

"broadcast-ping": "disable",

"group": {

"address-group": {

"authorized_guests": {

"description": "authorized guests MAC addresses"

},

...... a *lot* more JSON

That's probably going to dump way too much config to read on screen. The output is JSON, so we can redirect it to a file that we can then open and take snippets from.

Scott@UnifiSecurityGateway:~$ mca-ctrl -t dump-cfg > temp-config.json

We then need to open the file and look for the new config to take with us to the CloudKey.

Scott@UnifiSecurityGateway:~$ vi temp-config.json

{

"firewall": {

"all-ping": "enable",

"broadcast-ping": "disable",

"group": {

"address-group": {

"authorized_guests": {

"description": "authorized guests MAC addresses"

},

...... a *lot* more JSON

Scroll through the file until you find the load-balance section, which is about 2/3 of the way through. Here is mine.

"load-balance": {

"group": {

"wan_failover": {

"flush-on-active": "disable",

"interface": {

"eth2": {

"failover-only": "''",

"route-test": {

"count": {

"failure": "6",

"success": "3"

},

"initial-delay": "20",

"interval": "15",

"type": {

"ping": {

"target": "8.8.8.8"

}

}

}

},

"pppoe0": {

"route-test": {

"count": {

"failure": "6",

"success": "3"

},

"initial-delay": "20",

"interval": "15",

"type": {

"ping": {

"target": "8.8.8.8"

}

}

}

}

},

"lb-local": "enable",

"lb-local-metric-change": "enable",

"sticky": {

"dest-addr": "enable",

"dest-port": "enable",

"source-addr": "enable"

},

"transition-script": "/config/scripts/wan-event-report.sh"

}

}

},

Now, we don't want to take the entire section, we only want to take the parts relevant to the changes we just made. So, trim away any of the JSON that we don't need and, to finish up, wrap the entire thing in another set of curly brackets.

{

"load-balance": {

"group": {

"wan_failover": {

"interface": {

"eth2": {

"route-test": {

"count": {

"failure": "6",

"success": "3"

},

"interval": "15",

"type": {

"ping": {

"target": "8.8.8.8"

}

}

}

},

"pppoe0": {

"route-test": {

"count": {

"failure": "6",

"success": "3"

},

"interval": "15",

"type": {

"ping": {

"target": "8.8.8.8"

}

}

}

}

}

}

}

}

}

This is now the finished JSON we need to take to the CloudKey and put in the config.gateway.json file. On the CloudKey, open the file in an editor, creating it if it doesn't exist.
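If you'd rather not trim the JSON by hand, the same trimming step can be sketched in a few lines of Python. This is only an illustration: trim_load_balance is a helper I made up, and the sample dictionary is a cut-down stand-in for the real dump that uses values from my config above. For the real thing you would load temp-config.json with json.load instead.

```python
import json

# The route-test keys we changed on the USG; everything else is dropped.
KEEP = ("count", "interval", "type")


def trim_load_balance(full_config, group="wan_failover"):
    """Return a minimal config holding only the route-test overrides."""
    interfaces = full_config["load-balance"]["group"][group]["interface"]
    trimmed = {}
    for name, settings in interfaces.items():
        route_test = settings.get("route-test", {})
        trimmed[name] = {
            "route-test": {k: route_test[k] for k in KEEP if k in route_test}
        }
    return {"load-balance": {"group": {group: {"interface": trimmed}}}}


# A cut-down stand-in for the full dump from the USG.
sample = {
    "firewall": {"all-ping": "enable"},
    "load-balance": {
        "group": {
            "wan_failover": {
                "flush-on-active": "disable",
                "interface": {
                    "eth2": {
                        "failover-only": "''",
                        "route-test": {
                            "count": {"failure": "6", "success": "3"},
                            "initial-delay": "20",
                            "interval": "15",
                            "type": {"ping": {"target": "8.8.8.8"}},
                        },
                    },
                },
                "lb-local": "enable",
            }
        }
    },
}

print(json.dumps(trim_load_balance(sample), indent=2))
```

The result is already wrapped in the outer set of curly brackets, so it has the same shape as the trimmed JSON above.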

Scott@UniFi-CloudKey:~# vi /usr/lib/unifi/data/sites/home/config.gateway.json

Note that home is the name of my site, so you will need to update that to your site name in the command. If the file already exists, you need to insert the new JSON alongside the existing JSON. If the file doesn't exist, simply set its content to the new JSON we created above.

{

"service" : {

* existing JSON stuff already here *

},

"load-balance": {

"group": {

"wan_failover": {

"interface": {

"eth2": {

"route-test": {

"count": {

"failure": "6",

"success": "3"

},

"interval": "15",

"type": {

"ping": {

"target": "8.8.8.8"

}

}

}

},

"pppoe0": {

"route-test": {

"count": {

"failure": "6",

"success": "3"

},

"interval": "15",

"type": {

"ping": {

"target": "8.8.8.8"

}

}

}

}

}

}

}

}

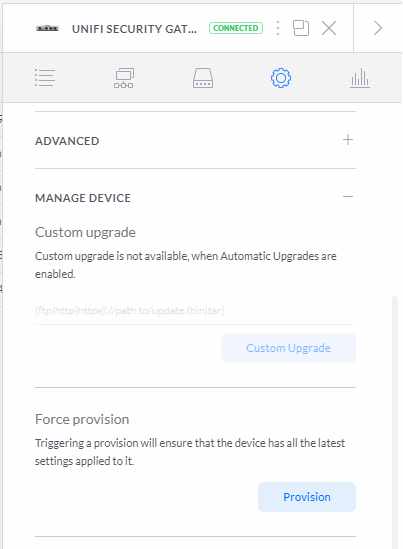

}You can see how I had to insert the new load-balance section alongside some existing JSON as I made changes here in a previous blog post so just do something similar if you need to. It's a really good idea to use a JSON validation tool to check your JSON is well formatted before you save it here and use it. Once you've saved that file all you need to now is provision the USG through the web interface.
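If you don't have a JSON validator to hand, Python's built-in json module will do the job, and it can also merge the new section into whatever is already in the file. The sketch below is a minimal example, not an official Ubiquiti tool. deep_merge is a helper I made up, and the "service" content is a hypothetical placeholder for whatever your existing file holds.

```python
import json


def deep_merge(base, extra):
    """Recursively merge extra into a copy of base; values in extra win."""
    merged = dict(base)
    for key, value in extra.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


# Hypothetical existing file content and a shortened version of the new JSON.
existing_text = '{"service": {"placeholder": "existing settings"}}'
new_text = """
{"load-balance": {"group": {"wan_failover": {"interface": {
    "eth2": {"route-test": {"count": {"failure": "6", "success": "3"}}}
}}}}}
"""

# json.loads raises json.JSONDecodeError if either text is not valid JSON,
# so getting past these two lines is the validation step.
existing = json.loads(existing_text)
new = json.loads(new_text)

merged = deep_merge(existing, new)
print(json.dumps(merged, indent=2))
```

For a quick check of a file on disk, running python3 -m json.tool config.gateway.json will either pretty-print the file or report where the syntax error is.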

All done

Since I made these changes I've not had a single hiccup on the backup connection, so I can only guess that the latency on the 5G connection was a little too high and was triggering the failover detection. Either way, if you ever come across the same problem of an interface going up and down, and you can't figure out why, perhaps this will help.