Regular readers will have seen me talk about OCSP many times before, in some cases going back quite a number of years. That's why it came as quite a surprise to see OCSP thrust into the limelight again in recent days because of an issue with the latest release of macOS, Big Sur.

What is OCSP?

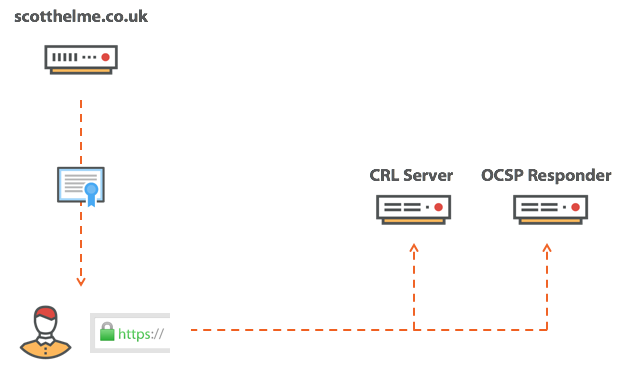

OCSP is the Online Certificate Status Protocol and it allows a client to check if a certificate has been marked as 'revoked' which means the client shouldn't trust it under any circumstances.

I wrote a blog post in July 2017 called Revocation is Broken and the idea was nothing new at that time either. The predecessor to OCSP, the CRL (Certificate Revocation List), had long been abandoned and the demise of OCSP was upon us. OCSP suffered from serious privacy, performance and availability issues that made it a real liability. OCSP Stapling did seek to address the privacy and performance concerns but ultimately couldn't save the failing protocol.

What are the recent concerns?

The recent concerns are actually just the same concerns we've always had but they were thrust back to the forefront of our minds again with the release of macOS Big Sur. Not that the release of the new OS version caused a direct issue, but it seems the load placed on Apple for the downloads caused issues with their OCSP server too. Clients call in to check the status of a certificate, that check hangs due to server load and users launching apps experience delays. This is the main availability concern of OCSP but it seems that this recent event brought the privacy concern under a lot of scrutiny too.

There were a few good blog posts I've seen doing the rounds, including Your Computer Isn't Yours, Does Apple really log every app you run? A technical look and Can't Open Apps on macOS: An OCSP Disaster Waiting To Happen, that all look at and highlight the privacy issue with OCSP. Sending an OCSP request indicates to the OCSP server that you need to know the status of a particular certificate. In the case of a TLS certificate that would likely identify an individual site, like scotthelme.co.uk, but in the case of a code signing certificate it would likely identify the developer. It's slightly less specific but if the developer is Signal Messenger, PornHub or Mozilla (likely Firefox or Thunderbird), you can still learn a lot about the client asking these questions. You know what apps they're running, when they're running them and where/who the user is (source IP). The other concern is that not only can Apple collect all of this information, but that OCSP is a plaintext protocol so anyone on the network, like your ISP, can also gather this data and profile you.

What is the solution?

Well, that really depends on who you are, what your goal is and if you're willing to make any sacrifices.

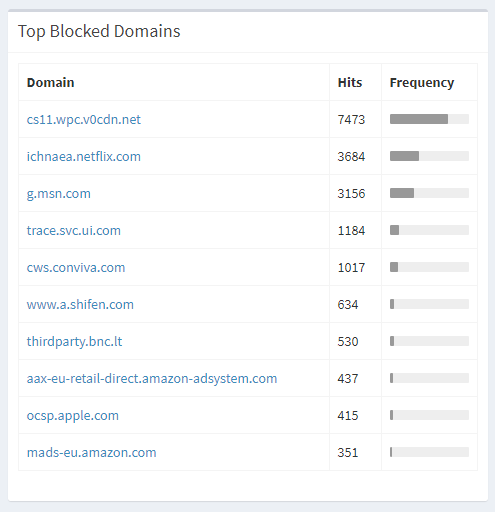

The first thing a user can do is block traffic going to ocsp.apple.com so that these privacy leaking and performance harming requests are never made. But, you might think 'well hang on, what happens if the client can't make these requests?' This is something I covered in Revocation is Broken: OCSP is (almost always) a soft-fail check, so if the OCSP request fails, the client doesn't care and continues anyway! I run a PiHole on my network to block ads and other unwanted traffic at the DNS level, and earlier this year I blocked all OCSP and CRL traffic too. The clients will silently fail and continue on regardless. The ocsp.apple.com domain is even in the Top 10 Blocked Domains on my network, yet everything works fine.

At this point many people may be alarmed and we hear things like how dangerous it is to disable a security feature and how much of a bad idea this is. But is it? At this point I will draw a slight distinction between certificates used for TLS and for Code Signing. With a TLS certificate we're protecting against an adversary on the network and an adversary on the network can trivially block traffic like OCSP requests, rendering the mechanism useless. With Code Signing certificates the threat model is slightly different, but could still reasonably include someone attacking you on the network. Either way it's unreliable and can quite easily let you down and cause problems, as we've seen. If the request fails completely then the client will simply continue on without a single care in the world.
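To make the soft-fail behaviour concrete, here's a minimal Python sketch. It is not how macOS or any browser actually implements the check: `Status`, `is_trusted` and `blocked` are names made up for illustration, and a lookup that can't complete is simply modelled as an `OSError`.

```python
from enum import Enum
from typing import Callable


class Status(Enum):
    GOOD = "good"
    REVOKED = "revoked"


def is_trusted(check_status: Callable[[], Status], hard_fail: bool = False) -> bool:
    """Decide whether to trust a certificate given a revocation lookup.

    check_status is a hypothetical callable that performs the online check
    and raises OSError (timeout, DNS block, ...) when it cannot complete.
    """
    try:
        status = check_status()
    except OSError:
        # Soft-fail: no answer is treated exactly like a good answer.
        # Hard-fail: no answer means no trust.
        return not hard_fail
    return status is Status.GOOD


def blocked() -> Status:
    # Simulates a responder that is unreachable (blocked or overloaded).
    raise TimeoutError("OCSP responder did not answer")


print(is_trusted(lambda: Status.REVOKED))  # a delivered 'revoked' answer is honoured
print(is_trusted(blocked))                 # soft-fail: the blocked check is ignored
print(is_trusted(blocked, hard_fail=True)) # hard-fail: the blocked check denies trust
```

The only case where the check changes the outcome is when the 'revoked' answer actually arrives, which is exactly the case an attacker on the network can prevent.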

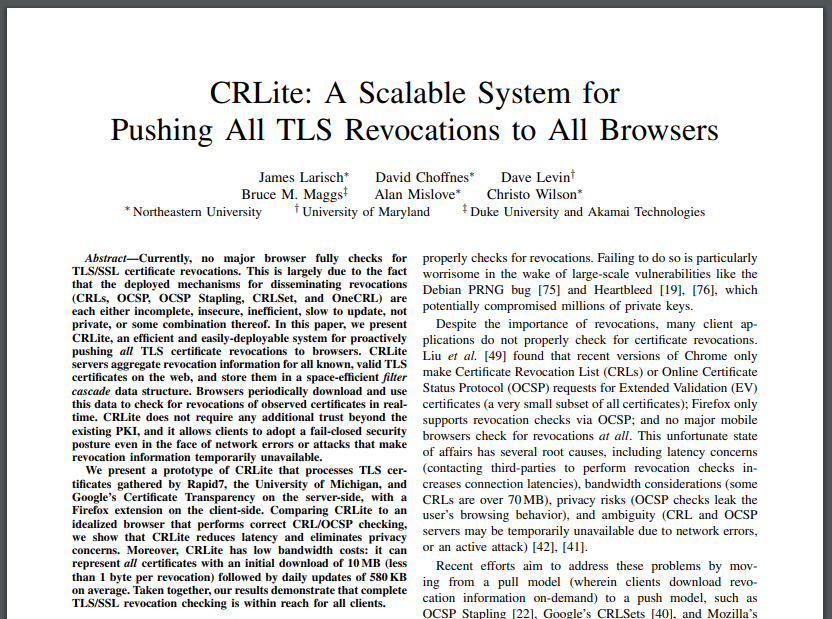

The next option, and the type of option I always prefer, is one that doesn't involve the user in any way. Users shouldn't have to do stuff. Users shouldn't have to know stuff. If you have an idea and at any point in the explanation of your idea you say "the user should", I'm going to shoot you down right there. Users are unreliable, and that's not a criticism of users, they should be carefree and we should not rely on them. Technology has failed when we introduce the user into the system as a component. That's why something like CRLite could potentially be a solution to the problem of revocation in general. I've written a detailed blog on CRLite: Finally a fix for broken revocation? but to summarise it here, it's a space efficient way of including details about all revoked certificates into the client. The client can then query this local 'database' without a privacy/performance compromising online check and hard-fail on the result.
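For a feel of how a compact local 'database' can give exact answers, here's a toy Python sketch of a Bloom filter cascade, which is the kind of structure CRLite is built on. Treat it as an illustration under assumptions rather than the real thing: `Bloom`, `build_cascade` and `is_revoked` are made-up names, the sizing (8 bits per item, 3 hashes) is arbitrary, and the answers are only exact for certificates that were in the known set when the cascade was built.

```python
import hashlib


class Bloom:
    """Tiny Bloom filter: may give false positives, never false negatives."""

    def __init__(self, items, salt, bits_per_item=8, hashes=3):
        self.size = max(8, bits_per_item * len(items))
        self.salt = salt
        self.hashes = hashes
        self.bits = 0  # a Python int used as a bit array
        for item in items:
            for pos in self._positions(item):
                self.bits |= 1 << pos

    def _positions(self, item):
        for i in range(self.hashes):
            digest = hashlib.sha256(f"{self.salt}:{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def __contains__(self, item):
        return all((self.bits >> pos) & 1 for pos in self._positions(item))


def build_cascade(revoked, valid):
    """Level 0 holds the revoked set; each later level holds the false
    positives of the level before it, alternating between the two sets."""
    levels, include, exclude = [], set(revoked), set(valid)
    while include:
        level = Bloom(include, salt=len(levels))
        levels.append(level)
        false_positives = {x for x in exclude if x in level}
        include, exclude = false_positives, include
    return levels


def is_revoked(levels, serial):
    """Exact for any serial that was in revoked or valid at build time."""
    for depth, level in enumerate(levels):
        if serial not in level:
            # Missing from an even level means valid, from an odd level revoked.
            return depth % 2 == 1
    return len(levels) % 2 == 1


# Hypothetical serial numbers standing in for real certificates.
revoked = {f"serial-{i}" for i in range(0, 200, 7)}
valid = {f"serial-{i}" for i in range(200)} - revoked
levels = build_cascade(revoked, valid)
```

Because the lookup never leaves the device, there's nothing for the network to block or observe, which is what makes a hard-fail policy practical.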

Further ways of mitigating the risk here come down to developers too, like getting a certificate with a shorter validity period. This would help keep the lists of revoked certificates much smaller (because once they're expired we don't care and they drop off the list) and it reduces the window of risk that a user could face with bad software signed with a revoked certificate that they don't know about or can't query for an OCSP response.

In the end it's a tough problem and there's no 'real' solution yet, as I set out in my Revocation is Broken and CRLite blog posts, but we can certainly work to improve things in the interim. For now though, this is just another example in my growing list of reasons that OCSP really isn't fit for purpose and should be replaced by better mechanisms.

Update

**16th Nov 2020**

Shortly after publishing this article, Apple published a support article that addresses some of the concerns raised here.

Gatekeeper performs online checks to verify if an app contains known malware and whether the developer’s signing certificate is revoked. We have never combined data from these checks with information about Apple users or their devices. We do not use data from these checks to learn what individual users are launching or running on their devices.

In honesty, I never expected Apple would be doing anything questionable with the data but my concern was more around the pointlessness of the soft-fail OCSP request in the first place and the privacy leak over the network. They continue:

These security checks have never included the user’s Apple ID or the identity of their device. To further protect privacy, we have stopped logging IP addresses associated with Developer ID certificate checks, and we will ensure that any collected IP addresses are removed from logs.

It is true that the OCSP request does not contain data like an Apple ID or any other identity about the device itself but as I mentioned above, the source IP was a concern. Given that OCSP requests are simply HTTP GET requests, it seems logical that Apple did log the source IP in (probably) traditional web server logs which will now be scrubbed.
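To show how little stands between that GET request and anyone watching the wire, here's a small Python sketch. In the GET form of OCSP, the DER-encoded request is base64-encoded, URL-encoded and appended to the responder URL. The responder hostname and the request bytes below are placeholders rather than a real OCSP request, and the function names are made up for illustration.

```python
import base64
from urllib.parse import quote, unquote


def ocsp_get_url(responder_url: str, der_request: bytes) -> str:
    """Build the GET URL: responder URL + '/' + url-encoded base64 of the request."""
    b64 = base64.b64encode(der_request).decode("ascii")
    return responder_url.rstrip("/") + "/" + quote(b64, safe="")


def decode_ocsp_get_path(path: str) -> bytes:
    """What any observer (or web server log) can do: recover the request bytes."""
    return base64.b64decode(unquote(path.lstrip("/")))


# Placeholder bytes standing in for a DER-encoded OCSPRequest, which would
# carry the issuer hashes and the certificate's serial number.
placeholder_request = b"\xfb\xff\xfe"
url = ocsp_get_url("http://ocsp.example.com", placeholder_request)
recovered = decode_ocsp_get_path(url.rsplit("/", 1)[1])
```

Nothing here is encrypted; the encoding is purely for transport, so the certificate being asked about is readable by the server and by anyone on the path, alongside the source IP.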

In addition, over the next year we will introduce several changes to our security checks:

* A new encrypted protocol for Developer ID certificate revocation checks

* Strong protections against server failure

* A new preference for users to opt out of these security protections

With regard to the final section about new changes, it does raise a few questions.

Apple promise an "encrypted protocol" but my initial guess is that we're not talking about OCSP over HTTPS instead of HTTP. The problem with encrypting a revocation check is this: where do we check the revocation status of the certificate that the revocation infrastructure itself presents? You end up in a bit of a loop and ultimately give in to something like not revocation checking the certificate provided by the revocation infrastructure. For those wondering how OCSP responses (or CRLs for that matter) are resistant to tampering, they are signed by the issuer and the client validates the signature. We don't need integrity/authenticity provided by a secure transport like TLS, we get it from the signatures.

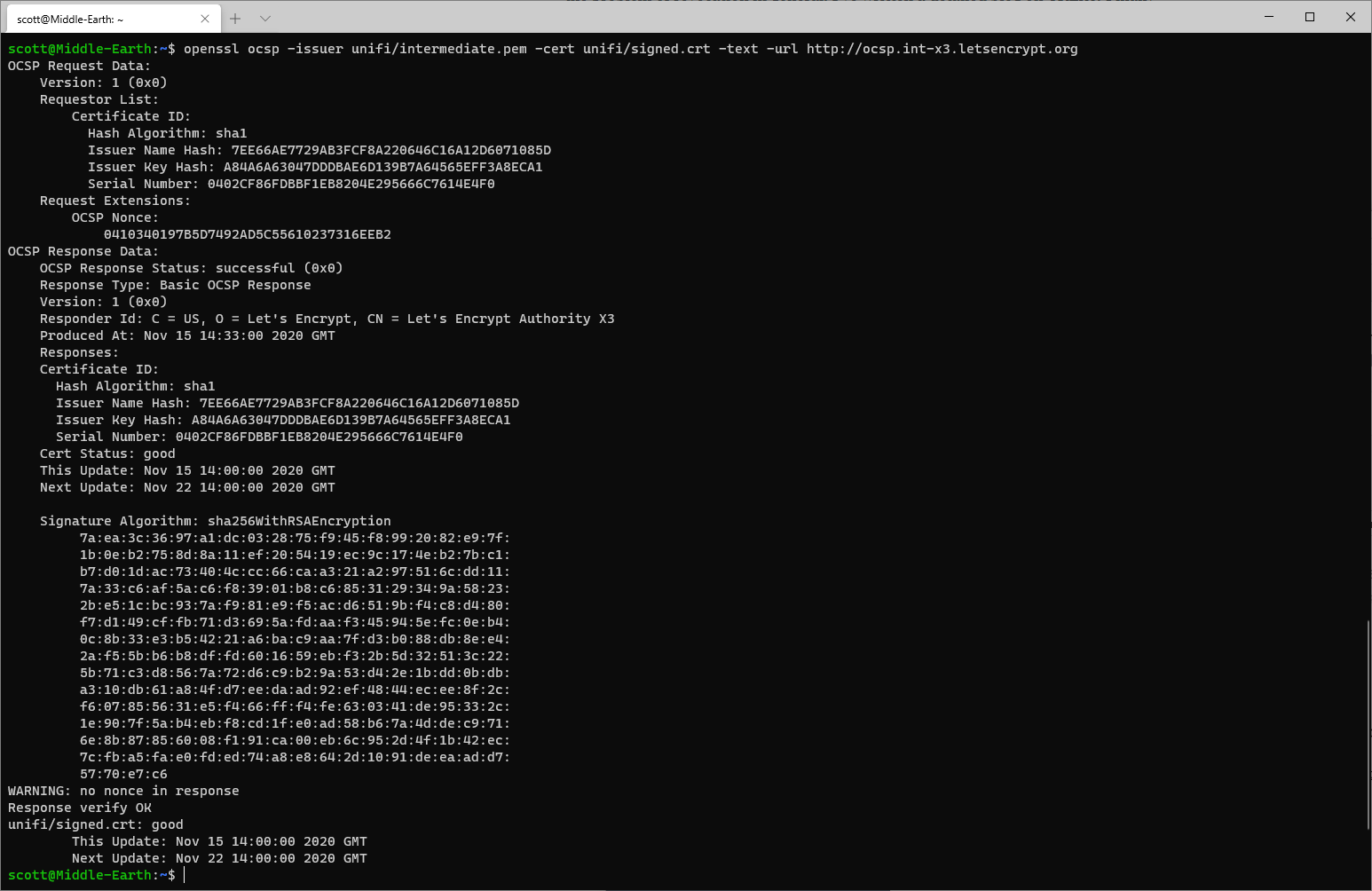

Having "Strong protections against server failure" basically just means adding more cloud. Behind an OCSP responder is a server doing something quite expensive in generating and signing the OCSP response, but OCSP responses can be aggressively cached for a good period of time. Take the header image of this blog, which handily proves this point: I did an OCSP request for one of my Let's Encrypt certificates.

That OCSP response was produced on Nov 15 2020 and is valid until Nov 22 2020, 7 whole days. That means you can stick an HTTP cache in front of the OCSP responder and serve this response from cache for days, meaning no real load hits the OCSP responder itself.
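As a rough sketch of how a cache could work out how long it may keep serving a response, here's the arithmetic in Python. The function name is made up, and because only the dates are given above, the times are assumed to be midnight UTC.

```python
from datetime import datetime, timedelta, timezone


def cache_max_age(next_update: datetime, now: datetime) -> int:
    """Seconds a cache may keep serving the response; 0 once it has expired."""
    return max(0, int((next_update - now).total_seconds()))


# Dates from the response above; the midnight UTC times are an assumption.
this_update = datetime(2020, 11, 15, tzinfo=timezone.utc)
next_update = datetime(2020, 11, 22, tzinfo=timezone.utc)

fresh = cache_max_age(next_update, now=this_update)
halfway = cache_max_age(next_update, now=this_update + timedelta(days=3, hours=12))
expired = cache_max_age(next_update, now=next_update + timedelta(hours=1))
```

A cache in front of the responder could advertise a value like this in a `Cache-Control: max-age` header, so the responder only needs to sign a fresh response when the old one is close to expiring.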

Finally, Apple will give users an option to opt out of OCSP checking, presumably just for the particular purpose of checking code signing certificates. As I mentioned above, a user can already 'opt out' by simply blocking these requests but having a more formal option is of course going to be the better course here.

It's great to see Apple acknowledge the concerns and respond to them so quickly, but I don't think it really changes any of the above. Without more details on the new, encrypted protocol they promise I can't comment on how that might be better or worse so until we hear more, we'll just have to wait!