I've been running report-uri.io for almost 3 years now and in that time I've been regularly shocked, surprised and thrilled at the success it's seen. It's time to take the next steps and what follows is a series of big announcements!

A big investment

There are so many things I'm excited to announce, but one of the biggest is definitely that Troy Hunt is joining the team! I'm sure readers of my blog already know who Troy is, but if you don't, here's a little bit of info. Troy is a Microsoft MVP (Most Valuable Professional) and Regional Director, Pluralsight author and security guru. He's also the creator of Have I Been Pwned? and can regularly be found blogging over at troyhunt.com. You can read his announcement about joining the team, and his reasons why, over on his blog.

One of the things I realised quite a few months ago was that report-uri.io was growing faster than I could handle: the number of users, report volumes, costs, everything. I needed to respond and get ahead of this before the site became a victim of its own success and simply couldn't be sustained on the current model. Troy was a perfect fit as an investor because he's heavily involved in the security space that the service operates in and has a genuine passion for the technology. His investment allowed me to begin building the next version of the site, which I've dubbed 'v2', and his involvement and expertise have been vital along the way. I'm also really looking forward to seeing how he can help the service grow and evolve in the future as a product evangelist.

Becoming sustainable

When I started Report URI it was a free service for anyone to use because I wanted to make the benefits it offered available to as many people as possible. Given it was built using the latest in cloud technologies, the cost of hosting was incredibly low, until things started growing, a lot. At first it was thousands of reports per month, then tens of thousands, then hundreds of thousands, millions and eventually billions (yes, with a 'B') of reports per month! Even with the service built as efficiently as possible, and with constant updates and upgrades to keep improving it, the cost was simply becoming too much. I introduced optional subscriptions so that those who could, and wanted to, support the service had an easy way to pay. That was always going to be difficult though, especially as the service was still free, and the only alternative was to require people to pay. The problem with asking people for money is that it changes the relationship in quite a significant way. People now have a reasonable expectation of a certain level of service and support, which was difficult to provide when the site wasn't a full-time job and was run by just me. This is why the site is now being re-launched with paid subscriptions and why I've taken investment to kick-start that process. Without a sustainable business model the service simply wouldn't be able to survive, but there will still be a free account tier so that everyone can use the service in a useful way without having to pay.

Branding and visual updates

With the new branding for Report URI came a new site, which you can now see at report-uri.com, ready for your viewing pleasure. The new site is simpler and has a much cleaner feel to it; we were long overdue a visual revamp.

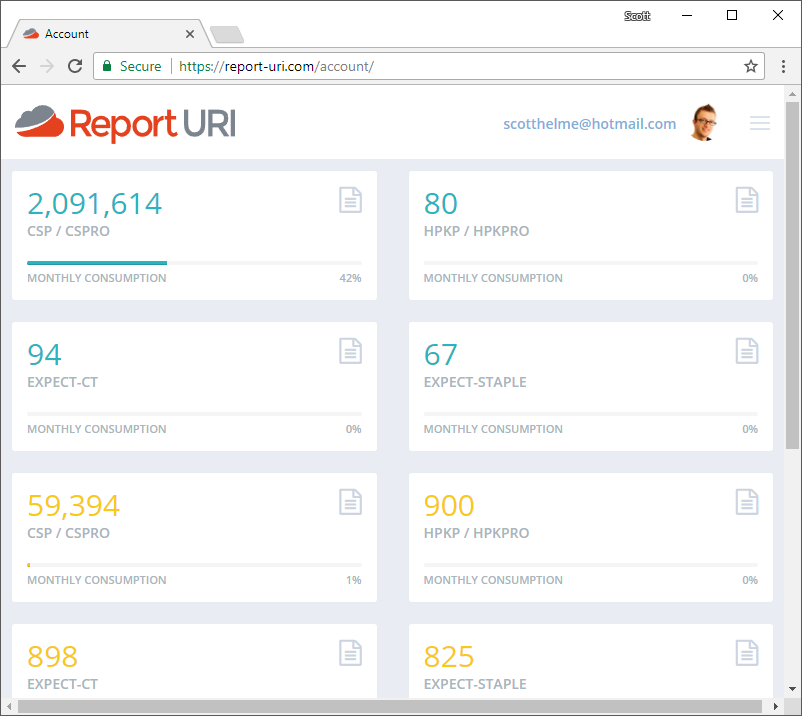

It's not just the public areas of the site that got some attention though; the account section has gone through a few small changes too! The previous account homepage wasn't very useful, but that's changing and we're now making great use of the space.

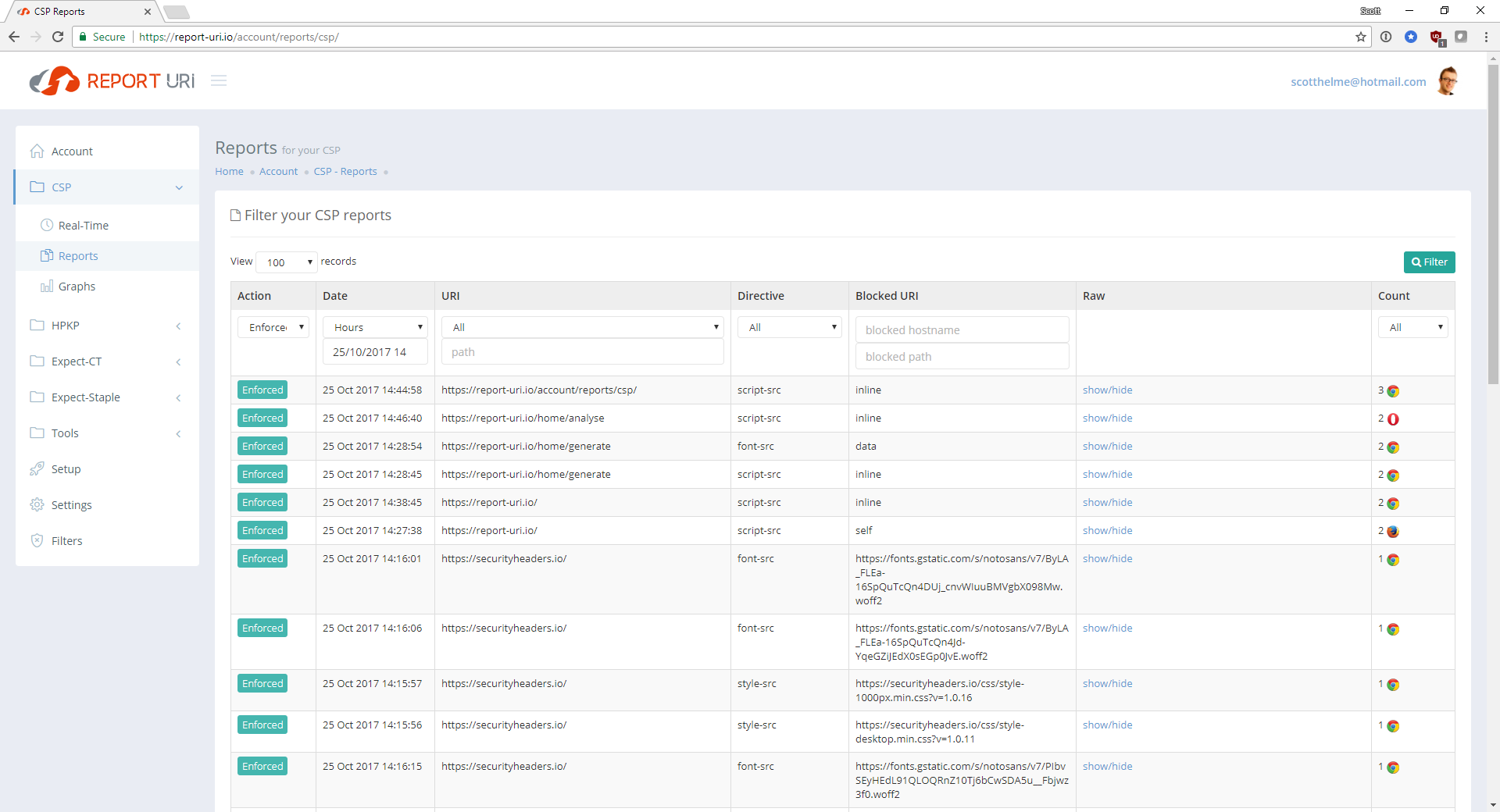

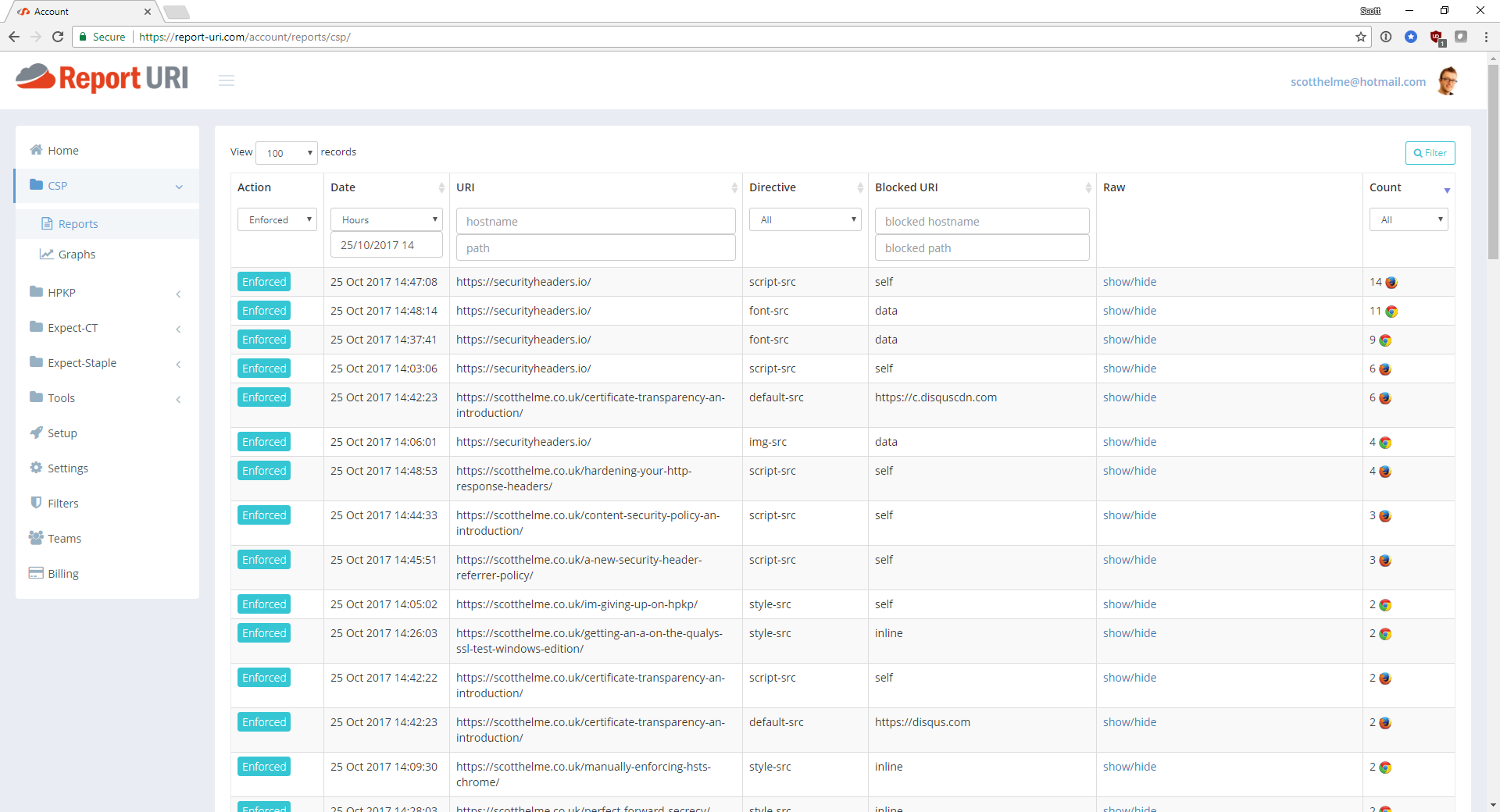

Along with a visual touch-up of the menu icons, the Reports pages have also had a small improvement. The hostname field now accepts free text, so you can search on any domain, which makes searching a lot easier if you have quite a few domains and subdomains.

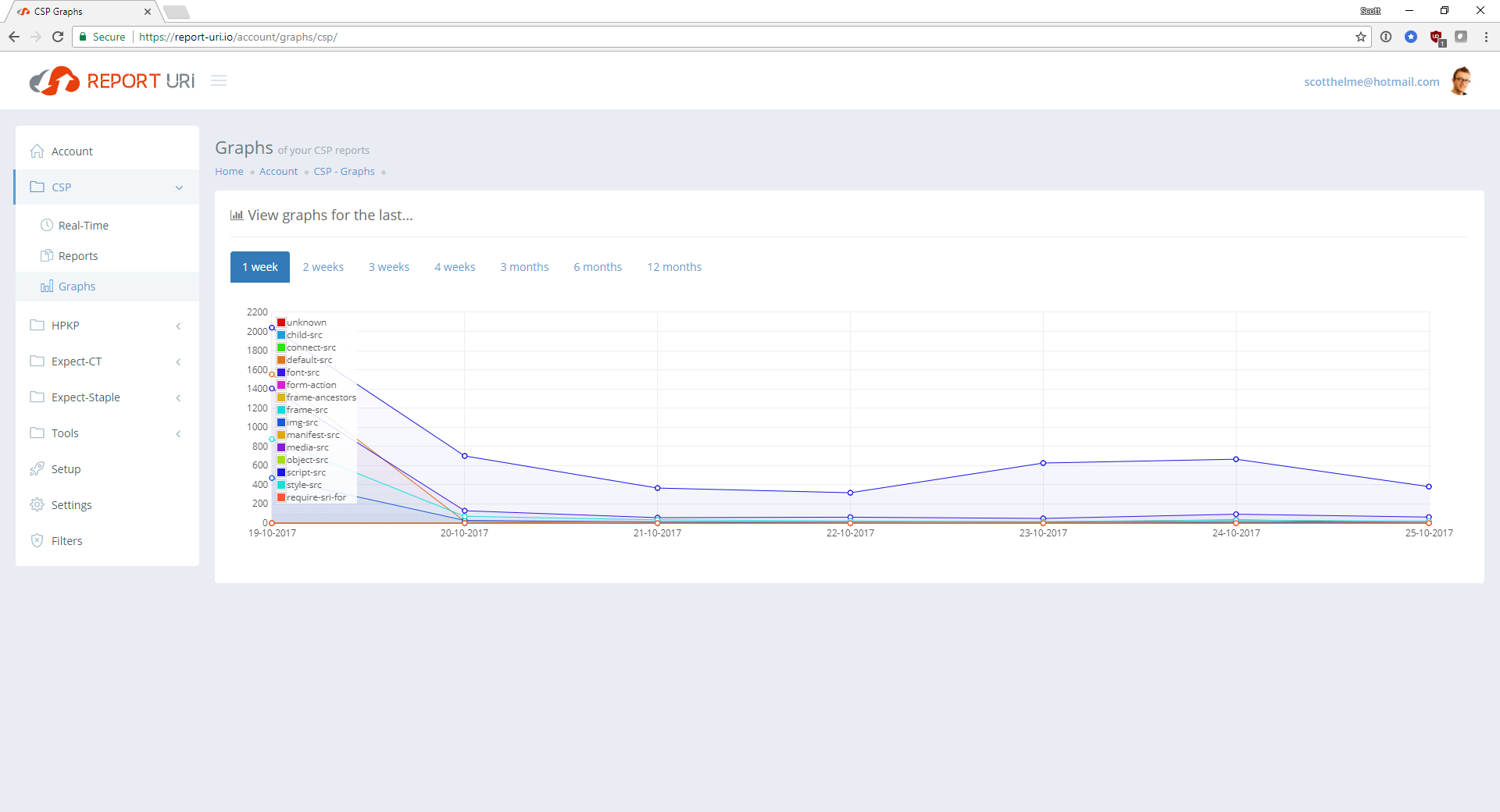

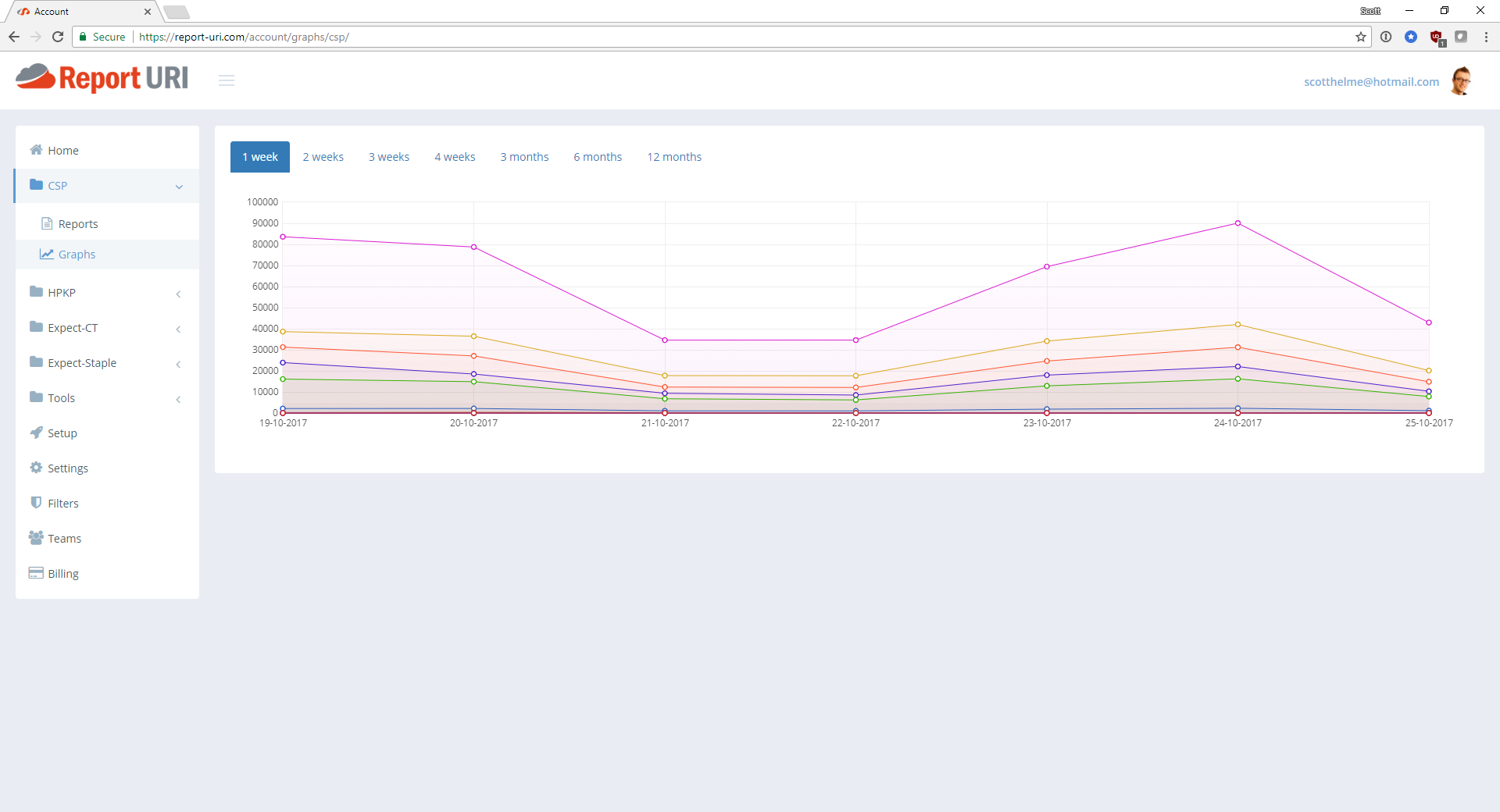

The Graphs page also got some changes, and the graph legend is now gone. For CSP reports, with all the newly supported features, the legend was simply getting too big, so now you can hover over the lines to get a tooltip showing the type of report and the number of reports.

New infrastructure

One of the other big changes in the v2 launch is behind the scenes and involves some pretty significant changes to the infrastructure that powers the service. I'm going to write more detailed articles on some of those changes and the reasons why I made them, but it all boils down to lessons learnt from dealing with billions of reports every single month. When the site first started it was fairly easy to process the few tens of thousands of reports I was receiving. In 2017, though, a busy month could see me processing a little over 5,000,000,000 reports, and that fundamentally changes, well, everything! The servers are still hosted with DigitalOcean and I'm still using Azure Table Storage for the back-end, but I've made a few tweaks and modifications along the way to drastically improve performance. Stay tuned for those deep-dive blogs in the coming days and weeks.
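For a sense of scale, here is the rough average rate that 5,000,000,000 reports a month works out to, assuming a 30-day month and, unrealistically, reports arriving evenly across it (real peaks will sit above this average):

$$
\frac{5 \times 10^{9}\ \text{reports}}{30 \times 86\,400\ \text{s}} = \frac{5 \times 10^{9}\ \text{reports}}{2\,592\,000\ \text{s}} \approx 1\,929\ \text{reports/s}
$$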

New servers

We're still using DigitalOcean for all of our server needs, and you can check them out with some free credit using my referral link. Previously we were using Ubuntu 14.04 and PHP 5.6, but the new site now uses Ubuntu 16.04 and PHP 7.0. These are both quite significant upgrades, and we took the opportunity to build brand new infrastructure for the .com domain and move over to it at launch so we had a clean break. This simplified things quite a lot, as it meant we could get everything fully working and tested under the .com domain and then simply redirect all traffic from the .io when we were ready. On top of all that, we also updated to the latest version of our framework, CodeIgniter!

New Redis cache

I've talked before about how memcached really helped with performance by allowing us to cache certain items at the edge, like users' filtering preferences, so the servers processing reports didn't have to reach back to Table Storage as often. That was a pretty big change, but every single inbound report still required a connection to, and an insert into, Table Storage to place the report into the user's table. With the new Redis cache a significant amount of the communication with Table Storage will be removed, but the impact on the service will be mostly invisible.
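The edge caching described above follows the common cache-aside pattern. Here is a minimal sketch of it, assuming a plain in-memory dict in place of memcached, a hypothetical `loader` function standing in for the Table Storage read, and an arbitrary 60-second expiry (the post doesn't say how long entries live):

```python
import time
from typing import Callable, Dict, Tuple


class PreferenceCache:
    """Cache-aside lookup: serve from the local cache while an entry is
    fresh and only call the slow loader on a miss or after expiry."""

    def __init__(self, loader: Callable[[str], dict], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._loader = loader    # hypothetical stand-in for a Table Storage read
        self._ttl = ttl_seconds  # assumed TTL; not specified in the post
        self._clock = clock
        self._entries: Dict[str, Tuple[float, dict]] = {}

    def get(self, user_id: str) -> dict:
        now = self._clock()
        cached = self._entries.get(user_id)
        if cached is not None and cached[0] > now:
            return cached[1]  # fresh hit: no round trip to storage
        prefs = self._loader(user_id)  # miss or expired: fetch and re-cache
        self._entries[user_id] = (now + self._ttl, prefs)
        return prefs
```

A real deployment would let memcached handle expiry itself and would also need to invalidate an entry when a user changes their filters; this sketch doesn't cover that.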

The biggest cost to try and avoid, both in terms of performance and finances, was that each inbound report required an insert into Table Storage. Each insert has an associated fee for the operation and bandwidth, plus the network overhead, which increased the performance burden on our servers. The new Redis cache now sits between our servers and Table Storage and brings with it a phenomenal improvement.

Every time a report hits our servers now, all that happens is that the report is placed into the Redis cache and an HTTP 201 is returned. Previously we had to do all of the processing, filtering, rate-limiting and other work whilst accepting the report from the browser, and then place it into Table Storage. That meant the browser could be waiting well over a second for us to respond with the appropriate status code. It wasn't really a problem, as the reports are sent asynchronously with low priority, but it wasn't as efficient as it could be. Now we simply accept the report from the browser and immediately say 'thanks and goodbye', with no more hanging around. The average time to get a response used to be 1,000ms+ and on the new infrastructure we're hoping to get that down to 500ms-600ms, a pretty significant reduction!
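The accept-and-queue step can be sketched like this. It's a minimal illustration, not the service's actual code: a `deque` stands in for the Redis list, and with the redis-py client the append would be roughly `r.rpush("reports", payload)`, where the key name `reports` is hypothetical. The point is what the handler does not do: no filtering, rate limiting or storage before the 201 goes back.

```python
from collections import deque
from typing import Deque, Tuple

# In-memory stand-in for the Redis list the ingestion servers push onto.
report_queue: Deque[Tuple[str, bytes]] = deque()


def ingest(user: str, body: bytes) -> int:
    """Accept a report and return the HTTP status straight away.

    Filtering, rate limiting and storage are deliberately absent: the raw
    report is only queued for the background consumers."""
    report_queue.append((user, body))
    return 201
```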

As the ingestion servers now only place reports into the Redis cache, we have a series of consumers that grab the contents of the cache every 10 seconds, process all of the reports and insert them into Table Storage. Coupled with the performance advantages mentioned above, this 10 second buffer period gives us the opportunity to massively cut down our traffic to Azure by stacking duplicate reports. Previously, if we got 100 of the same report it meant 100 inserts into Table Storage along with the associated performance and financial costs. With the Redis cache buffering reports for 10 seconds, those same 100 reports are now a single insert into Table Storage with a count of +100 instead, and the performance saving there is staggering. Given the nature of CSP reporting, and CSP reports being our largest source of inbound reports by a huge margin, we get a lot of duplicated reports. In fact, most of the reports we get are exactly the same. If you have a site with a fair volume of traffic and a problem on one page, every single visitor will report that same problem over and over again. If we now get thousands of reports for that same issue during the 10 second period it doesn't matter; they will all still result in a single insert into Table Storage, which is a massive win.
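Stacking duplicates within one buffer window amounts to grouping and counting. The sketch below assumes that "exactly the same" means identical JSON content once keys are sorted, and that reports for different users are never merged because they go to different tables; which fields the real consumers compare isn't stated in the post, and the function names are hypothetical.

```python
import json
from collections import Counter
from typing import Iterable, List, Tuple


def canonical(report: dict) -> str:
    # Sorted keys and fixed separators make identical reports serialise to
    # an identical string, whatever key order the browser used.
    return json.dumps(report, sort_keys=True, separators=(",", ":"))


def stack_duplicates(batch: Iterable[Tuple[str, dict]]) -> List[Tuple[str, dict, int]]:
    """Collapse one buffer window of (user, report) pairs into
    (user, report, count) rows: one storage write per distinct report."""
    counts = Counter()
    for user, report in batch:
        counts[(user, canonical(report))] += 1
    return [(user, json.loads(body), n) for (user, body), n in counts.items()]
```

Fed 100 identical reports for one user, this returns a single row with a count of 100, which is the 100-inserts-become-one saving described above.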

The only downside to this is that there is now a very small delay between a report being sent and it showing up in your account. The worst case for this delay is the 10 second buffer period plus a few seconds to process the reports in the cache. Given the staggering improvements in performance and the vast reduction in cost that this method provides, we were fairly happy with the small delay it introduces. Most people are unlikely to be sat watching their dashboard waiting for the first sign of a report coming in, so in pretty much all cases I don't think this delay will matter at all.

New session cache

Having been really impressed with Redis for our new report cache, I also wanted to pull our session storage out of Azure and bring it closer to the application. Previously we used Table Storage as our PHP session store, and it has worked, and still does work, exceptionally well. When it was just me running the show and I didn't have the time or the inclination to run my own distributed session store for all of the app servers, it solved a big problem for me really easily. The code for our CodeIgniter implementation is available on GitHub if you're interested. We're now bringing things closer and using Redis to provide session storage. Having our Redis cache on the local network means that accessing the session store will be considerably faster than reaching out to Azure, which directly translates into faster page load times as we're shaving off the roughly 40ms of overhead of fetching from Table Storage. Whilst there is also a cost saving from avoiding interactions with Azure, it's insignificant next to the cost of reports and actually less than the cost of running the Redis cache. The decision is about performance, not cost savings.

The only other thing to note is that we will be using a dedicated Redis cache just for session storage. If we were to share the cache used for report ingestion, there could be performance concerns if we got hit with a large volume of inbound reports. By running a dedicated cache we can alleviate those concerns and ensure the best performance on the front end.
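At its core, a session store is "write with an expiry, read until it expires". Here's a minimal sketch, assuming an in-memory dict standing in for the dedicated session instance and an arbitrary two-hour lifetime. The class and its defaults are hypothetical; the post doesn't describe the PHP handler actually used. With redis-py, `write` would map to roughly `r.setex(sid, ttl, value)` and `read` to `r.get(sid)`, using a client pointed only at the session instance, never at the report-ingestion one.

```python
import json
import secrets
import time
from typing import Callable, Dict, Optional, Tuple


class SessionStore:
    """Session store with per-session expiry (roughly SETEX on write and
    GET on read against a dedicated Redis instance)."""

    def __init__(self, ttl_seconds: int = 7200,  # assumed lifetime
                 clock: Callable[[], float] = time.time) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._data: Dict[str, Tuple[float, str]] = {}

    def create(self, payload: dict) -> str:
        sid = secrets.token_urlsafe(32)  # unguessable session id
        self.write(sid, payload)
        return sid

    def write(self, sid: str, payload: dict) -> None:
        # Every write refreshes the expiry, like SETEX would.
        self._data[sid] = (self._clock() + self._ttl, json.dumps(payload))

    def read(self, sid: str) -> Optional[dict]:
        entry = self._data.get(sid)
        if entry is None or entry[0] <= self._clock():
            self._data.pop(sid, None)  # expired or unknown: act like a missing key
            return None
        return json.loads(entry[1])
```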

1st team member

A few months ago I invited another security researcher and developer to come and join me for the ride and Michal Špaček became the first official member of the team. Tempted by the prospect of building a service to help organisations deploy and monitor security across the web (also beer and money) Michal has honestly been invaluable since he joined. One of the things I really hated about building the service alone was that all ideas were vetted and approved by me, which sounds like, and is, a terrible idea! I wanted to surround myself with people that would challenge me and my assertions, make valuable contributions, who knew the technology and the industry and honestly, most importantly, were just good people. Both Michal and Troy perfectly fit the bill and I have no doubt they will both make the service significantly better as a result.

The new pricing structure

One of the things that I realised fairly early on about the Report URI pricing structure was that it was unnecessarily complex. After reaching out to my wider network and getting some great feedback, this is how we arrived at the current pricing structure.

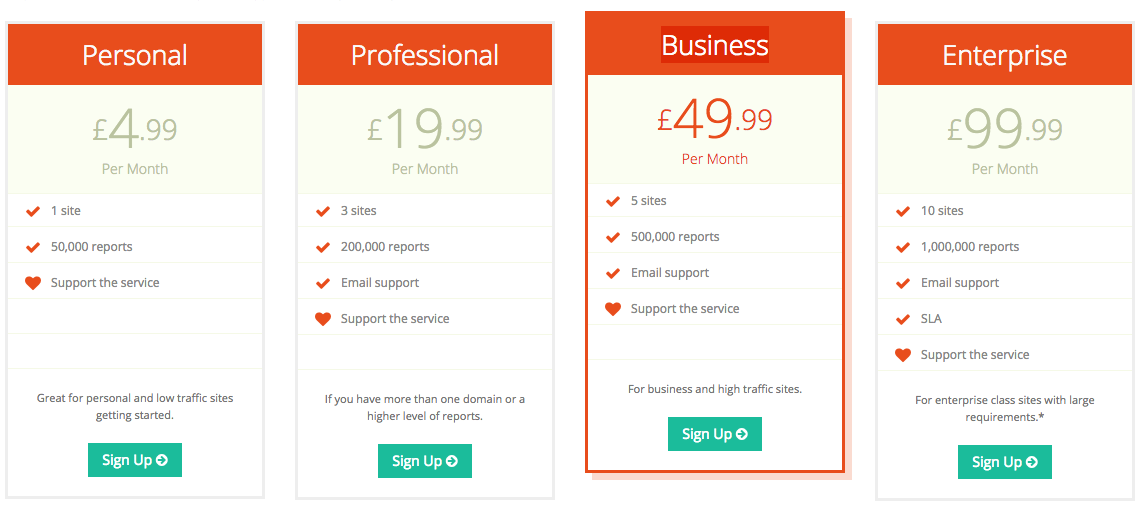

The first pricing structure I introduced was the set of tiers available on the optional subscriptions.

The model was fairly basic, and the biggest difference between tiers was the number of reports you could send and how many sites you could monitor. As I began to develop the 'v2' site I took a fairly similar approach and the structure didn't really change much.

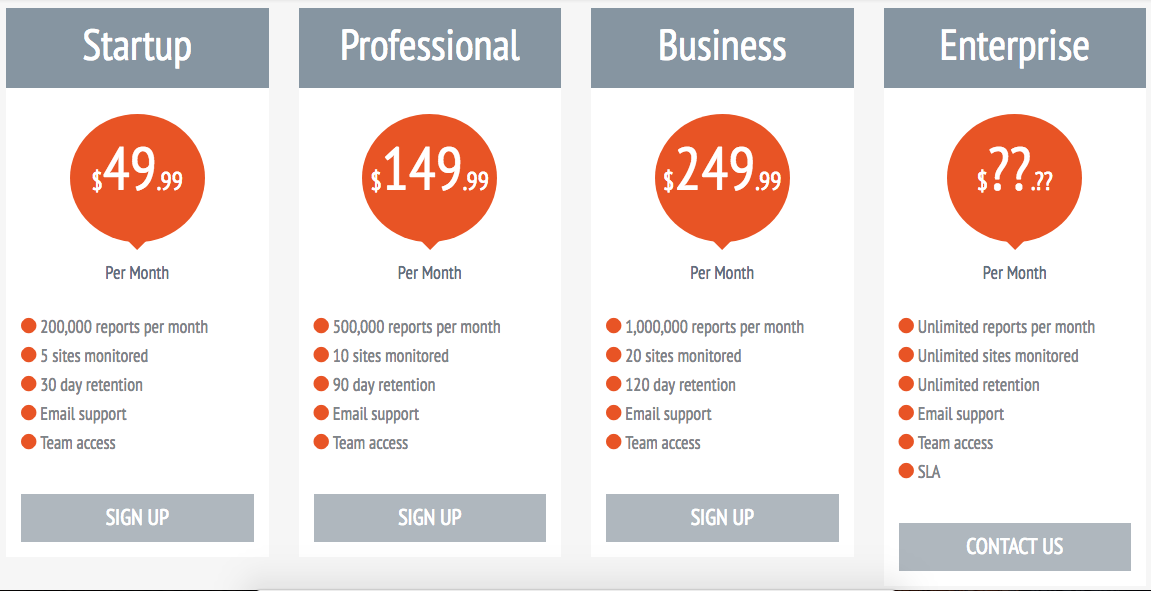

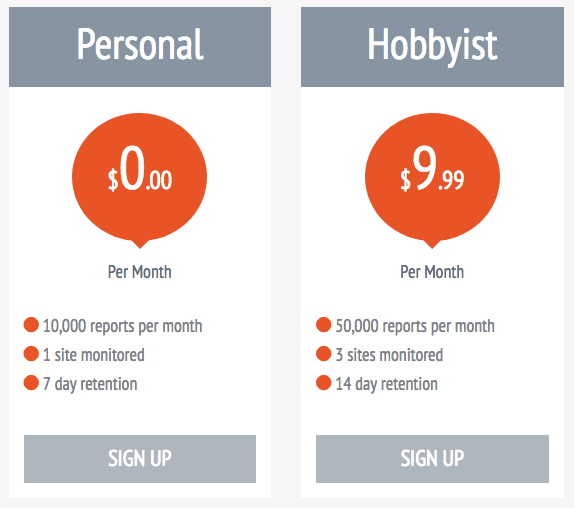

There would continue to be a free tier for those who needed basic reporting, then three fixed-price tiers before the customisable Enterprise plan. One thing I wasn't so keen on, and something that Troy also raised, was the jump between the free tier and the first paid tier. A gap of $49.99 seemed too high and was likely to stop a lot of people moving from the free plan to a paid plan, so a new, cheaper tier was introduced.

I was much happier with the $9.99 tier being introduced but we were now taking up an awful lot of space on the homepage and the pricing was fairly complicated with varying restrictions on each level. After further feedback and thoughts on the tiers we realised things needed to be simplified.

Simplify, simplify, simplify

When I started to pick apart the restrictions on each plan level, I realised that most of them existed just so that there was some benefit to moving up to a higher plan. Take the number of domains being monitored: the actual number doesn't really matter to us because it's the number of reports that incurs a cost. A million reports from one domain or one report from each of a million domains costs us the same. For that reason we decided to remove the limit on the number of sites for all tiers and allow any account to report for an unlimited number of sites. This simplified the pricing, simplified the code where we'd have had to enforce these restrictions and made the service accessible to users with a few high-volume sites or many low-volume sites. It was a win all round.

Troy took a similar view of the retention period, which also varied by account level. The main thing with all of these reports is that the real value is found at the time things are reported and shortly after. As the data gets older it loses some of its value, and if a problem is ongoing then reports will continue to be sent anyway. We retain the full data of reports for a period of time and then erase it, keeping only aggregate data for the graphs and stats pages. Instead of having varied levels of retention, and all of the added complexity that brings, we decided to give all accounts a single, useful retention period and then erase the full data after that, storing only the aggregates and statistics.
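The keep-aggregates, drop-detail approach can be sketched as two steps: count every report as it arrives, then periodically purge full reports that fall outside the window. This assumes aggregates are per-day, per-report-type counts; the record shape and function names are hypothetical, and `retention_days` is a parameter (the post sets it to 90 days for everyone).

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List


@dataclass
class Report:
    received: date
    kind: str  # hypothetical field, e.g. "csp"
    body: str


def record(report: Report, store: List[Report], aggregates: Counter) -> None:
    """Keep the full report and bump the per-day, per-type count that the
    graphs and stats pages read from."""
    store.append(report)
    aggregates[(report.received, report.kind)] += 1


def purge(store: List[Report], today: date, retention_days: int) -> List[Report]:
    """Drop full reports older than the retention window. Aggregates are
    left alone, so long-term graphs are unaffected."""
    cutoff = today - timedelta(days=retention_days)
    return [r for r in store if r.received > cutoff]
```

Counting at arrival rather than at purge time means the graphs cover recent days too, and nothing gets counted twice when old detail is erased.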

The two remaining features were Team Access and Email Support, and the simplest way to offer these was based on whether or not the account was paid. On the free account there is no Team Access or Email Support, and on any paid account you get Team Access, with Email Support being introduced slightly higher up.

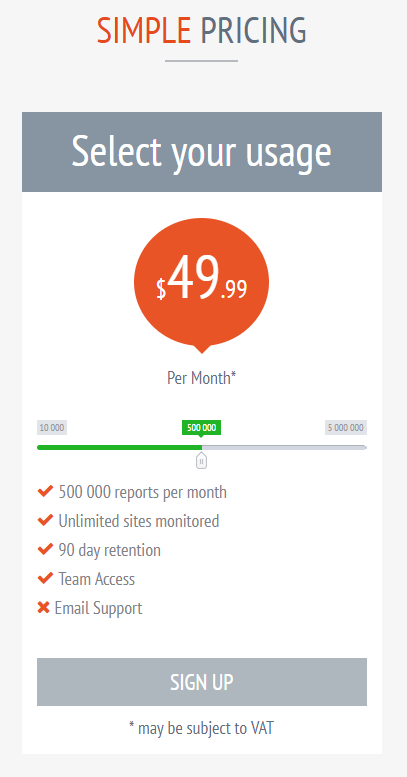

Usage-based pricing

With pretty much all of the complexity removed from the pricing model it was now mainly based on the number of reports you wanted, which makes sense as that's the only real thing that matters. Once we're there, why even have tiers at all? That's when we changed the pricing model from fixed tiers to a slider based on your desired usage so you could fine tune this to exactly what you need.

With this model came a level of simplicity that everyone was really happy with. You move the slider to the number of reports you need and you can collect reports from an unlimited number of domains. Whether you have a few high-volume sites or many low-volume sites doesn't matter: unlimited domains for everyone! Team Access and Email Support are also really simple; just move the slider up to the level required for each feature. The last thing, data retention, is now 90 days for everyone regardless of account level, which means the service is still super useful even on the free tier.

How many reports do I need?

This is still one of the hardest questions to answer because so many factors affect it: the demographic of your audience, the country they're based in, how mature your policy is, how much traffic you get, what kind of content you have on your site and much more. Even after all of the research I've done and the numbers I've crunched, it's still surprisingly difficult to give even rough guidance here! The best I can suggest right now is that if your policy is fairly new and you're just starting to explore reporting, you will probably need as many reports as you have pageviews. That's not to say that every page will fire a report, but you will probably have some pages that fire many reports and some that fire none. As your policy matures and your reporting requirement falls, you can just move the slider down and reduce your bill; everything is completely flexible and you're never locked in. If you're not using all of your allowance you can adjust your subscription down, and likewise if you've consumed all of your allowance you can increase it to continue reporting.

As always I'd love to hear feedback and thoughts on the pricing structure, please drop by in the comments below.

Teams

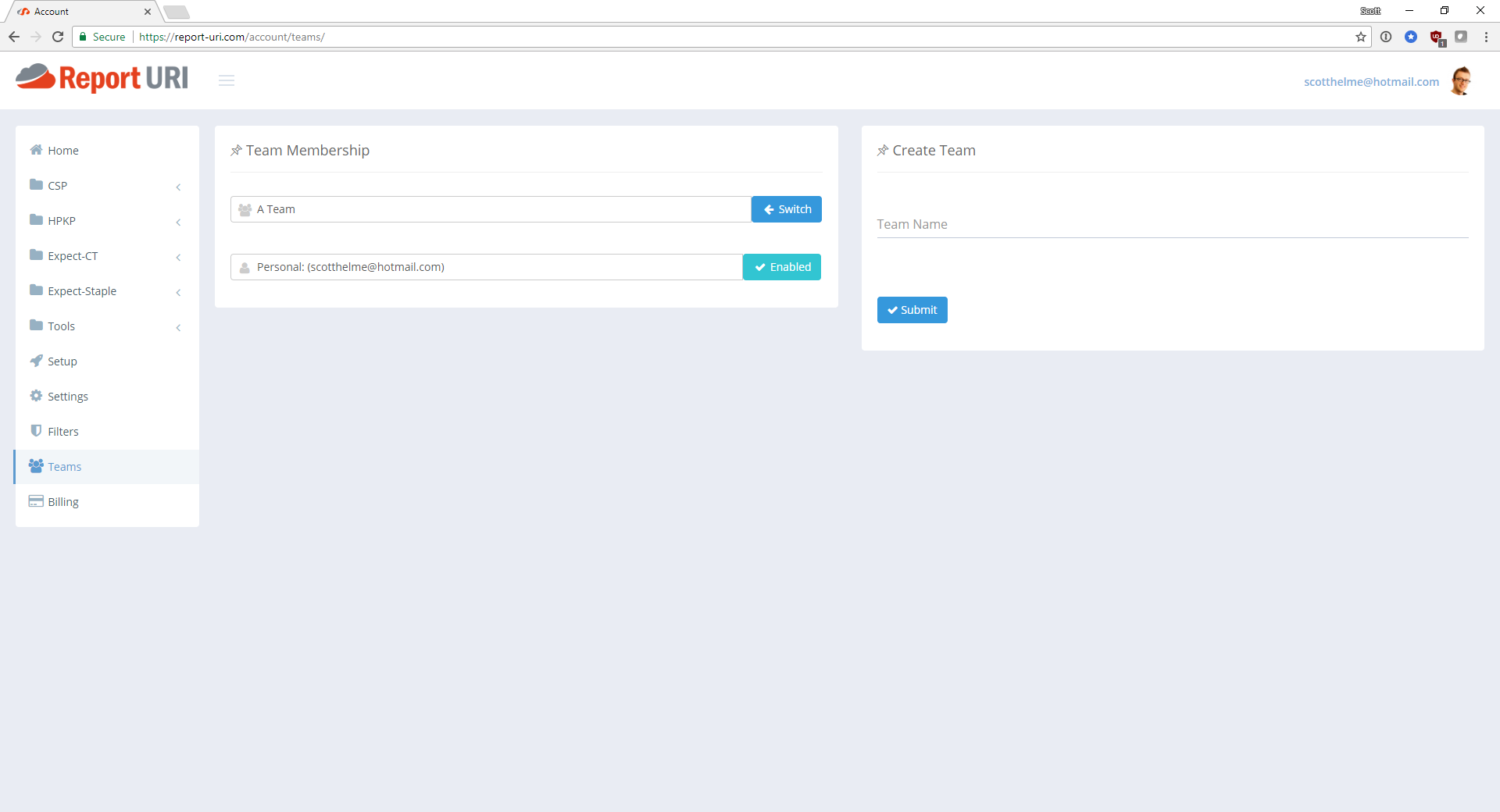

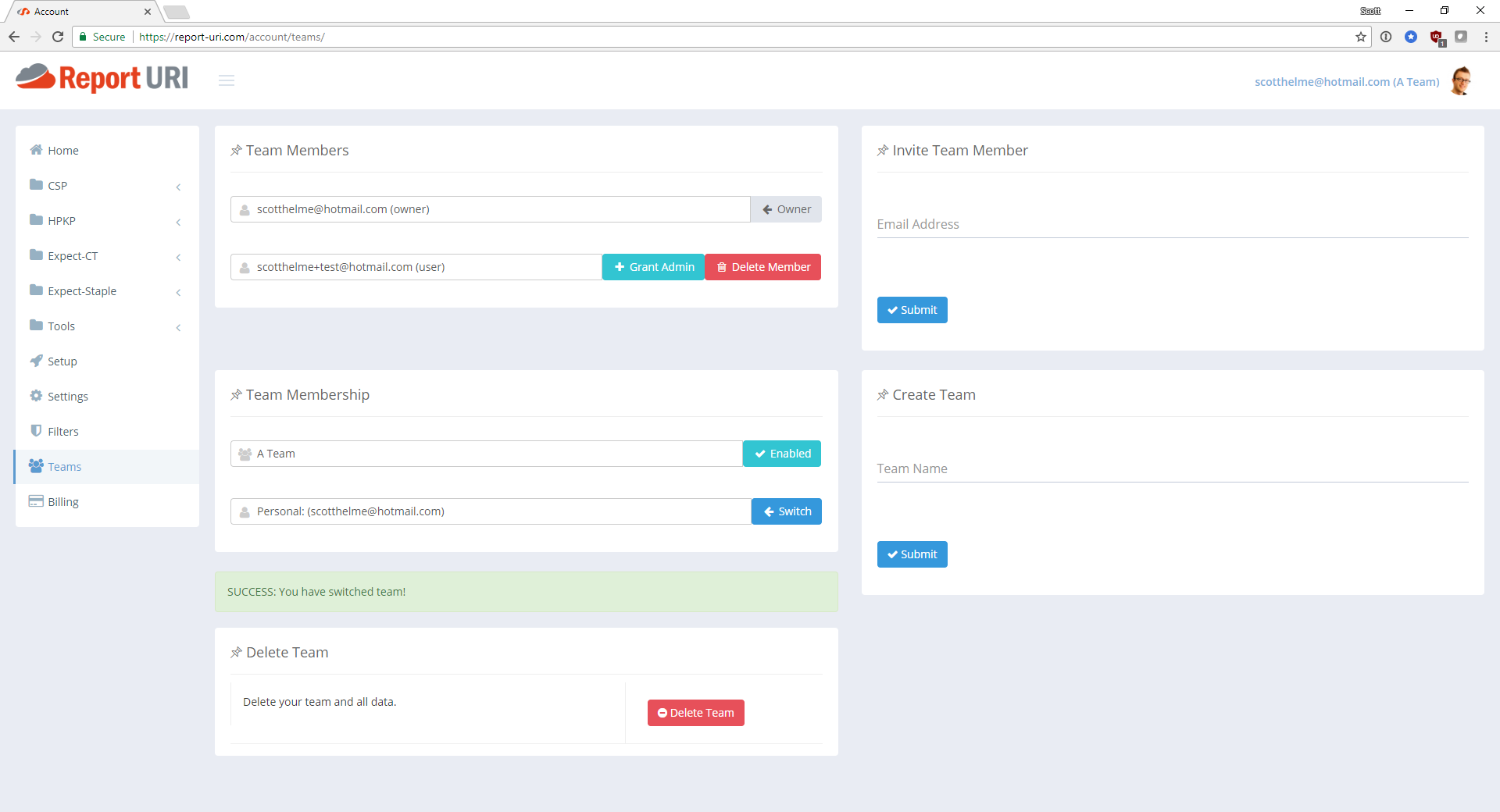

By far the most frequently requested feature has been team accounts with some form of role-based access. You asked and we listened, so I'm really happy to introduce Teams!

If you're subscribed at a level that includes Team Access you can create a team and invite anyone you wish to join it, even users on free accounts. Whoever creates the team is known as the Team Owner and this cannot be changed. The Team Owner has permanent admin rights over the team and can invite other team members to join. Other team members can hold one of two roles, Team Member or Admin. A Team Member can view all report data and settings but cannot modify any settings or invite new team members. If the Team Owner grants the Admin role, that user can change settings for the team and invite new members but cannot grant Admin to anyone else.
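The role rules above fit naturally into a small permission table. This is a sketch transcribed from the rules as described, not the service's code. The names are hypothetical, and treating demotion from Admin as Owner-only (the same as granting it) is an assumption the post doesn't spell out.

```python
from enum import Enum, auto


class Role(Enum):
    OWNER = auto()
    ADMIN = auto()
    MEMBER = auto()


class Action(Enum):
    VIEW = auto()
    CHANGE_SETTINGS = auto()
    INVITE = auto()
    GRANT_ADMIN = auto()


# Permission table transcribed from the Teams rules.
PERMISSIONS = {
    Role.OWNER: {Action.VIEW, Action.CHANGE_SETTINGS, Action.INVITE, Action.GRANT_ADMIN},
    Role.ADMIN: {Action.VIEW, Action.CHANGE_SETTINGS, Action.INVITE},
    Role.MEMBER: {Action.VIEW},
}


def allowed(role: Role, action: Action) -> bool:
    return action in PERMISSIONS[role]


def set_role(actor: Role, target: Role, new_role: Role) -> Role:
    """Return the target's new role. The Owner role can never be given or
    taken away, and only the Owner may change anyone's role."""
    if Role.OWNER in (target, new_role):
        raise PermissionError("the Team Owner cannot be changed")
    if not allowed(actor, Action.GRANT_ADMIN):
        raise PermissionError("only the Team Owner can change roles")
    return new_role
```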

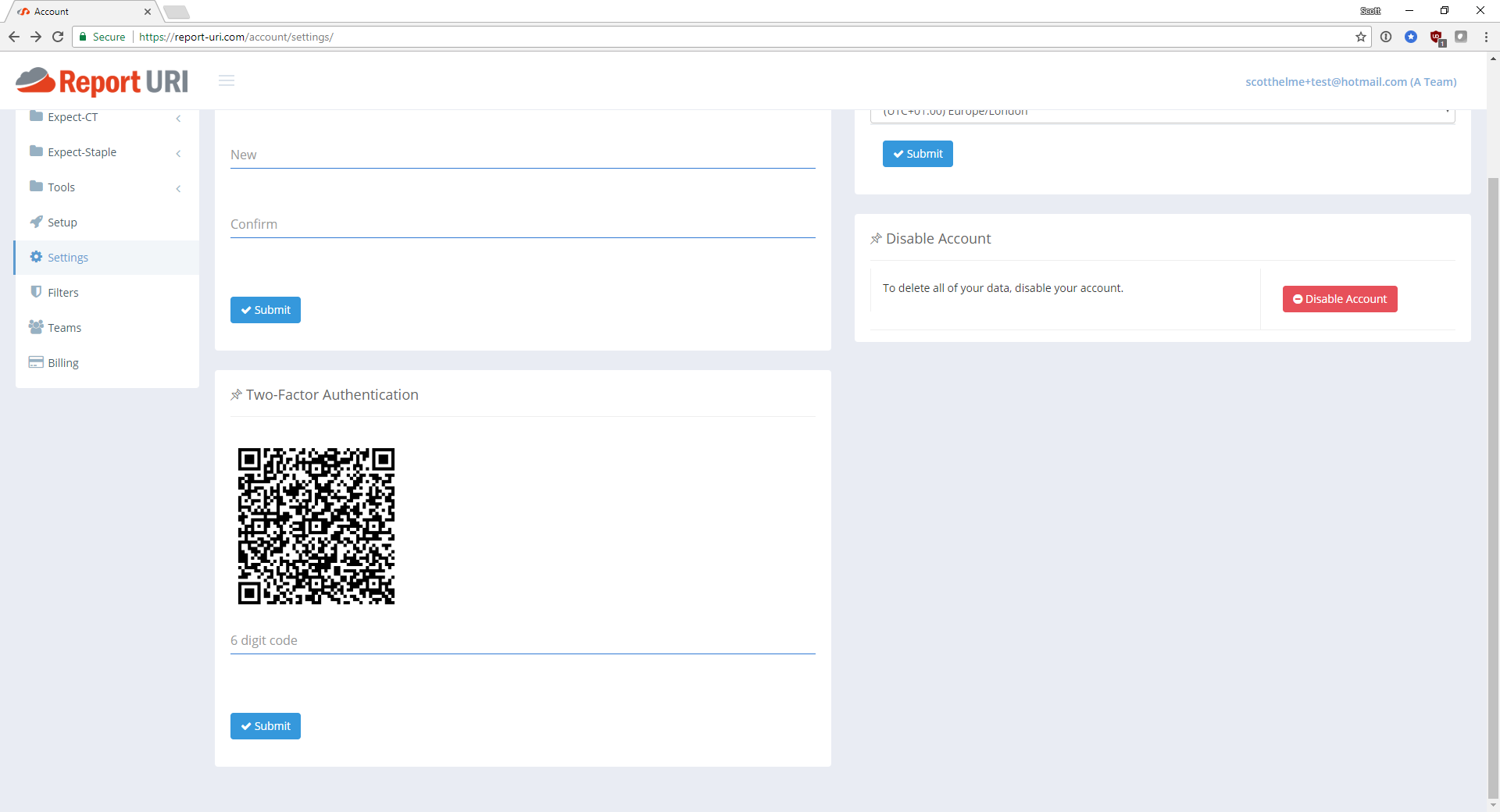

2FA

Another thing I should have implemented a long time ago was 2FA, but I can finally say it's here! Head over to the Settings page and you can enable 2FA on your account using the app of your choice, like Google Authenticator or Authy.
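Apps like Google Authenticator and Authy generate their 6-digit codes with the standard TOTP algorithm (RFC 6238, built on HOTP from RFC 4226). The post doesn't describe Report URI's server-side implementation, so treat this as a generic sketch of how such a code is computed and checked. The one-step drift `window` is an assumed tolerance.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret_b32: str, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP using HMAC-SHA1, the default for authenticator apps."""
    key = base64.b32decode(secret_b32.upper() + "=" * (-len(secret_b32) % 8))
    counter = int((time.time() if at is None else at) // step)
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation (RFC 4226, section 5.3)
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret_b32: str, submitted: str, at: Optional[float] = None, window: int = 1) -> bool:
    """Accept codes from the current 30-second step and `window` steps
    either side to tolerate clock drift, comparing in constant time."""
    now = time.time() if at is None else at
    return any(hmac.compare_digest(totp(secret_b32, now + i * 30), submitted)
               for i in range(-window, window + 1))
```

With the RFC 6238 test secret (the ASCII string `12345678901234567890`), `totp(secret, at=59)` gives `287082`, the 6-digit form of the RFC's published `94287082`.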

When you enable 2FA, make sure you keep a safe copy of your recovery code. If you lose the ability to generate your 6-digit 2FA code, the recovery code is the only way to regain access to your account.
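One common way to handle a recovery code is to show it to the user once and keep only a hash of it server-side, so a database leak doesn't reveal it. This sketch is an assumption. The post doesn't describe the code format or storage, so the 4x8 hex layout and the use of unsalted SHA-256 are illustrative choices. SHA-256 without a salt is reasonable here only because the code carries 128 random bits, unlike a user-chosen password.

```python
import hashlib
import hmac
import secrets
from typing import Tuple


def new_recovery_code() -> Tuple[str, str]:
    """Return (code shown once to the user, hash to store server-side)."""
    code = "-".join(secrets.token_hex(4) for _ in range(4))  # 4 groups of 8 hex chars
    return code, hashlib.sha256(code.encode()).hexdigest()


def check_recovery_code(submitted: str, stored_hash: str) -> bool:
    # Normalise whitespace and case, then compare hashes in constant time.
    candidate = hashlib.sha256(submitted.strip().lower().encode()).hexdigest()
    return hmac.compare_digest(candidate, stored_hash)
```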

The next steps

The publication of this blog marks the official launch of the new 'v2' service, and there are a couple of other small but important changes you might notice. To introduce some consistency and have a definitive way to refer to the service, we've decided to go with "Report URI" as its name. With the 'official' name for the company decided, there were a couple of other changes, the main one being the move to the .com domain. In real terms this changes very little, but it marks the next step in maturing the service, and with it came a minor update to the branding too. All traffic from the .io domain will be redirected to the .com so no reports will be lost, but it would be good if you could update the reporting address in your policy when you get the chance. In the coming weeks and months there are countless amazing features we will be working on and delivering, so please stay tuned and follow our progress as we bring out everything we have in store. On the face of it CSP reporting might not seem that exciting, but I'm going to bet that we can change your mind.
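For the .io to .com redirect, the key detail is that reports arrive as POST requests. Here's a minimal sketch, assuming a plain host swap that keeps path and query unchanged. The post doesn't say how old reporting addresses map to new ones or which status code is actually used, so `redirect_for` and that mapping are hypothetical. It picks 308 because, under RFC 7538, the client must repeat the same method and body, whereas with 301 and 302 clients may switch a POST to a GET.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit, urlunsplit

OLD_HOST = "report-uri.io"
NEW_HOST = "report-uri.com"


def redirect_for(url: str) -> Optional[Tuple[int, str]]:
    """Return (status, Location) for a request to the old domain, or None
    if the URL isn't on the old domain."""
    parts = urlsplit(url)
    if parts.hostname != OLD_HOST:
        return None
    # Assumed mapping: same path and query, new host, always HTTPS.
    return 308, urlunsplit(("https", NEW_HOST, parts.path, parts.query, parts.fragment))
```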