Regular readers will know that I make extensive use of Azure Table Storage in both Report URI and Security Headers. As Report URI has grown and we’re now processing billions of reports per month for our users we’re always interested in performance or efficiency savings wherever possible. We recently launched a whole new bunch of features and behind the scenes there was a pretty sweet performance tweak.

A quick intro to Table Storage

I have loads of blogs on our use of table storage so you can always dig into those if you want the technical details but at a really high level Table Storage is a key:value store. You can put something in with a key and then query it back out with the key, and, well, that’s pretty much it! The key is actually a combination of two parts, called the Partition Key and Row Key, which allow for some flexibility when querying, and entities (rows for relational database people) can have properties (columns, except no schema). We put reports into Table Storage using a variety of PK and RK combinations that allow us to query them out really efficiently and we also store a running counter that we increment ourselves as that’s faster and easier than trying to count all of the rows when you’re looking for something like the total number of reports for a given day for example.

PK: csp26092018

RK: total

count: 12489The problem

If we want to draw a graph of CSP reports for the last week then we need to pull back 7 entities, the total count for today and the 6 previous days.

for ($i = 0; $i < $unitsToGoBack; $i++) {

partitionKey = $reportType . date('dmY', strtotime("-{$i} days"));

try {

$entity = $this->tableRestProxy->getEntity($this->table_name, $partitionKey, 'total')->getEntity();These 7 getEntity() calls are made against Table Storage sequentially and they get us the data we need to plot onto the graph, we can now return the page and draw the graph for the user. This all works really well and we can go up to the last month in this fashion without any real performance worry because Table Storage is seriously fast. When we switch to looking at reports for a whole month, and say the last 6 months, we don’t pull out 180 counts for each day, we also maintain a count for the month so we can just retrieve that, requiring only 6 getEntity() calls. What happens when we can’t work around many calls like that though? In the last update I wanted to add an overview graph for the last 7 days for all report types. That meant I needed to get the day count for each of the 7 days for each of the 5 report types we support, CSP, HPKP, CT, XSS and Staple. That means 35 sequential calls into Table Storage and while Table Storage is really fast, we’re getting into territory where that many sequential calls is starting to be too many. To add to that problem we also went and added 4 new reports types didn’t we… That now means we need 63 sequential getEntity() calls into Azure to get all of the entities needed to render the graph, not good. There’s really no way for us to change or get around requiring that many calls, we just needed to do them faster and that meant we needed to async them.

Async and PHP

People familiar with other languages may have long used the concept of async requests with promises and other constructs, but in PHP, and especially for me, this was a more recent development. I start to dig around in the Azure Storage PHP SDK and noticed that it’s using Guzzle and has support for making async requests to Table Storage but doesn’t do so by default. At least this means it shouldn’t be too hard for us to add support!

/**

* Gets table entity.

*

* @param string $table The name of the table.

* @param string $partitionKey The entity partition key.

* @param string $rowKey The entity row key.

* @param GetEntityOptions|null $options The optional parameters.

*

* @return GetEntityResult

*

* @see http://msdn.microsoft.com/en-us/library/windowsazure/dd179421.aspx

*/

public function getEntity(

$table,

$partitionKey,

$rowKey,

GetEntityOptions $options = null

) {

return $this->getEntityAsync(

$table,

$partitionKey,

$rowKey,

$options

)->wait();

}Given the really simple nature of our getEntity() loop that we looked at earlier, all we need to do is batch up these calls and dispatch all of them together.

$promises = [];

foreach ($this->config->item('valid_report_types') as $reportType) {

for ($i = 0; $i < 7; $i++) {

$promises[$reportType . $i] = $this->tableRestProxy->getEntityAsync('reports' . $tableId,

$reportType . date('dmY', strtotime("-{$i} days")), 'total');

}

}Once we have our batch of requests they simply need to be executed and have the results returned. There are a couple of different ways to do this and it depends on what your tolerance to a request failing is.

// Throws exception if any request fails

$results = Promise\unwrap($promises)

// Waits for all requests to complete, even if some fail.

$results = Promise\settle($promises)->wait();Because we’re doing getEntity() calls we may get a 404 back from Table Storage if the entity we ask for doesn’t exist, which would be classed as a failure and stop the rest of the requests if we were using unwrap, so settle is what we need. Because we can expect to see a 404, in which case it means we have no reports for that particular day, we need to be tolerant of that and simply count the failure as a 0 count. Now that we have the result of all of our queries back we can process the entities we got and populate the array for the graph as we would have done before. The big question is, how much of a performance gain did we see?

The results

Now, when I’m developing and testing features I’m doing that locally on my dev server at home so we need to bear in mind the impact that has on the numbers. When my server at home calls back to Azure it has a considerably higher latency because I’m in the UK (basically in a field) and our Azure instance is on the West Coast of the USA. Our normal production servers are also located on the West Coast and are roughly 4ms away. That said, the relative difference between async and non-async will be the same, it’s just that my numbers look a lot bigger here because of my location.

float(6.2748548984528)

[["19-09-2018",0,0,0,0,0],["20-09-2018",0,0,0,0,0],["21-09-2018",0,0,0,0,0],["22-09-2018",0,0,0,0,0],["23-09-2018",0,0,0,0,0],["24-09-2018",0,0,0,0,0],["25-09-2018",0,0,0,0,0]]

float(1.2707870006561)

[["19-09-2018",0,0,0,0,0],["20-09-2018",0,0,0,0,0],["21-09-2018",0,0,0,0,0],["22-09-2018",0,0,0,0,0],["23-09-2018",0,0,0,0,0],["24-09-2018",0,0,0,0,0],["25-09-2018",0,0,0,0,0]]That’s some pretty significant improvement, a 79.75% reduction, and you can probably guess which array was generated using the synchronous calls and which one was generated using the asynchronous calls! It’s also worth noting that only 5 counts are being returned here each day because I tested and deployed this before we had support for the 4 new report types fully implemented, this will save us even more in that scenario.

Limitations on where we can use this

Doing async calls into Table Storage like this is no doubt a huge performance boost and it doesn’t cost any more or less for us to do it, it’s exactly the same. Of course this means we want to do this wherever possible but it’s not as simple as being able to execute all of our queries async in this fashion. The getEntity() calls above are a great example of where this works perfectly, we’re providing the table, partition key and row key of the entity which will either be returned or won’t exist and give us a 404, nice and easy. A similar thing could be said for insertEntity too, we generate an entity locally and call insertEntity() which will either succeed or fail and we could call multiple inserts together. You'd normally use an Entity Group Transaction (EGT) for that but if the entities are in different partitions then you can't, you can however use this method. What about less specific queries though, like queryEntities())?

queryEntities()

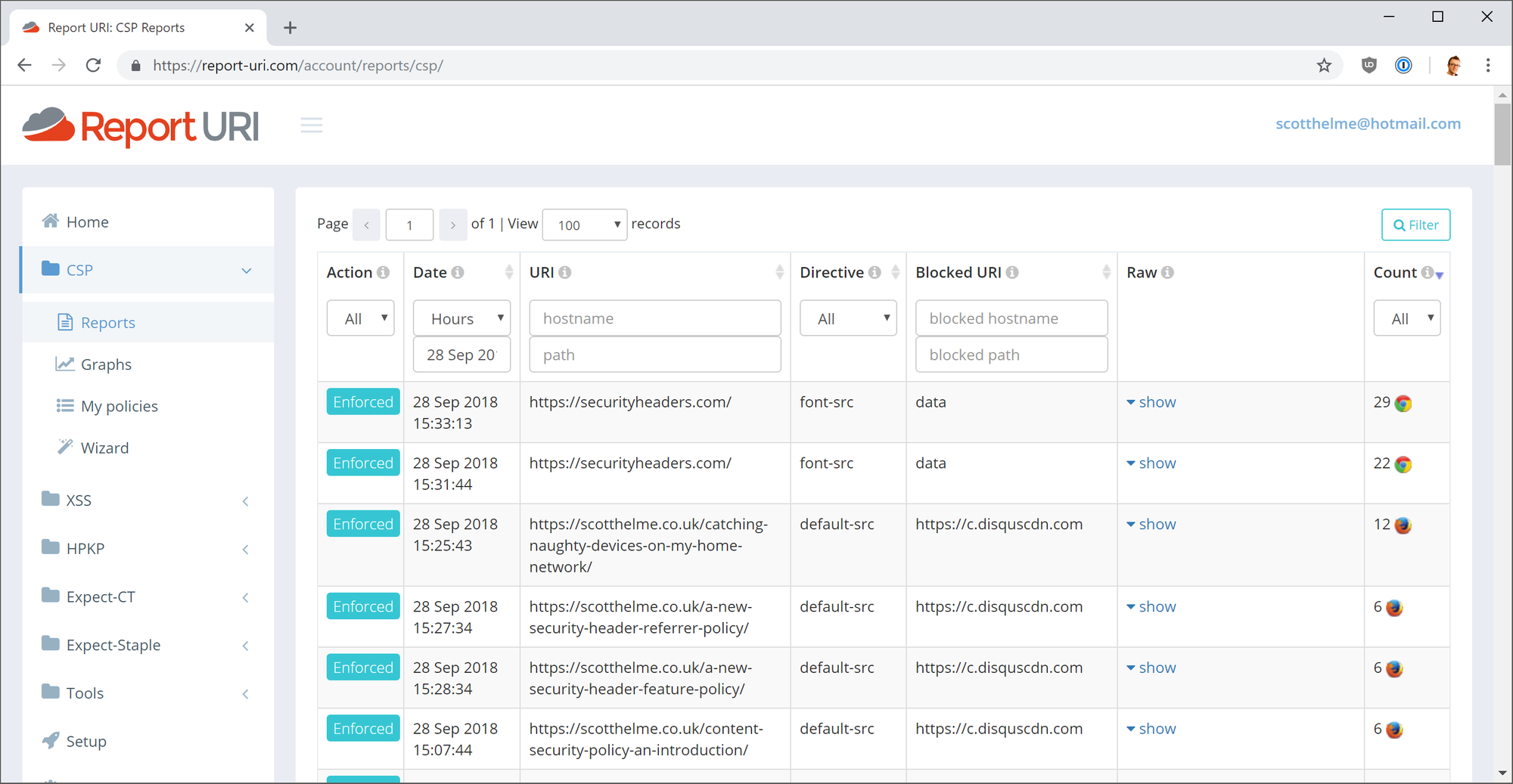

We use queryEntities() a lot in Report URI, it’s how we populate the tables on the Reports page with all of the reports that match your chosen search criteria.

A page like that would result in a really simple query that looks like this where simply fetch all CSP reports for the current hour.

PartitionKey eq 'csp2809201815'One of the limitations of queryEntities() is that it can only return a maximum of 1,000 entities at a time. If there are more than 1,000 entities then the result of the query will contain a continuation token that allows you to call back into Table Storage to fetch the rest of the Entities.

$result = $this->tableRestProxy->queryEntities($table, "PartitionKey eq 'csp2809201815'");

$reports = $result->getEntities();

$nextPartitionKey = $result->getNextPartitionKey();

$nextRowKey = $result->getNextRowKey();

while(!is_null($nextRowKey) && !is_null($nextPartitionKey)) {

$options = new QueryEntitiesOptions();

$options->setNextPartitionKey($nextPartitionKey);

$options->setNextRowKey($nextRowKey);

$options->setFilter(Filter::applyQueryString($filter));

$newResult = $this->tableRestProxy->queryEntities($table, $options);

$reports = array_merge($newResult->getEntities(), $reports);

$nextPartitionKey = $newResult->getNextPartitionKey();

$nextRowKey = $newResult->getNextRowKey();

}

This means that if we want to pull back 5,000 entities as a result of our query then we have to do those 5 calls sequentially because there’s no way to know the continuation token until we’ve pulled back the result. That puts us in a bit of a pickle but whilst I was looking at this it did give me an idea of how we might be able to work around that limitation... If you’re familiar with Table Storage and have an idea let me know in the comments below but for now I will probably save that for another blog as it’s a little too long to go into here!

Future ideas

As I said, there’s an idea burning away in my mind on how we can get around the 1,000 entities limit but also where else in our code we can do some trickery to allow us to squeeze more performance out of things by using async calls to Table Storage where it might not be immediately obvious and one of them would be another pretty significant performance improvement too. For now, I hope the code and examples above might help others using Azure and the Storage PHP SDK and give you an insight into the inner workings and development of Report URI!