Over the weekend, I saw a tweet from Troy Hunt who posed a little project idea. Having heaps of spare time... I thought I'd take on the challenge and see if I could help!

The idea

As I'm sure many of you know, Troy runs Have I Been Pwned, a site that tracks data breaches and allows people or organisations to be alerted to their exposure. Of course, after a data breach, one might wonder how seriously a website takes security, given the event.

Looking for a little project to keep you busy on the weekend? I was just thinking: how many of the breached websites in @haveibeenpwned now have a security.txt file? So, if you feel like grabbing those domains and querying them all, there's an API here: https://t.co/ftiKkfH7Hp

— Troy Hunt (@troyhunt) July 23, 2023

That sounds simple enough, let's get on it!

Crawler.Ninja

I have another project called https://crawler.ninja, where I scan the top 1,000,000 sites in the world every day and analyse various aspects of their security. You can see a summary of the daily scan data or access a dump of all historic scan data, but be warned: there are now many terabytes(!) of historic data!

I already look for the presence of the security.txt file that Troy was asking about, so I sliced out that bit of the crawler code and ran it against the list of breached domains from HIBP. Pulling the list of domains for all the breaches in HIBP is easy; here's my code to do it.

```php
<?php

// Fetch the full list of breaches from the HIBP API.
$ch = curl_init();
curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);
curl_setopt($ch, CURLOPT_URL, 'https://haveibeenpwned.com/api/v3/breaches');
$response = curl_exec($ch);

$breachList = json_decode($response, true);

// Collect the trimmed, lowercased domain of each breach, skipping empty ones.
$domainList = [];
foreach ($breachList as $breach) {
    if (isset($breach['Domain']) && trim(strtolower($breach['Domain'])) !== '') {
        $domainList[] = trim(strtolower($breach['Domain']));
    }
}

// Remove duplicates, then append one domain per line to the output file.
$domainList = array_unique($domainList);
foreach ($domainList as $domain) {
    file_put_contents('output.txt', $domain . "\r\n", FILE_APPEND | LOCK_EX);
}
```

Here's the list of 641 unique domains that I found and that I used for analysis.

Security.txt

The idea of security.txt is really quite simple and it's explained in that blog post, but the TL;DR is that you can put contact info in a text file in a specified location so people can reach you when things go bad. You can see I have one of these files on my important sites:

https://scotthelme.co.uk/.well-known/security.txt

https://securityheaders.com/.well-known/security.txt

https://report-uri.com/.well-known/security.txt

There are some specific requirements on where to host this file and how to present it in RFC 9116, but for the most part, it seems that people get it right. Notably, the following:

For web-based services, organizations MUST place the "security.txt" file under the "/.well-known/" path

The file MUST be accessed via HTTP 1.0 or a higher version, and the file access MUST use the "https" scheme

It MUST have a Content-Type of "text/plain" with the default charset parameter set to "utf-8"

This field [Contact] MUST always be present in a "security.txt" file.

It seems the most common failure among sites that attempt to meet the requirements is serving a Content-Type of text/plain instead of the required text/plain; charset=utf-8. For this analysis, I'm going to take a slightly more relaxed approach and allow text/plain, but I've also provided the list of sites that pass the stricter check.

On the strict check, only 6 sites out of the 641 checked have a security.txt file and on the relaxed check, it's slightly better at 11... That means we're only seeing around 1.7% of these sites using security.txt files!
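The strict and relaxed checks described above could be sketched roughly as follows. This is a minimal illustration, not the actual Crawler.Ninja code: the function name `classifySecurityTxt` and its return labels are hypothetical, and it assumes the status code, Content-Type header and body have already been fetched over HTTPS from `/.well-known/security.txt`. It also assumes the relaxed check accepts any `text/plain` response that lacks `charset=utf-8`.

```php
<?php

/**
 * Classify a security.txt response as 'strict', 'relaxed' or 'none'.
 * Hypothetical helper for illustration only.
 */
function classifySecurityTxt(int $status, ?string $contentType, string $body): string
{
    // The file must be served successfully and contain a Contact field
    // (field names are case-insensitive).
    if ($status !== 200 || preg_match('/^Contact:[ \t]*\S+/mi', $body) !== 1) {
        return 'none';
    }

    // Split e.g. "text/plain; charset=utf-8" into the media type and its parameters.
    $parts = array_map('trim', explode(';', strtolower($contentType ?? '')));
    $mediaType = array_shift($parts);
    if ($mediaType !== 'text/plain') {
        return 'none';
    }

    // Strict: a charset parameter set to utf-8 must be present.
    foreach ($parts as $param) {
        if (str_replace(['"', ' '], '', $param) === 'charset=utf-8') {
            return 'strict';
        }
    }

    // Relaxed (assumption): text/plain without charset=utf-8 is still accepted.
    return 'relaxed';
}
```

Under these assumptions, a site counted by the strict check is also counted by the relaxed one, which is why the relaxed total can only be equal or higher.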

Security Headers

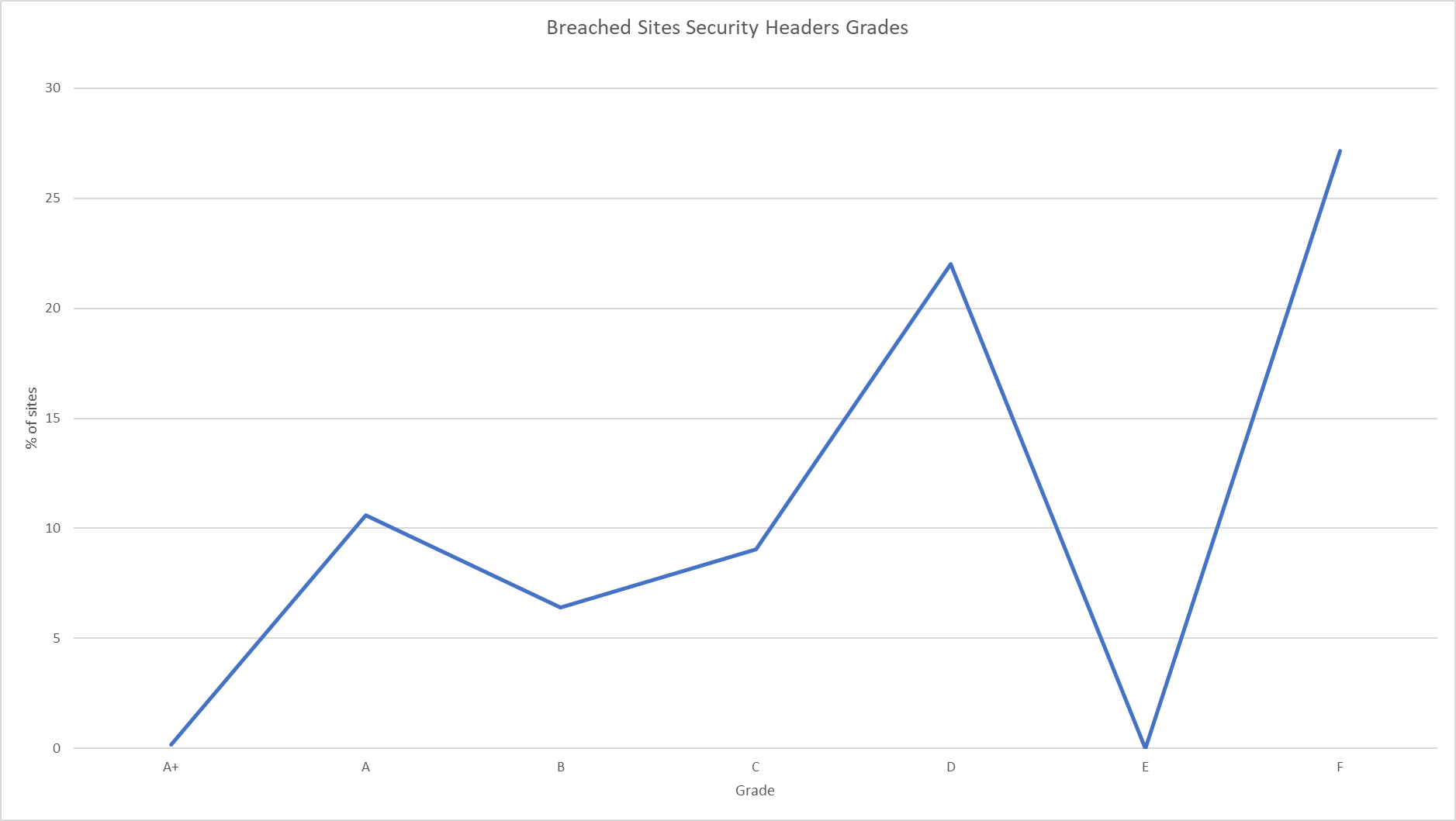

Whilst I was doing this security analysis, I figured I could quickly and easily extend this to add some more value. Regular readers will know of my Security Headers project that recently joined Probely, where you can do a free security scan of your website in ~2 seconds! Well, Security Headers also has an API so I wrote a quick API client to run the list of HIBP domains against the Security Headers API too. You can see the raw results to do your own analysis, but I think this graph nicely summarises that things aren't as good as they could be.

There were a handful of domains that don't resolve, some that blocked our scanner outright and some that didn't respond to the scanner, but we got a successful scan on the 483 domains shown in the data. Here's how the scores break down for them.

| Grade | Sites |
|---|---|
| A+ | 1 |
| A | 68 |
| B | 41 |
| C | 58 |
| D | 141 |
| E | 0 |
| F | 174 |

Surprisingly, it's not too bad, but only a single site got an A+, on a list of some really, really big sites.

It will be interesting to see how this tracks in the future and whether or not the presence of something like a security.txt file, or even your score on Security Headers, can be used in any way as an indicator of how seriously you take security! 🤔
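To put the grade counts in proportion, here is a small sketch that turns the table above into percentages of the 483 successful scans. The `gradeShare` helper is a hypothetical name introduced for illustration; the counts are copied straight from the table.

```php
<?php

// Grade counts copied from the table above (483 successful scans in total).
$grades = ['A+' => 1, 'A' => 68, 'B' => 41, 'C' => 58, 'D' => 141, 'E' => 0, 'F' => 174];

/** Percentage of scanned sites whose grade is in $selected, to one decimal place. */
function gradeShare(array $grades, array $selected): float
{
    $total = array_sum($grades);
    $matched = array_sum(array_intersect_key($grades, array_flip($selected)));
    return round($matched / $total * 100, 1);
}

// Print each grade with its count and share of the total.
foreach ($grades as $grade => $count) {
    printf("%-2s %3d  %5.1f%%\n", $grade, $count, gradeShare($grades, [$grade]));
}
```

Calling `gradeShare($grades, ['D', 'F'])` gives the combined share of sites graded D or F, and `gradeShare($grades, ['A+', 'A'])` does the same for the top two grades.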

Want to try the Security Headers API? Get 10% off your first 3 months with HIBP10 at checkout!