It's time for the 4th instalment of my Alexa Top 1 Million scan and I've added a heap of new metrics to the crawler for analysis. On top of this there are also some other exciting announcements. Let's dig in!

Previous Crawls

I've done three crawls before this one: Aug 2015, Feb 2016 and Aug 2016. They've shown some awesome trends in our adoption of security headers and HTTPS, and I've made several improvements to my crawler along the way. These latest results are literally fresh off the press because I'm now running my crawl every single day, but more on that later.

Feb 2017

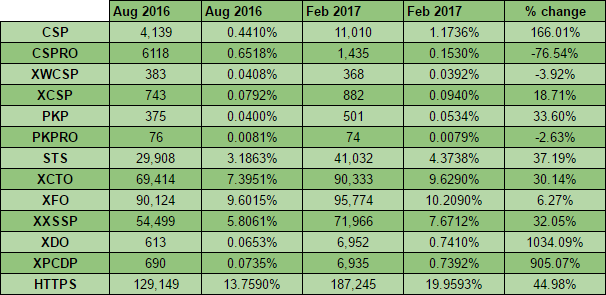

It's really awesome to see that we are still making great progress towards securing the web and the most recent results back that up. Here is a quick glance at the headline figures from the latest scan.

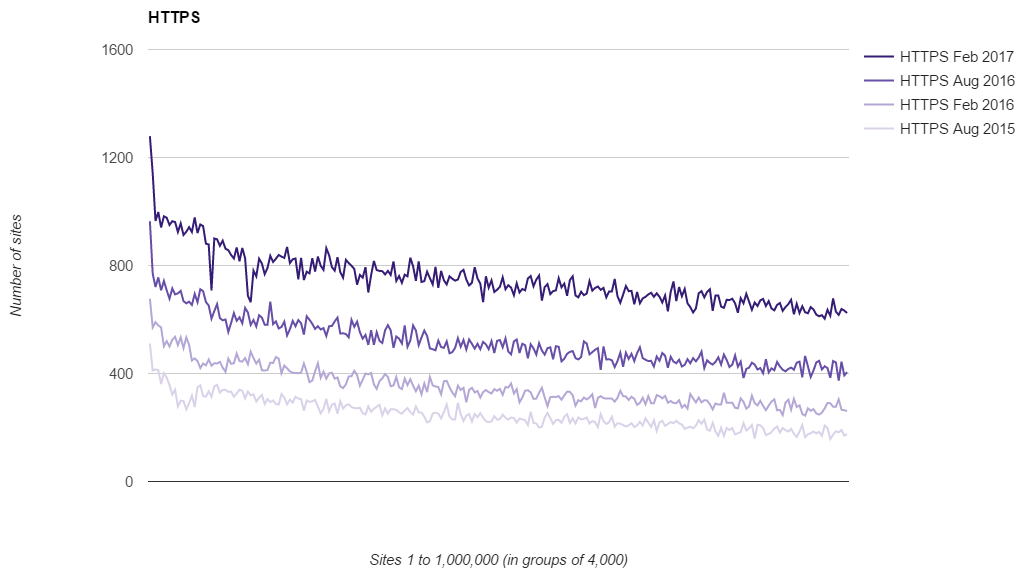

There are some really good numbers in here, and the first and hopefully most obvious is the absolutely enormous jump in the adoption of Content Security Policy. In the last 6 months there has been a 166% increase in the number of sites deploying CSP in the Alexa Top 1 Million, which is a great success. There are countless great things you can do with CSP, but right now the most popular uses appear to be helping sites migrate to HTTPS by fixing mixed content, and framing/clickjacking protection (see the data later).

On the subject of HTTPS and migrations, the other really important metric I'm tracking is how many sites actively redirect from HTTP to HTTPS, and I'm glad to say we're still seeing significant progress there too! With a 45% increase in the number of sites redirecting to HTTPS, we're now just a whisker away from having 20% of the Top 1 Million sites using HTTPS. This is how that progress looks over the last 2 years.
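The headline percentages are simple ratios. The sketch below reproduces the "just under 20%" HTTPS figure from the crawl summary further down this post, assuming the denominator is the 938,133 rows the crawler actually stored rather than a flat 1,000,000 (against a flat million it works out to about 18.7%). The `percent_increase` helper shows how a growth figure like the 166% CSP jump is calculated; the previous scan's raw counts aren't repeated in this post, so the numbers in its usage line are purely illustrative.

```python
def percent_increase(old: float, new: float) -> float:
    """Relative growth from old to new, expressed as a percentage."""
    if old <= 0:
        raise ValueError("old must be a positive count")
    return (new - old) / old * 100.0


def share_percent(part: int, whole: int) -> float:
    """What percentage of `whole` the count `part` represents."""
    return part / whole * 100.0


# Figures taken from the Feb 2017 crawl summary in this post.
TOTAL_ROWS = 938_133
HTTPS_REDIRECTS = 187_245

if __name__ == "__main__":
    # Assumption: the share is measured over stored rows, not a flat 1,000,000.
    print(f"{share_percent(HTTPS_REDIRECTS, TOTAL_ROWS):.2f}% redirect to HTTPS")
    # Illustrative only: a count going from 100 to 266 is a 166% increase.
    print(f"{percent_increase(100, 266):.0f}% increase")
```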

Security Headers

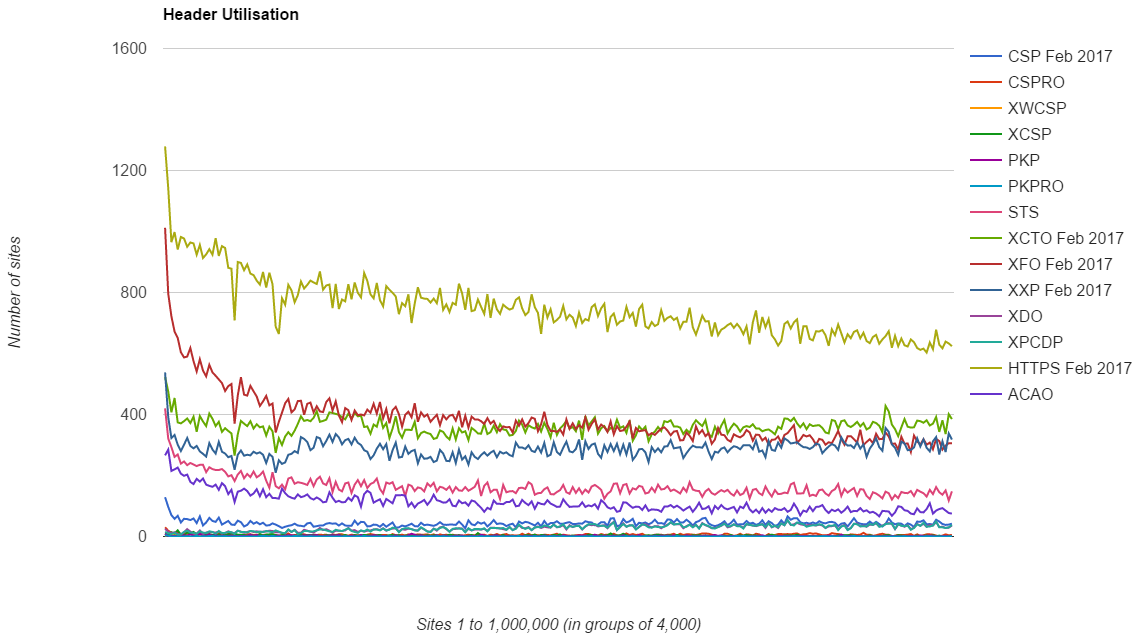

Of course, the original purpose of me starting these scans was to track the use of security headers across the Top 1 Million and we're still seeing positive indicators across the board there too.

All of the familiar trends from previous scans are still present: high adoption at the top end of the ranking, then a drop in adoption as you move down through the lower-ranked sites. The same trend breakers are also present in this scan: the X-XSS-Protection (XXP) and X-Content-Type-Options (XCTO) headers buck the trend and actually increase in use as you move down the ranking. There's still no solid explanation for that!
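Counting "sites using" a header boils down to a case-insensitive check of the response header names, since HTTP header names are case-insensitive. This is a minimal sketch, not the crawler's actual code: it takes (name, value) pairs, the shape returned by `http.client.HTTPResponse.getheaders()`, and reports which of the headers listed in the crawl summary are present. The `TRACKED_HEADERS` tuple and the function name are hypothetical; the header names themselves simply mirror that summary.

```python
from typing import Iterable, Set, Tuple

# Header names mirrored from the "Sites using ..." lines of the crawl summary.
TRACKED_HEADERS = (
    "content-security-policy",
    "content-security-policy-report-only",
    "x-webkit-csp",
    "x-content-security-policy",
    "public-key-pins",
    "public-key-pins-report-only",
    "x-content-type-options",
    "x-frame-options",
    "x-xss-protection",
    "x-download-options",
    "x-permitted-cross-domain-policies",
    "access-control-allow-origin",
)


def tracked_headers_present(headers: Iterable[Tuple[str, str]]) -> Set[str]:
    """Return the tracked header names present in a response (case-insensitive)."""
    seen = {name.strip().lower() for name, _value in headers}
    return {name for name in TRACKED_HEADERS if name in seen}


if __name__ == "__main__":
    example = [
        ("Server", "nginx"),
        ("X-Frame-Options", "SAMEORIGIN"),
        ("x-xss-protection", "1; mode=block"),
    ]
    print(sorted(tracked_headers_present(example)))
```

With a live response you could pass `resp.getheaders()` from a `urllib.request.urlopen` call, which returns an `http.client.HTTPResponse` for HTTP(S) URLs.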

Let's Encrypt

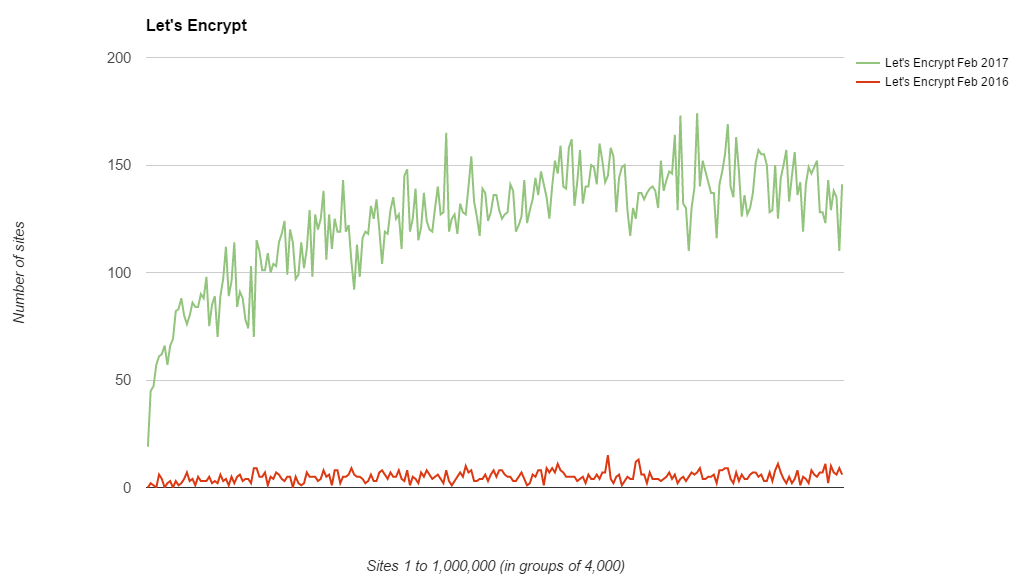

A year ago I also added code to track the usage of Let's Encrypt as a CA when I was performing the crawl. At the time they had a pretty small presence, but that has changed, a lot!

The lower usage at the top end is fairly expected, as the really large sites probably have commercial agreements with the 'big' CAs, but with growth like that I look forward to seeing what Let's Encrypt can do in the next 6 months when the August 2017 results come in!
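Spotting a Let's Encrypt certificate comes down to inspecting the issuer of the leaf. How the crawler does this isn't described here, so this is only a sketch using Python's `ssl` module: `getpeercert()` returns the issuer as a tuple of RDNs, and the check assumes matching the issuer's `organizationName` against "Let's Encrypt" is enough. The helper names are hypothetical, and `fetch_cert` needs network access.

```python
import socket
import ssl
from typing import Any, Dict, Optional


def issuer_field(cert: Dict[str, Any], field: str) -> Optional[str]:
    """Look up one attribute (e.g. 'commonName') in a getpeercert() issuer."""
    # getpeercert() returns the issuer as a tuple of RDNs,
    # each RDN being a tuple of (attribute, value) pairs.
    for rdn in cert.get("issuer", ()):
        for attribute, value in rdn:
            if attribute == field:
                return value
    return None


def is_lets_encrypt(cert: Dict[str, Any]) -> bool:
    """Assumption: an issuer organizationName of "Let's Encrypt" identifies LE."""
    return issuer_field(cert, "organizationName") == "Let's Encrypt"


def fetch_cert(host: str, port: int = 443, timeout: float = 10.0) -> Dict[str, Any]:
    """Fetch the validated leaf certificate for host (requires network access)."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()
```

Usage would look like `is_lets_encrypt(fetch_cert("example.com"))`, and `issuer_field(cert, "commonName")` gives the intermediate's name, such as "Let's Encrypt Authority X3".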

Crawler overhaul and regular scans

I've tweaked and improved the crawler each time I've run one of these scans, adding new features and making it more efficient. Over the Christmas break, though, I wanted to take it up a notch, so I completely rewrote my crawler with the intention of running a crawl of the Alexa Top 1 Million every day. Since December I've been refining and improving this process and I'm now reliably crawling the entire 1 million sites and storing the data every single day! The crawlers log basically everything they do to a MySQL database, and once they've all finished, a nice summary of the crawl is produced for me:

Total Rows: 938133

Security Headers Grades:
A 1763
A+ 678
B 26910
C 716
D 56509
E 81973
F 769504
R 80

Sites using content-security-policy: 11010
Sites using content-security-policy-report-only: 1435
Sites using x-webkit-csp: 368
Sites using x-content-security-policy: 882
Sites using public-key-pins: 501
Sites using public-key-pins-report-only: 74
Sites using x-content-type-options: 90333
Sites using x-frame-options: 95774
Sites using x-xss-protection: 71966
Sites using x-download-options: 6952
Sites using x-permitted-cross-domain-policies: 6935
Sites using access-control-allow-origin: 27840
Sites redirecting to HTTPS: 187245
Sites using Let's Encrypt certificate: 31032

Top 10 Server headers:
Apache 206396
nginx 138860
cloudflare-nginx 86344
Microsoft-IIS/7.5 36266
Microsoft-IIS/8.5 31501
LiteSpeed 20643
GSE 18443
nginx/1.10.2 14625
nginx/1.10.3 13944
Apache/2.2.15 (CentOS) 13013

Top 10 TLDs:
.com 459161
.net 49756
.ru 48977
.org 45171
.de 24226
.jp 18958
.uk 15296
.br 13627
.ir 13352
.in 12784

Top 10 Certificate Issuers:
Let's Encrypt Authority X3 31030
COMODO RSA Domain Validation Secure Server CA 27372
COMODO ECC Domain Validation Secure Server CA 2 19094
Go Daddy Secure Certificate Authority - G2 18606
RapidSSL SHA256 CA 8991
GeoTrust SSL CA - G3 4876
AlphaSSL CA - SHA256 - G2 4404
Symantec Class 3 Secure Server CA - G4 4271
Symantec Class 3 EV SSL CA - G3 4200
RapidSSL SHA256 CA - G3 4067

Top 10 Protocols:
TLSv1.2 171723
TLSv1 7945
TLSv1.1 208
SSLv3 1
NULL 0

Top 10 Cipher Suites:
ECDHE-RSA-AES256-GCM-SHA384 75448
ECDHE-RSA-AES128-GCM-SHA256 50357
ECDHE-ECDSA-AES128-GCM-SHA256 19963
ECDHE-RSA-AES256-SHA384 11152
DHE-RSA-AES256-GCM-SHA384 3631
DHE-RSA-AES256-SHA 3036
ECDHE-RSA-AES256-SHA 2538
AES256-SHA256 1882
AES128-SHA 1872
AES256-SHA 1843

Top 10 PFS Key Exchange Params:
ECDH, P-256, 256 bits 154384
DH, 1024 bits 6059
ECDH, P-521, 521 bits 3508
ECDH, P-384, 384 bits 3291
DH, 2048 bits 1172
DH, 4096 bits 159
ECDH, B-571, 570 bits 50
ECDH, brainpoolP512r1, 512 bits 4
DH, 768 bits 3
DH, 3072 bits 2

Top 10 Key Sizes:
RSA 2048 bit 146817
ECDSA 256 bit 20046
RSA 4096 bit 11233
RSA 1024 bit 237
RSA 3072 bit 86
ECDSA 384 bit 55
RSA 8192 bit 8
RSA 3248 bit 5
RSA 2049 bit 4
RSA 4056 bit 3
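Summary figures like these are the sort of thing a handful of `GROUP BY` queries produce. The crawler's real MySQL schema isn't described here, so this sketch uses a hypothetical single `crawl` table (columns `site`, `grade`, `server`, `tld`) and Python's built-in `sqlite3` as a stand-in for MySQL so it runs anywhere; the rows are made-up examples, not crawl data.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical schema; the real crawler logs to MySQL with its own tables.
SCHEMA = "CREATE TABLE crawl (site TEXT PRIMARY KEY, grade TEXT, server TEXT, tld TEXT)"
# Identifiers can't be bound as ? parameters, so only whitelisted columns are interpolated.
ALLOWED_COLUMNS = {"grade", "server", "tld"}


def top_n(conn: sqlite3.Connection, column: str, n: int = 10) -> List[Tuple[str, int]]:
    """Most common values of `column`, like the 'Top 10 ...' lists in the summary."""
    if column not in ALLOWED_COLUMNS:
        raise ValueError(f"unexpected column: {column}")
    sql = (
        f"SELECT {column}, COUNT(*) AS c FROM crawl "
        f"WHERE {column} IS NOT NULL "
        f"GROUP BY {column} ORDER BY c DESC, {column} LIMIT ?"
    )
    return conn.execute(sql, (n,)).fetchall()


def demo_connection() -> sqlite3.Connection:
    """In-memory database holding a few made-up rows for illustration."""
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT INTO crawl VALUES (?, ?, ?, ?)",
        [
            ("a.example.com", "F", "Apache", ".com"),
            ("b.example.net", "F", "nginx", ".net"),
            ("c.example.com", "A", "nginx", ".com"),
            ("d.example.org", "D", "Apache", ".org"),
            ("e.example.com", "F", "Apache", ".com"),
        ],
    )
    return conn


if __name__ == "__main__":
    conn = demo_connection()
    total = conn.execute("SELECT COUNT(*) FROM crawl").fetchone()[0]
    print("Total Rows:", total)
    for value, count in top_n(conn, "grade"):
        print(value, count)
```

Ties are broken alphabetically so the output is stable from run to run.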

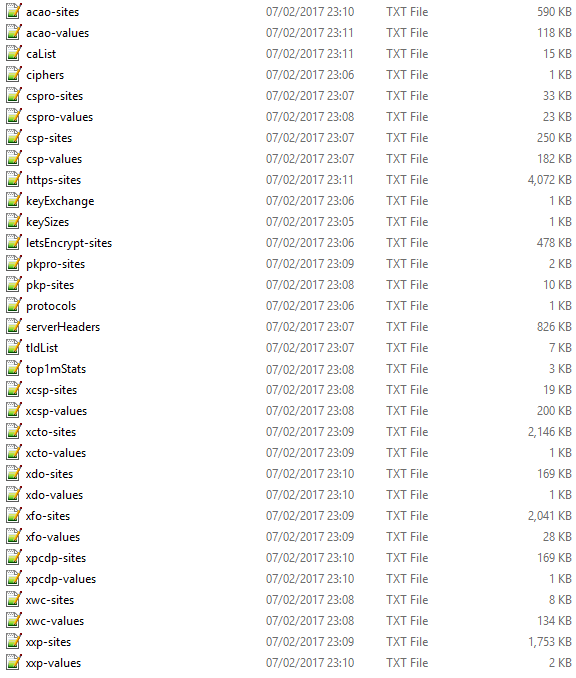

Alongside this summary I get individual files listing every site that uses each feature, like CSP, and their configurations, so I can see what people are commonly configuring.

For example, csp-values.txt shows me the most popular CSP configurations at a glance, and the first few entries give an indication of what a lot of sites are using CSP for.

Values for content-security-policy:
upgrade-insecure-requests 838
frame-ancestors 'self' 377
frame-ancestors 'self' ; 286
frame-ancestors 'self'; 233
default-src https: data: 'unsafe-inline' 'unsafe-eval' 97
frame-ancestors 'none' 86
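Notice that `frame-ancestors 'self'`, `frame-ancestors 'self' ;` and `frame-ancestors 'self';` are counted separately even though they're the same policy written slightly differently. Here is a minimal sketch of folding such variants together before counting (the function names are hypothetical): split on `;`, collapse whitespace, drop empty directives and lowercase the directive name, since CSP directive names are case-insensitive. It's a cosmetic normalisation only; it doesn't reorder directives or source lists, so policies that differ only in ordering still count separately.

```python
from collections import Counter
from typing import Iterable, Tuple


def normalize_csp(value: str) -> str:
    """Cosmetic normalisation of a CSP header value (no reordering)."""
    directives = []
    for part in value.split(";"):
        tokens = part.split()  # also collapses runs of whitespace
        if not tokens:
            continue  # skip empty directives, e.g. from a trailing ";"
        tokens[0] = tokens[0].lower()  # directive names are case-insensitive
        directives.append(" ".join(tokens))
    return "; ".join(directives)


def merge_counts(rows: Iterable[Tuple[str, int]]) -> Counter:
    """Re-count (value, count) rows after normalising each value."""
    merged: Counter = Counter()
    for value, count in rows:
        merged[normalize_csp(value)] += count
    return merged


if __name__ == "__main__":
    # The three frame-ancestors variants from csp-values.txt above.
    rows = [
        ("frame-ancestors 'self'", 377),
        ("frame-ancestors 'self' ;", 286),
        ("frame-ancestors 'self';", 233),
    ]
    print(merge_counts(rows).most_common())
```

Merged this way, those three rows collapse into a single `frame-ancestors 'self'` entry with 896 sites (377 + 286 + 233), which would put it ahead of `upgrade-insecure-requests` at 838.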

The caList.txt file shows all of the certificate issuers I encountered during the crawl, identified by the name of the intermediate that signed the leaf certificate.

Certificate Issuers:
Let's Encrypt Authority X3 31030
COMODO RSA Domain Validation Secure Server CA 27372
COMODO ECC Domain Validation Secure Server CA 2 19094
Go Daddy Secure Certificate Authority - G2 18606
RapidSSL SHA256 CA 8991
GeoTrust SSL CA - G3 4876

As you can see, Let's Encrypt are now the largest single issuing intermediate in the Alexa Top 1 Million, but if you combine the counts for COMODO's RSA and ECC intermediates (27,372 + 19,094 = 46,466) then COMODO are the largest by count. I wonder how long that will last given the raging success Let's Encrypt are seeing?
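Rolling intermediates up into their CA brand is a small grouping step. This is a sketch, not how caList.txt is actually generated: the `BRAND_PREFIXES` mapping is hypothetical and only covers the names in the excerpt above, and any unrecognised intermediate simply keeps its own name.

```python
from collections import Counter
from typing import Dict

# Hypothetical prefix -> brand mapping, just enough for the names listed above.
BRAND_PREFIXES = (
    ("Let's Encrypt", "Let's Encrypt"),
    ("COMODO", "COMODO"),
    ("Go Daddy", "Go Daddy"),
    ("RapidSSL", "RapidSSL"),
    ("GeoTrust", "GeoTrust"),
)

# Issuer counts copied from the caList.txt excerpt above.
ISSUER_COUNTS: Dict[str, int] = {
    "Let's Encrypt Authority X3": 31030,
    "COMODO RSA Domain Validation Secure Server CA": 27372,
    "COMODO ECC Domain Validation Secure Server CA 2": 19094,
    "Go Daddy Secure Certificate Authority - G2": 18606,
    "RapidSSL SHA256 CA": 8991,
    "GeoTrust SSL CA - G3": 4876,
}


def brand_of(issuer: str) -> str:
    """Map an intermediate's name to a CA brand; unknown names pass through."""
    for prefix, brand in BRAND_PREFIXES:
        if issuer.startswith(prefix):
            return brand
    return issuer


def totals_by_brand(counts: Dict[str, int]) -> Counter:
    """Sum intermediate counts per brand."""
    totals: Counter = Counter()
    for issuer, count in counts.items():
        totals[brand_of(issuer)] += count
    return totals


if __name__ == "__main__":
    for brand, total in totals_by_brand(ISSUER_COUNTS).most_common():
        print(brand, total)
```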

Opening up the data

Just like all of my previous scans, I've put the data for this scan into my publicly available Google Doc spreadsheet, Alexa Top 1 Million Scan Results. That's a great reference that most people can use quickly and easily, but now that the crawler is running daily and logging a whole bunch of data, I wanted to open things up even more. To that end I'm making available a zip file containing the entire output from a crawl, specifically the one this blog is based upon, from the 7th Feb 2017. The file is pretty large, weighing in at 1.2GB compressed, but it contains everything!

Download Raw Data

Data for all scans is now available here.

I'm opening it up under the same license as my blog and I'd love to see what else people can do with it. There is a lot of data in there, and I'm sure there are all kinds of interesting things people could do with just some basic SQL query skills. Please keep me apprised in the comments below if you do anything awesome with it. As I'm running these scans daily now, I could also do with a good solution for hosting the data if there's demand for access to it. Right now it rsyncs down to my local NAS server at home, where I have a lot of free space, but I can't host it publicly from there for easy access. At ~1.2GB per day it's an interesting problem, but I'm happy to open this data up to the world, so if you can help out with hosting it, please get in touch.
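For anyone weighing up hosting, the rough arithmetic is simple: at ~1.2GB of compressed output per day, a year of daily crawls comes to roughly 438GB. A quick sketch, assuming the daily archive size stays roughly constant; the function name is hypothetical.

```python
def storage_estimate_gb(per_day_gb: float, days: int) -> float:
    """Total storage for `days` daily archives of `per_day_gb` each."""
    return per_day_gb * days


DAILY_ARCHIVE_GB = 1.2  # approximate compressed size of one day's crawl

if __name__ == "__main__":
    for label, days in (("30 days", 30), ("1 year", 365)):
        print(f"{label}: ~{storage_estimate_gb(DAILY_ARCHIVE_GB, days):.0f} GB")
```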