Last week I published my Alexa Top 1 Million crawl for August 2018, and there was some really interesting data in there along with several new metrics covered in the report. Over the months I've been able to use my crawler data for further analysis outside the scope of the report, and I wanted to cover that here.

August 2018

You can read the August 2018 report, which contains all of the 'official' analysis, but this blog post is going to focus more on other things you can do with the data. I publish all of the raw data on https://crawler.ninja, so you can get the details there, and I'd like to encourage further analysis. The things in this blog post aren't covered in my reports, but they're the kind of additional analysis I'd be interested in seeing done with the data. Perhaps you can think of something I haven't and would like to use the data yourself.

Certificate Expiry

The crawler already looks at and publishes data on a variety of certificate-related metrics, like public key type and size, along with who issued the certificate so I can track CA usage in the top 1 million. Recently I decided to add a check for how long is left until the certificate expires, with some quite surprising results. I created 4 lists, and when I'm parsing the certificates I record entries for certificates that had already expired at the time of the check, certificates that expire in the next 24 hours, certificates that expire in the next 3 days and certificates that expire in the next 7 days.

https://crawler.ninja/files/certs-expired.txt

https://crawler.ninja/files/certs-expiring-one-day.txt

https://crawler.ninja/files/certs-expiring-three-days.txt

https://crawler.ninja/files/certs-expiring-seven-days.txt

The lists are updated every day, so the numbers will of course fluctuate, but at the time of writing there are 388 sites serving an expired cert(!), 186 sites with a certificate that expires within 24 hours and 471 with one that expires within 3 days.
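A minimal sketch of how that bucketing could work, in Python. It assumes each certificate goes into the narrowest list that applies (the post doesn't say whether the lists overlap), and the function names are hypothetical rather than the crawler's actual code. `ssl.cert_time_to_seconds` is the standard-library helper for the `notAfter` format that `getpeercert()` returns.

```python
import ssl
from datetime import datetime, timedelta, timezone
from typing import Optional

# Upper limits for the three "expiring soon" lists, narrowest first.
EXPIRY_WINDOWS = [
    ("one-day", timedelta(days=1)),
    ("three-days", timedelta(days=3)),
    ("seven-days", timedelta(days=7)),
]


def not_after_to_datetime(not_after: str) -> datetime:
    """Convert a notAfter string such as 'Sep  1 00:00:00 2018 GMT' (the format
    returned by ssl.SSLSocket.getpeercert()) into an aware UTC datetime."""
    return datetime.fromtimestamp(ssl.cert_time_to_seconds(not_after), tz=timezone.utc)


def classify_expiry(not_after: datetime, now: datetime) -> Optional[str]:
    """Return the narrowest list a certificate belongs in, or None if it has
    more than 7 days left. Assumes each certificate goes in a single list."""
    remaining = not_after - now
    if remaining <= timedelta(0):
        return "expired"
    for name, window in EXPIRY_WINDOWS:
        if remaining <= window:
            return name
    return None


now = datetime(2018, 9, 1, tzinfo=timezone.utc)
print(classify_expiry(not_after_to_datetime("Aug 31 00:00:00 2018 GMT"), now))  # expired
print(classify_expiry(datetime(2018, 9, 3, tzinfo=timezone.utc), now))  # three-days
```

If the published lists turn out to be cumulative, returning every matching window instead of the first one would be the only change needed.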

X-Powered-By

As regular users of Security Headers will know, the X-Powered-By header regularly leaks information about the software you're running on your server, often including the exact version. Because the crawler scoops up all of the HTTP response headers that sites return, we can analyse exactly what sites are running on (at least those that divulge this information). Here are the top 10 X-Powered-By headers:

| X-Powered-By | Count |
|---|---|
| ASP.NET | 69759 |
| PleskLin | 25130 |
| PHP/5.6.37 | 21240 |
| PHP/5.4.45 | 16199 |
| PHP/5.6.36 | 12789 |
| PHP/5.3.29 | 10297 |
| PHP/5.5.38 | 9178 |
| PHP/7.0.31 | 8604 |
| PHP/5.6.30 | 8142 |
| PHP/5.3.3 | 7292 |

There's a lot of ASP.NET in there, and it's the single largest entry in the list, but it doesn't specify a version in the header so all of those sites are grouped together. PHP, on the other hand, is broken out into specific versions, so looking at the rest of the top 10 I think it's fair to say that PHP is probably a lot more common than both ASP.NET and the second entry, PleskLin. Here's the list of all distinct X-Powered-By headers if you want to take a look at the whole thing.

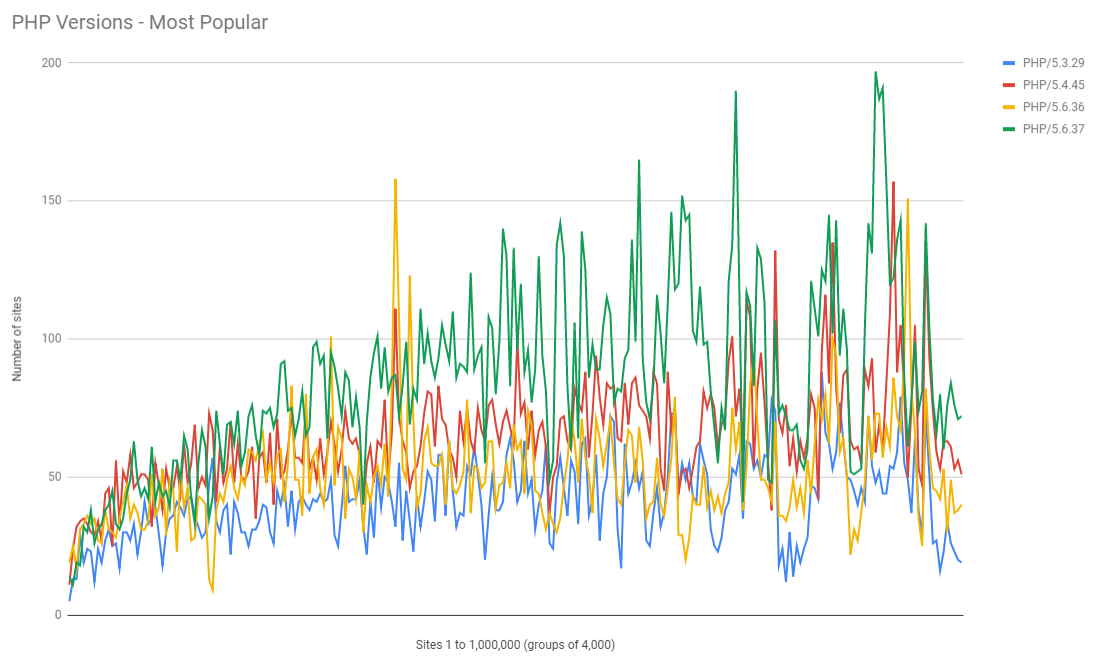
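Because ASP.NET is a single entry while PHP is split out by release, one way to compare them fairly is to fold the PHP entries together. A minimal Python sketch, assuming the distinct-header list has been loaded into a mapping of header value to count (the loading step and the function name are hypothetical). Applied to just the top 10 above, it shows PHP already outnumbering ASP.NET:

```python
import re
from collections import Counter
from typing import Mapping

# Matches e.g. "PHP/5.6.37" and captures the major and minor version numbers.
PHP_VERSION = re.compile(r"PHP/(\d+)\.(\d+)")


def php_by_minor(header_counts: Mapping[str, int]) -> Counter:
    """Fold exact X-Powered-By values into PHP major.minor families."""
    families: Counter = Counter()
    for value, count in header_counts.items():
        # A combined header such as "PHP/5.6.37, PleskLin" can list several products.
        for token in value.split(","):
            match = PHP_VERSION.match(token.strip())
            if match:
                families[f"{match.group(1)}.{match.group(2)}"] += count
    return families


TOP_10 = {
    "ASP.NET": 69759, "PleskLin": 25130, "PHP/5.6.37": 21240, "PHP/5.4.45": 16199,
    "PHP/5.6.36": 12789, "PHP/5.3.29": 10297, "PHP/5.5.38": 9178,
    "PHP/7.0.31": 8604, "PHP/5.6.30": 8142, "PHP/5.3.3": 7292,
}

families = php_by_minor(TOP_10)
print(families.most_common())
print(sum(families.values()), "vs", TOP_10["ASP.NET"])  # 93741 vs 69759
```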

Looking at the different versions of PHP in that list, it was sad to see old versions of PHP in such high numbers. PHP 5.3 stopped receiving support in August 2014, PHP 5.4 in September 2015, PHP 5.5 in July 2016, and PHP 5.6 loses support very soon, in December 2018! That's a lot of outdated versions in quite high numbers... I decided to check whether there was any link between the rank of a site and its use of particular PHP versions. Here are the 4 most popular PHP versions in use:

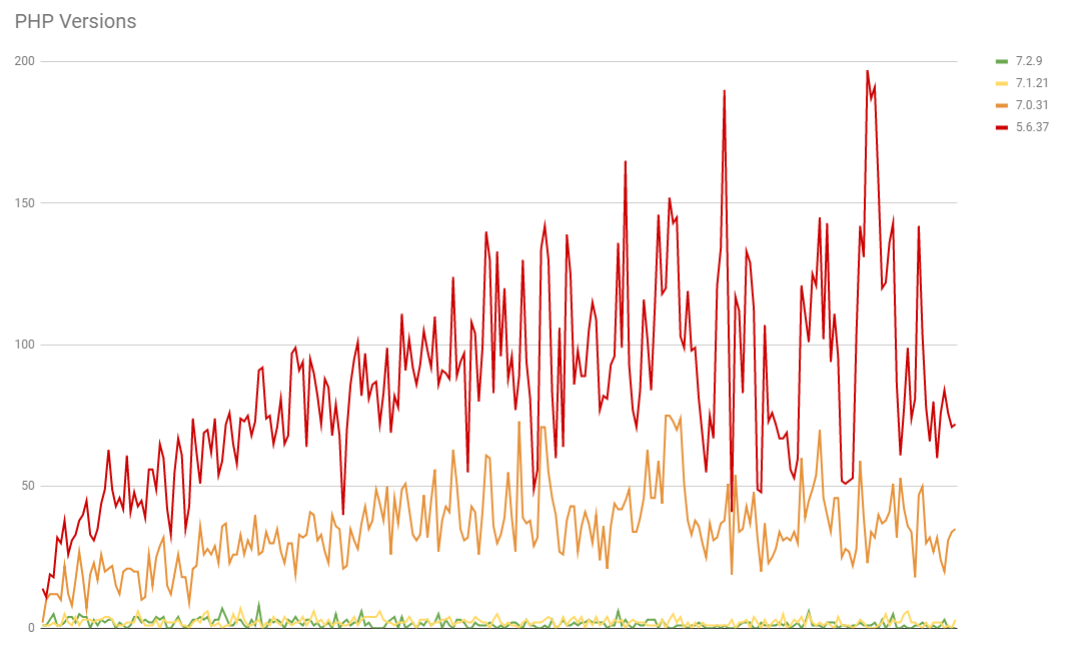

You can see that, in general, the use of PHP increases as we move further down the top 1 million, and that the older versions do have less presence, which is at least some good news! Now here are the last releases for each minor version.

The use of PHP seems really low at the top end of the scale. This could be because PHP isn't used there or because X-Powered-By headers are stripped, but overall we can see that the older versions still far outweigh the newer ones and that the problem gets worse as we go down the ranking.
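The rank charts come from grouping header values by where each site sits in the ranking. A minimal Python sketch of that grouping, assuming the crawl rows are available as (rank, X-Powered-By) pairs and using fixed buckets of 100,000 sites. Both the bucket size and the function name are illustrative; the charts above may use different intervals.

```python
import re
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

# Captures the full version after "PHP/", e.g. "5.6.37".
PHP_VERSION = re.compile(r"PHP/(\d+(?:\.\d+)*)")


def php_versions_by_rank(
    rows: Iterable[Tuple[int, str]], bucket_size: int = 100_000
) -> Dict[int, Counter]:
    """Count PHP versions per rank bucket.

    rows holds (alexa_rank, x_powered_by) pairs. Buckets are keyed by their
    first rank, so with the default size ranks 1-100,000 map to 1 and
    ranks 100,001-200,000 map to 100001.
    """
    buckets: Dict[int, Counter] = defaultdict(Counter)
    for rank, powered_by in rows:
        for token in powered_by.split(","):
            match = PHP_VERSION.match(token.strip())
            if match:
                start = (rank - 1) // bucket_size * bucket_size + 1
                buckets[start][match.group(1)] += 1
    return dict(buckets)


sample = [(1, "PHP/5.6.37"), (150_000, "PHP/5.4.45, PleskLin"), (250_000, "ASP.NET")]
print(php_versions_by_rank(sample))
# {1: Counter({'5.6.37': 1}), 100001: Counter({'5.4.45': 1})}
```

Buckets with no PHP sites are simply absent, so a chart built from this would need to fill those gaps with zeros.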

X-Aspnet-Version

Having focused on PHP, let's switch back to ASP.NET, the other main entry in that list, and look at the X-Aspnet-Version header, which does include specific version numbers. Here are the top 10 values for the header:

| X-Aspnet-Version | Count |
|---|---|
| 4.0.30319 | 39116 |
| 2.0.50727 | 4473 |
| [empty] | 555 |
| 0 | 81 |
| 1.1.4322 | 76 |
| XXXXXXXXXX | 29 |
| v1.1.1 | 14 |
| 7.91424E+17 | 6 |
| N/A | 5 |
| XXXXXXXXXX | 4 |

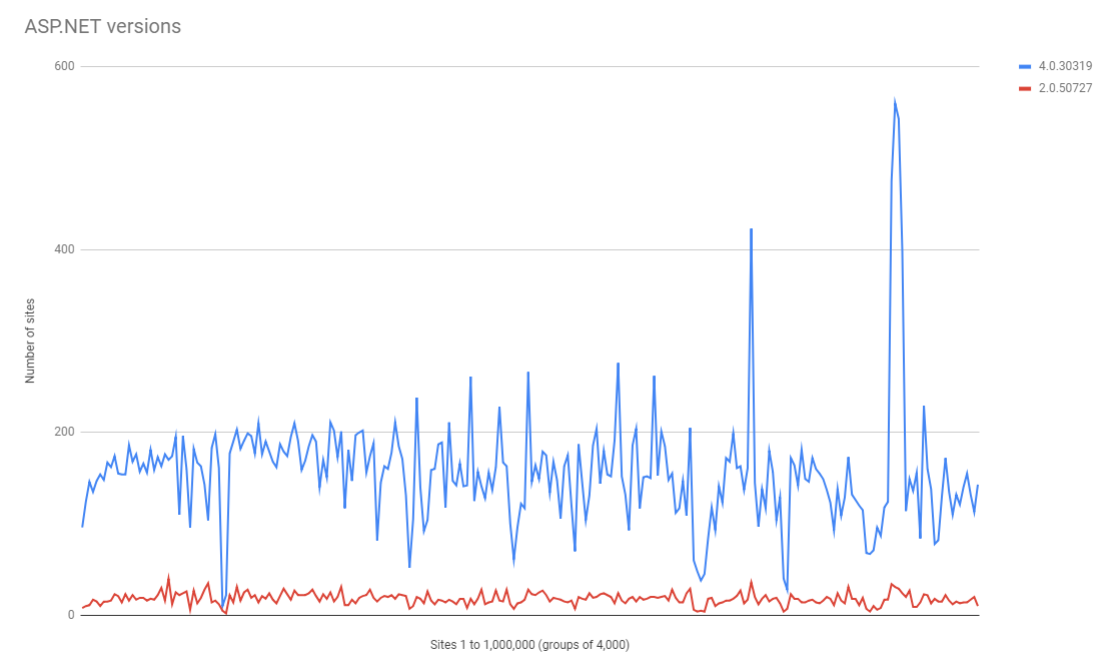

We can see that the vast majority of sites setting this header report either 4.0.30319 or 2.0.50727 as the version, and the 3rd entry in the list is a blank value. Let's take a look at the distribution of those 2 main versions across the top 1 million sites.

This metric breaks the usual trends and has a fairly flat distribution. There is a significant drop in the top 4,000 sites, but after that the use of the higher version declines only slightly across the entire top 1 million.
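Since the long tail of that list is mostly junk (blank values, 0, N/A, redacted strings), it can help to normalise values before charting them. A minimal Python sketch, assuming the counts are loaded into a mapping of header value to count (the function names are hypothetical). It maps the build numbers ASP.NET reports to the CLR they belong to (4.0.30319 covers all of .NET Framework 4.x, 2.0.50727 covers 2.0 to 3.5) and lumps everything else together:

```python
from collections import Counter
from typing import Mapping

# Values that ASP.NET is known to emit, mapped to the runtime family they indicate.
KNOWN_VERSIONS = {
    "4.0.30319": "CLR 4 (.NET Framework 4.x)",
    "2.0.50727": "CLR 2 (.NET Framework 2.0 to 3.5)",
    "1.1.4322": ".NET Framework 1.1",
}


def classify_aspnet(value: str) -> str:
    """Map a raw X-Aspnet-Version value to a runtime family, or 'unrecognised'."""
    return KNOWN_VERSIONS.get(value.strip(), "unrecognised")


def tally_aspnet(header_counts: Mapping[str, int]) -> Counter:
    """Sum counts per runtime family."""
    totals: Counter = Counter()
    for value, count in header_counts.items():
        totals[classify_aspnet(value)] += count
    return totals


totals = tally_aspnet({"4.0.30319": 39116, "2.0.50727": 4473, "": 555})
clr4 = totals["CLR 4 (.NET Framework 4.x)"]
clr2 = totals["CLR 2 (.NET Framework 2.0 to 3.5)"]
print(f"{clr4 / (clr4 + clr2):.1%}")  # 89.7%
```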

Number of redirects

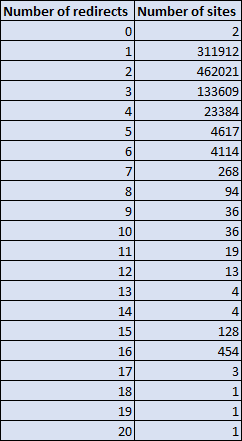

The crawler stores the headers for the entire redirect chain, so we can also look at the state of HTTP redirects. The crawler starts at http://[domain] and follows redirects until one of three things happens: it ends up somewhere that returns an HTTP 200 (if all goes well), it hits 20 redirects and gives up, or some error occurs. The average number of redirects we see per site is 1.9, but there are certainly some outliers.
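A minimal Python sketch of that loop using only the standard library. The 20-redirect limit and the http://[domain] starting point come from the description above; everything else (the function names, which status codes count as redirects, treating a missing Location header as a stop) is an assumption for illustration, not the crawler's actual code. The fetcher is passed in so the logic can be exercised without the network:

```python
import http.client
from typing import Callable, Dict, List, Tuple
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = {301, 302, 303, 307, 308}
# A fetcher takes a URL and returns (status code, headers with lowercase names).
Fetcher = Callable[[str], Tuple[int, Dict[str, str]]]


def http_fetch(url: str, timeout: float = 10.0) -> Tuple[int, Dict[str, str]]:
    """Make a single GET request without following redirects."""
    parts = urlsplit(url)
    conn_cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(parts.netloc, timeout=timeout)
    path = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, {name.lower(): value for name, value in resp.getheaders()}
    finally:
        conn.close()


def follow_redirects(
    domain: str, fetch: Fetcher = http_fetch, max_redirects: int = 20
) -> Tuple[List[Tuple[str, int]], str]:
    """Start at http://<domain>/ and record every (url, status) in the chain."""
    url = f"http://{domain}/"
    chain: List[Tuple[str, int]] = []
    redirects = 0
    while True:
        try:
            status, headers = fetch(url)
        except Exception as exc:  # DNS failure, timeout, TLS error, etc.
            return chain, f"error: {exc}"
        chain.append((url, status))
        if status == 200:
            return chain, "ok"
        if status not in REDIRECT_CODES:
            return chain, f"stopped on HTTP {status}"
        redirects += 1
        if redirects >= max_redirects:
            return chain, f"gave up after {max_redirects} redirects"
        location = headers.get("location")
        if not location:
            return chain, "redirect without a Location header"
        url = urljoin(url, location)  # Location may be relative
```

Counting the redirect responses in each returned chain would give a per-site figure of the kind the 1.9 average describes.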

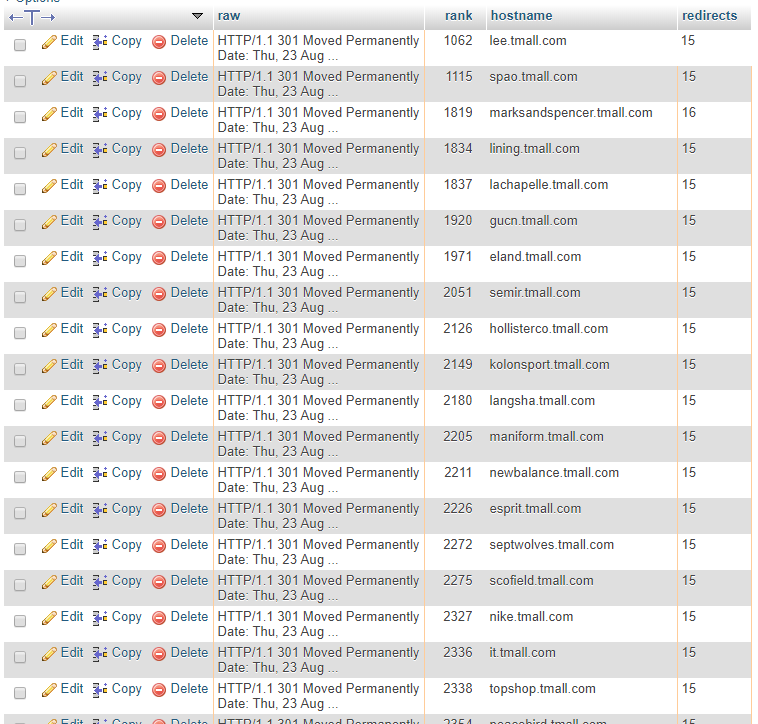

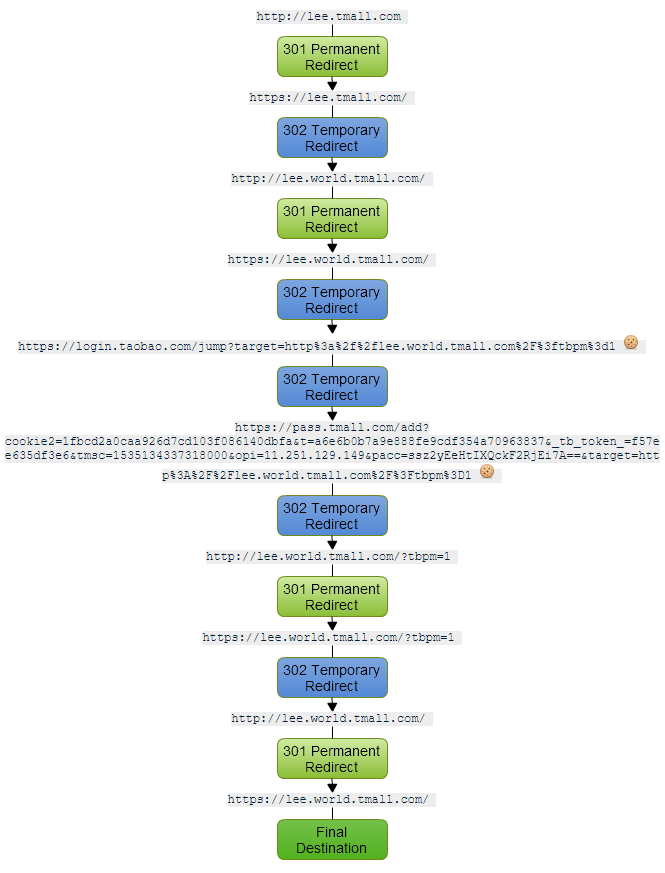

The vast majority of sites have 1-3 redirects, with a small portion on 4 redirects too. After that the numbers drop off quite quickly, but there's a spike in sites that have 15 and 16 redirects! That seemed a bit crazy so I had to check it out, and it does look like the data is right. Basically all of these sites are subdomains of another domain, in the form *.tmall.com.

There is slightly different behaviour depending on where or how I scan the domains; some online services show only 10 or even 8 redirects in the chain, for example!
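Turning per-site redirect counts into a distribution like the one above, and then finding who is behind a spike, could look something like this minimal Python sketch. It assumes the counts have already been extracted into a mapping of domain to number of redirects; the sample data (including the hypothetical tmall.com subdomains), the function names and the 15-redirect threshold are illustrative only:

```python
from collections import Counter
from statistics import mean
from typing import Mapping, Tuple


def redirect_summary(redirects: Mapping[str, int]) -> Tuple[Counter, float]:
    """Histogram of redirect counts plus the mean redirects per site."""
    return Counter(redirects.values()), mean(redirects.values())


def outlier_parents(redirects: Mapping[str, int], threshold: int = 15) -> Counter:
    """Group sites with long chains by their last two DNS labels.

    Taking the last two labels is a simplification: it mislabels suffixes
    like .co.uk, where the Public Suffix List would be needed.
    """
    parents: Counter = Counter()
    for domain, count in redirects.items():
        if count >= threshold:
            parents[".".join(domain.split(".")[-2:])] += 1
    return parents


# Hypothetical sample, not crawl data.
sample = {"a.example": 1, "b.example": 2, "c.example": 3,
          "shop1.tmall.com": 15, "shop2.tmall.com": 16, "x.example.org": 16}
histogram, average = redirect_summary(sample)
print(sorted(histogram.items()))  # [(1, 1), (2, 1), (3, 1), (15, 1), (16, 2)]
print(outlier_parents(sample))    # Counter({'tmall.com': 2, 'example.org': 1})
```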

Status Codes

Another thing I wanted to look at was the status codes the crawler was getting back. Every HTTP request the crawler makes, including those during redirects, is logged, so I can take a look at all of them. Here are the top 10 status codes and messages:

| Count | Status line |
|---|---|
| 522751 | HTTP/1.1 301 Moved Permanently |
| 289209 | HTTP/1.1 200 OK |
| 69379 | HTTP/1.1 302 Found |
| 13674 | HTTP/1.1 302 Moved Temporarily |
| 5493 | HTTP/1.1 404 Not Found |
| 5360 | HTTP/1.1 403 Forbidden |
| 5139 | HTTP/1.0 302 Found |
| 4970 | HTTP/1.0 301 Moved Permanently |
| 4383 | HTTP/1.1 302 Redirect |
| 2151 | HTTP/1.1 302 Object moved |

At the top of the list they all seem relatively OK, but as you move further down the list (the full list is available here) there are some odd entries. For example, there were 48 instances of 201 Created, which is odd given that all I'm doing is making an HTTP GET request. There are also several variations of being unauthorised, rate-limited and challenged for authentication. The most interesting thing is the huge number of sites that seem to be delivering the redirect to their www subdomain in the status line itself rather than in a Location header:

| Count | Status line |
|---|---|
| 1 | HTTP/1.1 302 http://www.unosantafe.com.ar/ |
| 1 | HTTP/1.1 302 http://www.unosanrafael.com.ar/ |
| 1 | HTTP/1.1 302 http://www.unoentrerios.com.ar/ |
| 1 | HTTP/1.1 302 http://www.tooldex.com/ |
| 1 | HTTP/1.1 302 http://www.southernglazers.com |
| 1 | HTTP/1.1 302 http://www.site44.com/ |
| 1 | HTTP/1.1 302 http://www.singularcdn.com.br/ |
| 1 | HTTP/1.1 302 http://www.senasa.gob.ar/ |
| 1 | HTTP/1.1 302 http://www.sanjuan8.com/ |
| 1 | HTTP/1.1 302 http://www.primiciasya.com/ |

That's just a few, but a lot of sites are doing this; other than that, there are just 1 or 2 crazy items scattered throughout the list.
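Because the same status code shows up with different HTTP versions and reason phrases, it can be useful to split each status line into its parts before counting. A minimal Python sketch, assuming the logged status lines are available as a mapping of line to count (the names are hypothetical). It merges the variants per code and flags reason phrases that are really URLs:

```python
import re
from collections import Counter
from typing import Mapping, NamedTuple, Optional

# HTTP version, a space, a 3-digit status code, then an optional reason phrase.
STATUS_LINE = re.compile(r"^(HTTP/\d(?:\.\d)?) (\d{3})(?: (.*))?$")


class StatusLine(NamedTuple):
    version: str
    code: int
    reason: str


def parse_status_line(line: str) -> Optional[StatusLine]:
    """Split 'HTTP/1.1 302 Found' into its parts, or return None if malformed."""
    match = STATUS_LINE.match(line.strip())
    if not match:
        return None
    return StatusLine(match.group(1), int(match.group(2)), match.group(3) or "")


def tally_by_code(line_counts: Mapping[str, int]) -> Counter:
    """Merge status lines that differ only in HTTP version or reason phrase."""
    totals: Counter = Counter()
    for line, count in line_counts.items():
        parsed = parse_status_line(line)
        if parsed:
            totals[parsed.code] += count
    return totals


def reason_is_url(parsed: StatusLine) -> bool:
    """True for status lines like 'HTTP/1.1 302 http://www.example.com/'."""
    return parsed.reason.lower().startswith(("http://", "https://"))


sample = {"HTTP/1.1 302 Found": 69379, "HTTP/1.0 302 Found": 5139, "HTTP/1.1 302 Redirect": 4383}
print(tally_by_code(sample))  # Counter({302: 78901})
```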

Other ideas and possibilities

I'm sure there's a lot more that can be done with the data I make available; it just requires someone to think of it and have the time to do it. If you'd like me to add a new metric to the crawler, drop by the comments below and make a suggestion. Other things that could be done include:

Certificate Transparency

Logging all certificates to Certificate Transparency logs and checking the CT qualification of certificates. The certificate chain is stored in the raw data, so this could also be done historically using the old archives.

Certificates

Certificate chain validation (logging errors in the database) so I can detect what % of the top 1 million have chain errors. Right now the crawler could encounter all kinds of certificate errors, and it'd be good to log them and see whether any kinds of errors are particularly frequent.

TLSv1.3

This is something I'm already aware of and I'm definitely going to add to the crawler. I really need TLSv1.3 support so I can track adoption now that it's a standard, and I think it will be closely related to the HTTPS and certificates data anyway.

HTTP/2

Similar to TLSv1.3 above, I'm also going to add support for HTTP/2 at the same time and track its adoption too. Adding HTTP/2 support should already be possible; it's just a case of finding the time!
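Both of these can be detected from the same TLS handshake. A minimal Python sketch using the standard `ssl` module, assuming Python 3.7+ built against OpenSSL 1.1.1 (needed to negotiate TLSv1.3). The function names are hypothetical, and offering `h2` via ALPN only detects HTTP/2 over TLS, not cleartext h2c:

```python
import socket
import ssl
from typing import Dict, Iterable, Optional, Tuple


def probe(host: str, port: int = 443, timeout: float = 10.0) -> Tuple[Optional[str], Optional[str]]:
    """Return the negotiated TLS version and ALPN protocol for one host."""
    context = ssl.create_default_context()
    # Offering "h2" via ALPN is how clients discover HTTP/2 support over TLS.
    context.set_alpn_protocols(["h2", "http/1.1"])
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version(), tls.selected_alpn_protocol()


def adoption(results: Iterable[Tuple[Optional[str], Optional[str]]]) -> Dict[str, float]:
    """Fraction of successful probes that negotiated TLSv1.3 and h2."""
    results = list(results)
    if not results:
        return {"TLSv1.3": 0.0, "h2": 0.0}
    total = len(results)
    return {
        "TLSv1.3": sum(version == "TLSv1.3" for version, _ in results) / total,
        "h2": sum(alpn == "h2" for _, alpn in results) / total,
    }
```

With `create_default_context()`, a certificate problem raises `ssl.SSLCertVerificationError` during the handshake, so the same probe could also feed the chain-validation idea above by recording those errors instead of discarding them.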