My blog recently became the target of an orchestrated Denial of Service (DoS) attack using an HTTP GET flood. Aimed at generating huge amounts of load on the MySQL back end, it was very effective. As the attack ramped up, the sheer number of queries being executed caused the MySQL service to consume the remaining system RAM until it was terminated. Attacks like these can be a huge inconvenience, but fortunately, there are some simple steps that you can take to mitigate them.

The Attack

The first attack on my server took place on a Sunday night and I wasn't around to respond to it! Waking up on the Monday morning I was met with the standard WordPress error message "Error Establishing Database Connection". I thought something had crashed or gone wrong somewhere and after a quick check, I noticed that MySQL wasn't running. I fired it back up, up the site came and off to work I went. After a hectic Monday I didn't get time to look into it that evening. The attacker came back that night and within an hour had managed to knock the site offline again. Waking up on Tuesday morning I quickly brought the site back up and made plans to look into it. After some log trawling it quickly became apparent what was going on.

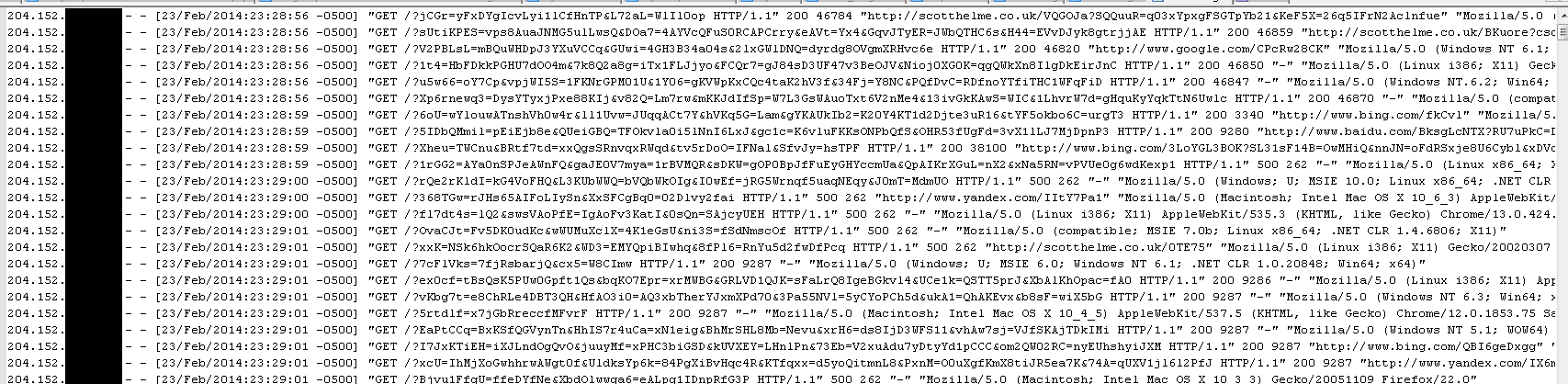

I was being hit with an HTTP GET flood. Whilst it isn't particularly sophisticated, an HTTP GET flood can be very effective. At the start of the attack I was being hit with an average of 4-5 hits per second on the index page. As you can see, each request has a unique parameter with it, and that's the crucial bit. I've used services like Blitz before to test how many hits per second my server can withstand, and 5 hits per second doesn't even come close to registering on the panic-o-meter. The only problem is that the hits generated by Blitz were for a small selection of pages, all of which could be cached. As every single one of these GET requests was unique, every single one of them bypassed the cache and resulted in a MySQL query too. This resulted in an unusually large amount of resources being consumed and, as the attack ramped up, so did my RAM consumption.
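To make the cache bypass concrete, here is a minimal sketch in Python. It assumes a page cache keyed on the full request URI, query string included, which is a common default but not necessarily how my own stack was configured. The `PageCache` class and the `x` parameter name are made up for illustration.

```python
from urllib.parse import urlsplit


class PageCache:
    """Toy full-page cache keyed on the complete request URI (assumption)."""

    def __init__(self):
        self.pages = {}
        self.backend_hits = 0

    def render(self, uri):
        # Stand-in for the expensive path: PHP plus the MySQL queries behind it.
        self.backend_hits += 1
        return f"<html>page for {urlsplit(uri).path}</html>"

    def get(self, uri):
        # Only a request for a URI seen before is served from the cache.
        if uri not in self.pages:
            self.pages[uri] = self.render(uri)
        return self.pages[uri]


# 100 requests for the same cacheable page.
normal = PageCache()
for _ in range(100):
    normal.get("/")

# 100 requests for the index page, each with a unique (hypothetical) parameter.
flood = PageCache()
for i in range(100):
    flood.get(f"/?x={i}")

print(normal.backend_hits, flood.backend_hits)  # prints "1 100"
```

The same page is being requested in both loops, but in the second one every request looks new to the cache, so every request reaches the back end.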

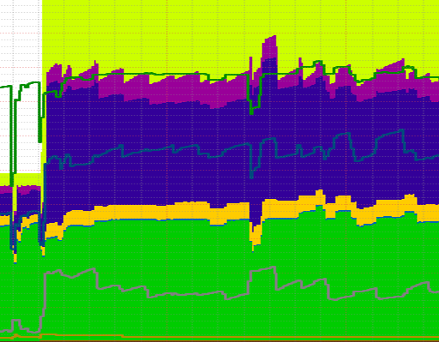

This graph shows my RAM usage on the server for the week of the attacks. The first 2 distinct dips on the left are the Sunday and Monday nights, when the MySQL service consumed too much RAM and was subsequently terminated. On the Tuesday I doubled up on the resources and this is represented by the large jump in the graph as the amount of RAM now available has increased. Over the next few nights the attacks came and went and the server managed to hold up. Around a week later I was hit with another, larger DoS attack which can be seen on the graph again. The steps I had taken to bolster my defences seem to have held up against the attack and the attackers haven't returned since.

Reporting Compromised Servers

One thing that I noticed about the IP addresses being used in the attacks was that they were all compromised web servers. With only a small handful of servers involved, I decided to contact the hosting providers to see if I could remove some of the weapons from the attacker's arsenal.

@QuadraNet your servers are being used in a DoS attack. Where do I report abuse?

— Scott Helme (@Scott_Helme) February 25, 2014

Most of the hosts I contacted throughout the attacks were very responsive in asking for logs to prove the DoS and then acting to clean up the affected servers. This seemed to help reduce the impact of the attacks and was costing the attacker each time he launched a DoS attack and exposed more compromised machines. He was losing assets each time he attempted to DoS me. Not only that, reporting compromised web servers to a hosting provider is always a good idea anyway. If I did nothing about it then those servers would go on to be used in other attacks, no doubt about it. Just think of it as doing your part to help clean up the Internet.
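When a host asks for logs, a per-IP tally of the offending requests is a handy summary to send along. The following is a rough sketch, assuming the access log uses the common/combined format. The `requests_per_ip` helper is hypothetical and the sample lines are invented, using documentation-range IP addresses rather than anything from my real logs.

```python
import re
from collections import Counter

# Start of a common/combined format line:
# client IP, identity, user, [timestamp], "METHOD URI PROTOCOL"
LINE = re.compile(r'^(\S+) \S+ \S+ \[([^\]]+)\] "(\S+) (\S+)[^"]*"')


def requests_per_ip(lines, method="GET", path="/"):
    """Count requests per client IP for one method and path, ignoring the query string."""
    counts = Counter()
    for line in lines:
        match = LINE.match(line)
        if not match:
            continue  # skip lines that are not in the expected format
        ip, _timestamp, verb, uri = match.groups()
        if verb == method and uri.split("?", 1)[0] == path:
            counts[ip] += 1
    return counts


# Invented sample lines for illustration only.
sample = [
    '203.0.113.7 - - [01/Jan/2000:01:00:00 +0000] "GET /?a=1 HTTP/1.1" 200 512 "-" "-"',
    '203.0.113.7 - - [01/Jan/2000:01:00:00 +0000] "GET /?b=2 HTTP/1.1" 200 512 "-" "-"',
    '198.51.100.9 - - [01/Jan/2000:01:00:01 +0000] "GET /?c=3 HTTP/1.1" 200 512 "-" "-"',
    '192.0.2.44 - - [01/Jan/2000:01:00:02 +0000] "GET /style.css HTTP/1.1" 200 900 "-" "-"',
]

top = requests_per_ip(sample).most_common()
print(top)  # [('203.0.113.7', 2), ('198.51.100.9', 1)]
```

In practice you would pass an open log file instead of `sample` and look at the first few entries of `most_common()`.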

Rate Limiting

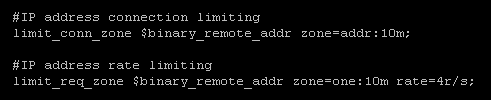

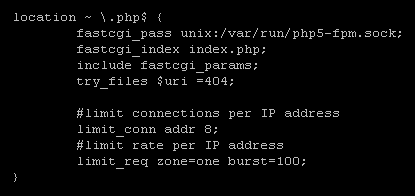

The next obvious step to defend myself was to apply some aggressive rate limiting to my traffic. Talk about closing the stable door after the horse has bolted! Fortunately, this is really easy in nginx and I'd advise anyone else to apply it before it's too late. Nginx offers 2 modules for this: ngx_http_limit_conn_module, which limits the number of connections per IP address, and ngx_http_limit_req_module, which limits the number of requests per IP.

The zones are defined in the nginx configuration and are the shared memory locations that will be used to store IP address data. A 10MB zone is enough to store data on tens of thousands of IP addresses and should be more than enough. If the zone storage is ever exhausted, the server will return a 503 to additional requests until there is free space. For my rate limiting per IP, I went with 4 requests per second. You can also define the limit as requests per minute using 240r/m, for example. This means that any given IP address is restricted to making 4 requests per second on average.

Moving on to the virtual hosts config file, there are a couple more additions needed. I've placed the rate limiting inside my PHP location block. If you place it at the top level, the rate limiting will apply to all requests made against the server, including image assets, JS and CSS files. I'm not too bothered about rate limiting those as the main bulk of the attack was aimed at consuming compute resources and not bandwidth. Besides, if you have an image heavy blog post, you don't want to be returning 503 errors because the rate limits were hit. By only throttling the requests to PHP, I can control the requests going to resource intensive tasks.

As you can see above I've allowed a burst of up to 100 requests outside of the average 4 requests per second limit. If a large office all receive a link via email at the same time I want to allow the sudden surge in traffic from the same IP, but if that surge is sustained it starts to look like an attack and will be throttled back.
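To get a feel for how an average rate of 4 requests per second and a burst of 100 interact, here is a simplified Python model of the leaky bucket approach that ngx_http_limit_req_module is documented as using. In nginx these numbers are written as `rate=4r/s` on `limit_req_zone` and `burst=100` on `limit_req`. This is a sketch, not nginx's actual implementation: it only decides accept or reject for a single IP, and it ignores the fact that nginx delays burst requests by default rather than passing them straight through. The `LeakyBucket` class and `count_allowed` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LeakyBucket:
    rate: float                   # average requests per second allowed
    burst: int                    # requests tolerated above the average rate
    excess: float = 0.0           # how far this client is currently over the rate
    last: Optional[float] = None  # time of the last accepted request

    def allow(self, now: float) -> bool:
        if self.last is None:
            self.last = now
            return True  # first request seen from this client
        drained = (now - self.last) * self.rate
        excess = max(0.0, self.excess - drained) + 1.0
        if excess > self.burst:
            return False  # over the burst allowance: this would be a 503
        self.excess = excess
        self.last = now
        return True


def count_allowed(arrival_times, rate=4.0, burst=100):
    bucket = LeakyBucket(rate=rate, burst=burst)
    return sum(bucket.allow(t) for t in arrival_times)


# An office opening the same link at once: 200 requests in the same instant.
surge = count_allowed([0.0] * 200)

# A well behaved client at exactly 4 requests per second for a minute.
steady = count_allowed([i * 0.25 for i in range(240)])

# A sustained 10 requests per second for a minute, which looks like an attack.
sustained = count_allowed([i * 0.1 for i in range(600)])

print(surge, steady, sustained)
```

In this model the instant surge gets 101 of its 200 requests through (the first request plus the burst of 100), the steady client is never rejected, and the sustained flood is let through at first but starts being rejected once the burst allowance has been used up.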

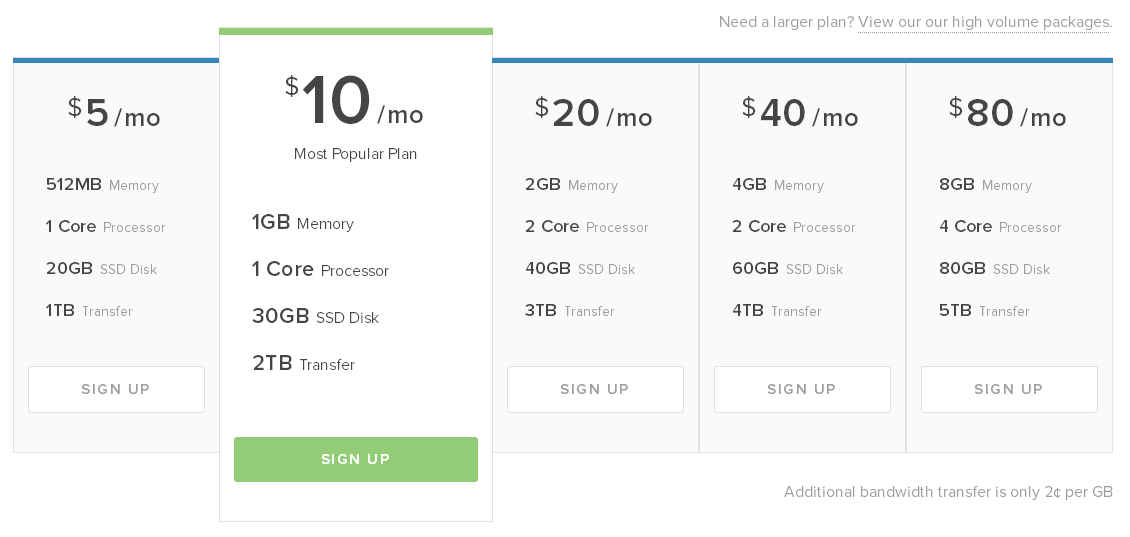

Increasing Resources

As my blog is hosted on a DigitalOcean (referral link) VPS, known as a droplet, I can very easily change the amount of resources at my disposal. As I was already on a fairly cheap package, there was no harm in doubling up on resources and strengthening my defences.

With twice the amount of processing power and memory at my disposal, the server should be much more capable of handling genuine surges in legitimate traffic and of holding up against subsequent malicious attacks. This hike in hardware performance means that the attacker would now need to come back with twice the attack force they had previously and, coupled with the aggressive rate limiting, they'd need to step it up by an order of magnitude again. At this point, I think it'd be more effective for them to try to saturate the network interface bandwidth instead.

Calling The Authorities

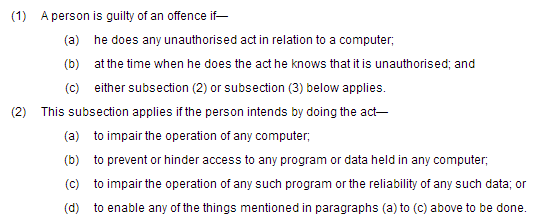

According to the provisions set out in the Computer Misuse Act 1990, the attacker was committing a criminal offence under Section 3, Subsection 1 and Subsection 2.

The attacker didn't have authorisation to launch the attack against my site and did so to impair the operation of my web server (computer) and hinder access to data (my blog) held on it. With this information I thought that contacting the authorities would be the best course of action. The attack on my site could have been only one action of the attacker. They could be involved in all sorts of other related crimes and the information I had in the form of my logs could potentially help an investigation. Not only that, no matter how small or minor, this is still a crime and as such should be reported. There have been plenty of headlines regarding hackers from Anonymous and LulzSec going to prison, and many more on cyber-crime in the UK being on the rise. I figured that reporting this should be easy enough and called the local police headquarters to ask for advice on how to proceed. Turns out they weren't so impressed with my call. Despite there being a law in place to criminalise such actions, no one that I spoke to knew anything about it or knew who or where to refer me to and, worst of all, no one took me seriously. At one point in the call I had serious concerns that I was going to be receiving a knock on my door from an officer to arrest me for wasting police time.

After much protesting and insisting that this was a genuine enquiry, I was advised to contact Action Fraud, who are "a central point of contact for information about fraud and financially motivated internet crime". This didn't really sound like the department I needed to speak to but I wasn't opposed to getting off the phone at this point! A quick call to Action Fraud confirmed my initial worries that they weren't the correct people to be speaking to. I suffered no direct financial loss as a result of the attack, I received no blackmail or ransom demands and had nothing of value stolen. Great, back to square one. Having spent a great deal of my spare time on the phone already, I had no crime reference number and nothing to show for my efforts. I wasn't about to go back through the whole process again and expect a different outcome. If anyone has any advice in this regard, please feel free to drop me a comment below.

Why Did They Do It?

The honest answer is, I have no idea. Just 3 days before the first attack, the 21st Feb, I published an article about 'My TLS conundrum and why I decided to leave CloudFlare'. You can imagine my annoyance when just 3 days after leaving the services of one of the best DDoS protection providers going, my site should fall victim to a DDoS attack! I didn't receive any communication from the attacker or any indication of who was behind it. The one interesting thing I did notice from my logs was that there was 1 IP address visiting my site fairly regularly during the attacks and only during the attacks. Once the attack began and started to pick up, this IP address would make a request for my index page every 5 or 6 minutes. This was the same for the next 4 nights. The IP address was nowhere to be found in my logs other than when an attack was taking place and all they seemed to do was reload the index page on a regular basis. Very interesting indeed! My guess is that this was the attacker checking to see if the site had gone down, but who knows.
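Spotting a visitor like that by eye is tedious, so here is a rough Python sketch of how the check could be automated. It assumes you have already pulled one IP's request times out of the logs as seconds since the start of the attack. The `looks_periodic` helper, its thresholds and both sample lists are invented for illustration and are not taken from my logs.

```python
from statistics import median


def looks_periodic(timestamps, min_hits=5, tolerance=0.25):
    """Return True if the gaps between requests are all close to the median gap."""
    times = sorted(timestamps)
    if len(times) < min_hits:
        return False  # too few requests to call it a pattern
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    typical = median(gaps)
    if typical <= 0:
        return False
    return all(abs(gap - typical) <= tolerance * typical for gap in gaps)


# Hypothetical request times in seconds: a reload roughly every 5 to 6 minutes.
checker = [0, 310, 650, 960, 1290, 1620]

# Hypothetical ordinary reader: short bursts of clicks with long pauses between.
browsing = [0, 4, 9, 700, 705, 3000]

print(looks_periodic(checker), looks_periodic(browsing))  # True False
```

A match proves nothing on its own, since monitoring services and feed readers also poll at regular intervals, but it narrows down which IP addresses are worth a closer look.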

In Closing

You have no idea when such an attack might take place or what the reasons for you being the target are. As prevention is always better than a cure, I'd strongly advise you to set up some form of rate limiting or throttling on your server before you need it. Even some very loose limits can help against a large attack, and if they ever need to be tightened up during an attack, everything is already in place. You don't want to be implementing new security measures whilst under attack. Get them in place and tested before you need to depend on them.